DevSecOps in the Enterprise: 6 Classic Ways to Manage Security at Speed and Scale

Enterprise organisations are increasingly looking to adopt DevOps to build and run their software and maintain their competitive advantage. Yet they are often burdened with legacy technology and onerous processes, and lack the internal skills to adapt easily to these new ways of delivering and running software at speed and scale.

This is challenging enough, but tightly regulated operating conditions make adapting harder still. Maintaining compliance in an environment where change arrives at ever greater speed and scale requires new ways to manage security and compliance. In response, a set of practices, tools and mindset is emerging, suitably termed ‘DevSecOps’.

In this blog post, I introduce six principles and practices for managing security at speed and scale.

1. Implement Decoupled Microservice Architectures

Managing security spans the entire software delivery lifecycle (SDLC) and starts with good architecture principles and patterns.

In recent years, microservice architectures have emerged to help deliver smaller increments of change more frequently, reducing the functional footprint of individual components. This pattern and the faster delivery cadence that comes with it have introduced both opportunities and challenges for managing the security of these services.

Microservices typically run inside containers deployed on a container-based PaaS. On such a platform, encryption between microservices can be fully automated, even when endpoints and versions change frequently and new services are released continually.
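One way to make that automation concrete is to generate every service's TLS policy from a single function rather than configuring each service by hand. The sketch below, using Python's standard `ssl` module, builds a server-side context that enforces mutual TLS. The function name `build_mtls_server_context` and the idea that certificates come from an internal CA are assumptions for illustration; on a real PaaS this job is usually done by the platform or a service mesh.

```python
import ssl
from typing import Optional


def build_mtls_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Return a server-side TLS context that requires client certificates.

    Hypothetical helper: every service gets the same policy from one place,
    so releasing a new service does not mean hand-configuring encryption.
    """
    # Purpose.CLIENT_AUTH builds a context for the *server* side of a
    # connection; passing cafile means only that (internal) CA is trusted.
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH, cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Mutual TLS: reject any caller that cannot present a valid certificate.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # The service's own identity; assumed to be short-lived and
        # rotated automatically by the platform.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx
```

Because the policy lives in code, tightening it (for example, raising the minimum TLS version) is a single change that every service picks up on its next deployment.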

By developing reusable reference architectures for delivery teams to consume, the delta of change between implementations across teams is reduced. This in itself narrows the scope of what needs to be tested in the build and run stages of the SDLC for each microservice.
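To illustrate how a reference architecture narrows the testing scope, the sketch below compares a service's settings with a reference and returns only the deviations. The keys and values in `REFERENCE_ARCHITECTURE` are hypothetical, and the rule that unset keys inherit the reference value is an assumption.

```python
from typing import Any, Dict

# Hypothetical reference architecture; keys and values are illustrative only.
REFERENCE_ARCHITECTURE: Dict[str, Any] = {
    "runtime": "container",
    "ingress": "api-gateway",
    "service_to_service_tls": "mutual",
    "secrets_store": "central-vault",
    "logging": "central",
}


def deviations(service_config: Dict[str, Any],
               reference: Dict[str, Any] = REFERENCE_ARCHITECTURE) -> Dict[str, Any]:
    """Return only the settings where a service departs from the reference.

    Keys the service leaves unset are assumed to inherit the reference value,
    so the result is the delta that security testing should focus on.
    """
    return {
        key: value
        for key, value in service_config.items()
        if key not in reference or reference[key] != value
    }


payments = {"runtime": "container", "ingress": "direct-load-balancer", "cache": "redis"}
print(deviations(payments))  # {'ingress': 'direct-load-balancer', 'cache': 'redis'}
```

A service that follows the reference exactly produces an empty delta, leaving only its application-specific code to be tested.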

There is an obvious trade-off between reusability and the autonomy of delivery teams to make their own implementation decisions. Reusable architectures should not stop delivery teams from deviating from an established pattern, but they should give teams a robust, battle-tested pattern to adopt when it fits. This is where the feedback loop between delivery and enterprise architecture needs to be closed. If a delivery team wants to deviate, they should be empowered to do so.

2. Embed Architecture and Security Individuals in your Delivery Teams

To deviate from established patterns with confidence, delivery teams need architecture capability within the teams themselves. The traditional solution architecture role in enterprises is becoming less relevant as modern software delivery practices drastically shorten the time to market for innovative changes. Modern-day architects need to be hands-on and able to guide delivery from within the delivery function itself. With this in place, delivery teams can work directly with technical architects both to deviate from reference architectures and to feed back into enterprise architecture. This enables both top-down and bottom-up maintenance of patterns and practices. In some cases, such as adopting new technologies and platforms, this combined top-down and bottom-up approach is critical.

The same approach applies to the infosec function as part of delivery. Where the delivery team decides on a different approach to established patterns in the enterprise security architecture, they can work with embedded infosec analysts within the delivery function itself to gain consensus from within on the viability and risk profile of the change.

Hands-on capability also applies to your infosec function. Infosec analysts need hands-on knowledge of modern development and automation platforms so that they can give low-level direction on the risks a change introduces and how to mitigate them.

Every enterprise has finite resources, so these embedded individuals may need to work across more than one delivery team. When adopting anything fundamentally new to the enterprise, such as public cloud, they should be embedded in a single team 100% of the time. Once the first iteration of adoption is complete, the learnings can be shared with other teams and these individuals can then work across multiple teams.

3. Change your Approval Processes

Once your delivery teams are empowered to deviate from established patterns where this returns real value to the business, your approval processes also need to change. There is little point empowering delivery teams if they then have to wait for a change board that convenes on a schedule at odds with the team's delivery velocity. Between them, technical architects, infosec analysts and developers can reach consensus on the viability of a change, and delivery teams should then be allowed to proceed at acceptable risk.

Why bother employing skilled individuals if you’re not going to empower them to make decisions? If the enterprise architecture and owners of risk need to approve the changes, let this happen retrospectively.
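As a rough illustration of consensus-based approval with retrospective review, the sketch below lets a change proceed once the three embedded roles have signed off and its risk is within a threshold, then queues it for later review by enterprise architecture and risk owners. The role names, the 1 to 5 risk scale and the threshold are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Roles whose consensus lets a change proceed without a change board
# (an assumption based on the roles described above).
REQUIRED_ROLES: Set[str] = {"technical_architect", "infosec_analyst", "developer"}


@dataclass
class Change:
    change_id: str
    risk_score: int                 # hypothetical scale: 1 (low) to 5 (high)
    approvals: Set[str] = field(default_factory=set)


@dataclass
class ApprovalGate:
    max_acceptable_risk: int = 3
    retrospective_queue: List[str] = field(default_factory=list)

    def can_proceed(self, change: Change) -> bool:
        """Allow delivery to continue once the team itself has reached consensus."""
        consensus = REQUIRED_ROLES <= change.approvals
        acceptable = change.risk_score <= self.max_acceptable_risk
        if consensus and acceptable:
            # Risk owners review the change afterwards, not up front.
            self.retrospective_queue.append(change.change_id)
            return True
        # Otherwise the change escalates through the usual approval route.
        return False


gate = ApprovalGate()
change = Change("CHG-101", risk_score=2,
                approvals={"technical_architect", "infosec_analyst", "developer"})
print(gate.can_proceed(change))   # True
print(gate.retrospective_queue)   # ['CHG-101']
```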

4. Employ Talented People

This may sound obvious, but it is critical if you want to manage security at scale and speed. Good engineers have a better chance of writing secure code. Good engineers understand the opportunities and threats of operating in fast-moving environments. If you are unwilling to meet the demands of talented people, in both working conditions and rates of pay, you must also accept the impact this has on your ability to empower them to make the right delivery choices.

5. Educate

There are always varying abilities in delivery teams. There should be established leadership with the experience to lead the team and make appropriate decisions. There are also less experienced, future superstars in the team. Across all levels of ability and experience, educating your teams in security best practice is key. Engineers need to be aware of new attack vectors that come to light with the ever-increasing breadth of technology. They need to know how to operate and develop securely, but they also need to know why.

One of my colleagues at Contino once said to me that good development is all about thought and good habits. Part of this thinking and habits must include security considerations. By educating around practices and the rationale behind them, you are empowering your teams with the how and, more importantly, the why.

6. Automate

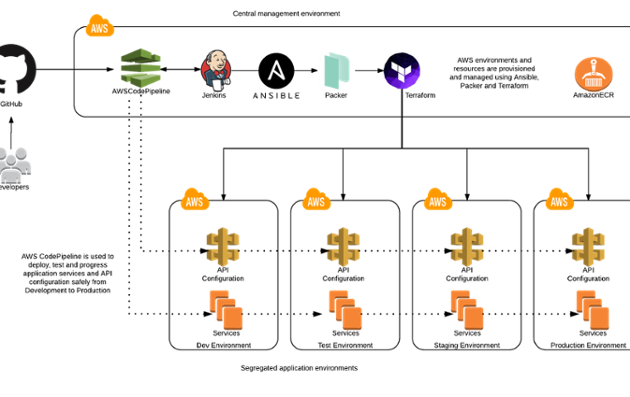

The proliferation of applications and services within modern-day organisations requires automation to underpin the build and run stages of software delivery. It is nearly impossible to manage your application portfolio at scale and speed without automation to reduce the resource overhead. This applies to all aspects of software delivery, including detecting and remediating issues that affect the compliance and integrity of your systems.

During the build stage, automation can detect potential issues from within the developer's IDE itself, before the code is even compiled. Binary analysis can be performed automatically against a given set of known threats when producing release artifacts.
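The simplest form of checking artifacts against known threats is signature matching: hash each release artifact and compare it with a list of known-malicious digests. The sketch below does exactly that with SHA-256. It is a deliberately minimal stand-in for real binary analysis tools, which do far more, and the artifact names and `KNOWN_BAD` contents are made up for illustration.

```python
import hashlib
from typing import Dict, List, Set


def flag_known_threats(artifacts: Dict[str, bytes], known_bad: Set[str]) -> List[str]:
    """Return names of artifacts whose SHA-256 digest matches a known-bad signature."""
    return sorted(
        name
        for name, data in artifacts.items()
        if hashlib.sha256(data).hexdigest() in known_bad
    )


# Hypothetical threat feed: in practice, digests would come from a threat-intelligence source.
KNOWN_BAD = {hashlib.sha256(b"malicious payload").hexdigest()}

# Artifact contents are inlined here; a pipeline would read the built files' bytes.
release = {"app.jar": b"compiled application", "plugin.so": b"malicious payload"}
print(flag_known_threats(release, KNOWN_BAD))  # ['plugin.so']
```

A non-empty result would typically fail the build, so a flagged artifact never reaches a release.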

In the run stage, an enterprise can define its compliance standards as a set of testable rules that can be exercised whenever change is detected across the platform. Logs can be harvested and profiled to identify potentially malicious attacks, but there is little benefit in simply harvesting logs without the ability to analyse them at scale and alert where necessary. Elastic infrastructure that scales automatically while systems are under DDoS attack allows enterprises to sustain service until the attack subsides.
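Compliance rules written as code might look something like the sketch below: each rule is a named predicate over a resource description, and an event handler evaluates them whenever a resource changes. The rule names, resource fields and the `on_change` hook are hypothetical; real platforms would feed this from their own change events and use a dedicated policy engine.

```python
from typing import Any, Callable, Dict, List

Resource = Dict[str, Any]
Rule = Callable[[Resource], bool]

# Hypothetical compliance standard expressed as named, testable rules.
RULES: Dict[str, Rule] = {
    "storage-encrypted-at-rest":
        lambda r: r.get("type") != "storage" or r.get("encrypted") is True,
    "no-public-ingress":
        lambda r: "0.0.0.0/0" not in r.get("ingress_cidrs", []),
    "owner-tag-present":
        lambda r: bool(r.get("tags", {}).get("owner")),
}


def evaluate(resource: Resource, rules: Dict[str, Rule] = RULES) -> List[str]:
    """Return the names of the rules this resource violates."""
    return [name for name, rule in rules.items() if not rule(resource)]


def on_change(resource: Resource) -> None:
    """Hypothetical hook, called whenever the platform detects a change."""
    violations = evaluate(resource)
    if violations:
        print(f"ALERT {resource['id']}: {', '.join(violations)}")


bucket = {"id": "bucket-42", "type": "storage", "encrypted": False,
          "tags": {"owner": "payments"}}
on_change(bucket)  # prints "ALERT bucket-42: storage-encrypted-at-rest"
```

Because each rule is a plain function, the standard itself can be unit-tested and versioned like any other code.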

There is still a need to penetration test your systems manually, but delivering change more often and more quickly depends on automation to manage the changes that fall between larger, fundamental changes to your systems. Significant functional changes still require manual testing, consultation and analysis.
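Deciding which changes count as "significant" can itself be automated. The sketch below routes a change to manual penetration testing if it touches sensitive code paths, adds an externally reachable endpoint, or exceeds a size threshold, and otherwise relies on automated checks alone. The paths, the 500-line threshold and the `ChangeSet` fields are illustrative assumptions, not a recommended policy.

```python
from dataclasses import dataclass
from typing import FrozenSet

# Hypothetical areas where any change is treated as significant.
SENSITIVE_PATHS = ("auth/", "payments/", "crypto/")


@dataclass(frozen=True)
class ChangeSet:
    files: FrozenSet[str]
    lines_changed: int
    adds_external_endpoint: bool = False


def needs_manual_pentest(change: ChangeSet, max_routine_lines: int = 500) -> bool:
    """Routine changes rely on automated checks; significant ones also get a manual test."""
    # str.startswith accepts a tuple, matching any of the sensitive prefixes.
    touches_sensitive = any(f.startswith(SENSITIVE_PATHS) for f in change.files)
    return (touches_sensitive
            or change.adds_external_endpoint
            or change.lines_changed > max_routine_lines)


routine = ChangeSet(frozenset({"ui/button.ts"}), lines_changed=40)
print(needs_manual_pentest(routine))  # False
```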

Access management, or more accurately access lag (expensive resources waiting for access to systems), is a burden on delivery teams. Access management should be automated for both granting and revoking access. Enterprises have approval processes that must be followed, but these should be fully automated as workflows. Federate your services with centralised identity management systems, but provide a self-service request and approval model. Federation reduces the proliferation of identity stores across services and enables a centralised account compliance function. None of this should hinder people trying to do something useful while they wait for access.
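A self-service model with automatic granting and revocation could be sketched as below: requests that match a pre-approved policy are granted immediately with an expiry, and a periodic job revokes anything that has expired. `AccessBroker`, the team-to-role policy and the eight-hour default are hypothetical; `user` is assumed to be an identity from the central, federated identity provider.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional, Tuple


@dataclass
class AccessBroker:
    """Hypothetical broker: approve by policy, grant with expiry, revoke automatically."""
    # Roles each team may self-approve (an assumed policy, not a real product's).
    auto_approvable: Dict[str, List[str]]
    # (user, role) -> expiry time; users are identities from the central IdP.
    grants: Dict[Tuple[str, str], datetime] = field(default_factory=dict)

    def request(self, user: str, team: str, role: str, hours: int = 8,
                now: Optional[datetime] = None) -> bool:
        now = now or datetime.now(timezone.utc)
        if role not in self.auto_approvable.get(team, []):
            return False  # falls back to a human approver in the workflow
        self.grants[(user, role)] = now + timedelta(hours=hours)
        return True

    def revoke_expired(self, now: Optional[datetime] = None) -> List[Tuple[str, str]]:
        """Remove and return every grant whose expiry has passed."""
        now = now or datetime.now(timezone.utc)
        expired = [key for key, expiry in self.grants.items() if expiry <= now]
        for key in expired:
            del self.grants[key]
        return expired


t0 = datetime(2024, 1, 1, 9, tzinfo=timezone.utc)
broker = AccessBroker(auto_approvable={"payments": ["read-logs"]})
print(broker.request("alice", "payments", "read-logs", now=t0))  # True
print(broker.revoke_expired(now=t0 + timedelta(hours=9)))        # [('alice', 'read-logs')]
```

Time-bound grants mean revocation happens by default rather than relying on someone remembering to remove access.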

Changing threat models

DevOps has enabled organisations to deliver innovative change more quickly and maintain competitive advantage. In doing so, the threat model has changed considerably within enterprises that adopt these practices. Hopefully the above considerations will help you on your journey to delivering better software more quickly whilst still maintaining compliance and integrity of your systems.

---

Considering DevOps, eh?

It’s enough of a challenge to implement DevOps successfully in an enterprise in the first place, without the drag of traditional vendors as an additional issue to deal with. Many enterprises want DevOps ideals and ways of working, but both enterprises and vendors need a huge shift in mindset from vendor to partner if they are to achieve it.

Check out our blog 'Doing DevOps? You Need A Partner, Not A Vendor' for some industry insights on how to get the most out of your vendor relationships when moving to Dev(Sec)Ops!
