How to Engineer for Real Reliability Using Dickerson’s Hierarchy

Reliability is consistently underappreciated, not just in technology but in life in general.

When something is reliable it is largely ignored. For engineers, worrying about reliability stops us getting to sleep, and the lack of it wakes us up at 2am.

But reliability goes beyond the red dashboards of doom. As a business, you can lose time, reputation and, crucially, money when reliability becomes an issue. And retrospectively trying to resolve problematic areas of your application or infrastructure is expensive and is usually too little, too late.

Why Reliability Matters

Consider reputation, for example. We’ve all seen the unhappy customer that sends a tweet when they don’t get the service they expect. That usually has (relatively small) financial implications but consider this: can an organisation reasonably expect to hire (and retain) top talent if word gets about that their systems are a series of fires that need putting out?

Suddenly, this isn’t just about customers and the financial impact that reputational damage can cause. Recruitment and hiring will also suffer because, as technologists, job satisfaction comes from being able to innovate and deliver features that prove to be popular and well received.

By making reliability an afterthought at the expense of getting that new feature out quickly, teams embark on a journey of late nights, rework and demotivated engineers starved of the freedom to innovate. Tech debt grows and before you know it, that shiny new feature that was supposed to make the company millions has ended up costing more than it made, and the best engineers spend their time performing surgery just so they don’t get the dreaded call at 2am.

So, as a Product Owner, how do you ensure that you’re getting the most business value out of your dev team by building a reliable base for the bells and whistles they love to work on?

How to Engineer for Real Reliability

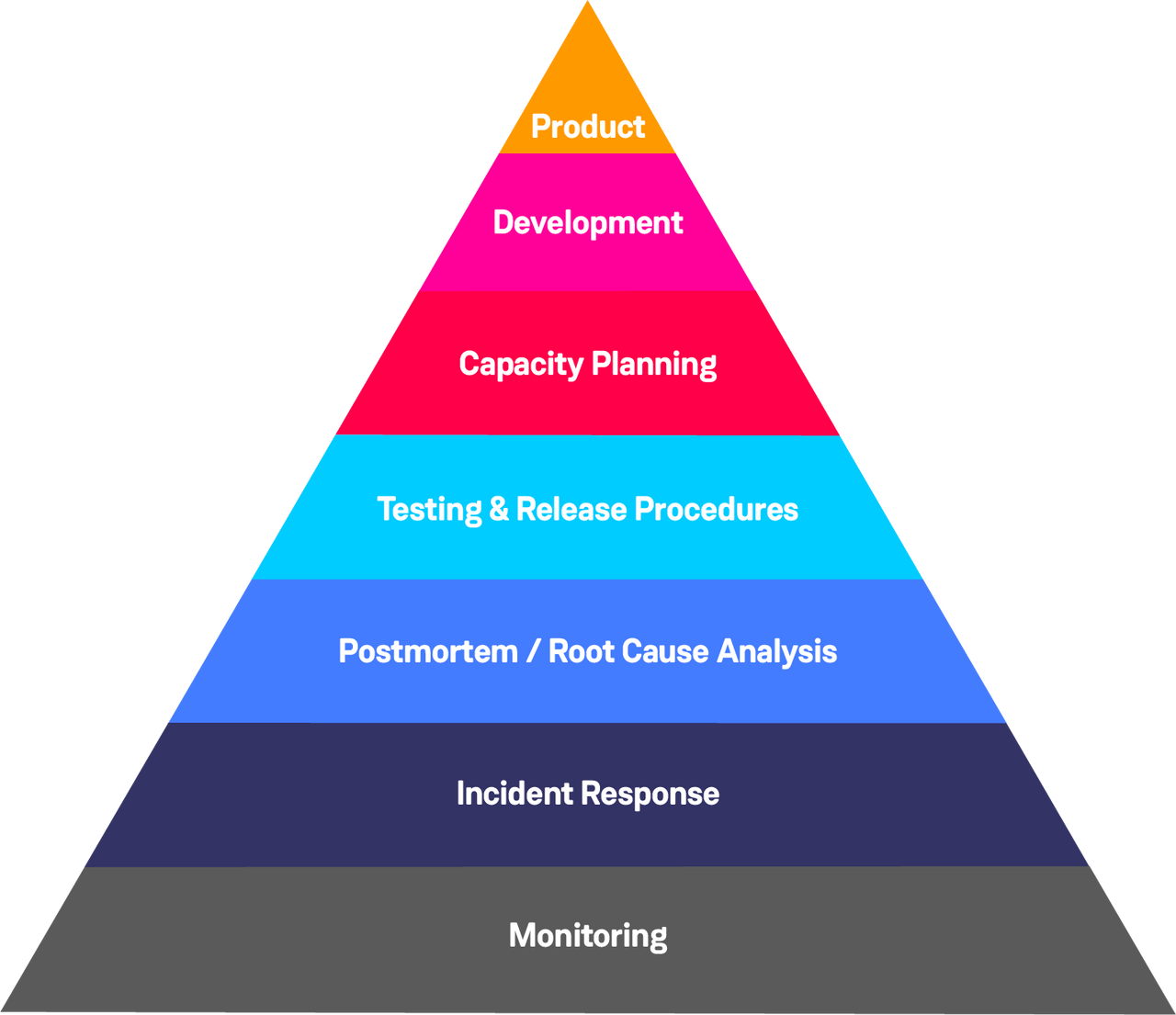

I’ve found Dickerson’s Hierarchy of Reliability to be a useful model for achieving reliability in your engineering endeavours. The pyramid above shows the different stages and aspects of software delivery that need to be addressed.

Based on Maslow’s hierarchy of needs (something my colleague David Dyke talks about here), the premise is simple: before you move on to the next level of the pyramid you need to make sure that the previous level is solid (dare I say, reliable?!).

I’ll go through them one by one, addressing key ideas and concerns.

Monitoring

Monitoring broadly comprises two parts: metrics and logs.

Metrics and logs give us the objective data we need to see how things are going. Operational awareness is key and we have to know what we’re monitoring. If we don’t, we lose sight of how well our complex applications are running, and the areas that require optimisation fly under the radar.

Knowing which data points are valuable is essential for operations teams so that they can configure appropriate alert rules and act upon them.

Alerts should be for exceptions only. Generating alerts to let us know “everything is ok” is a bad idea, not just for our mailbox size but because we become desensitised to those alerts and are more likely to ignore them.

I like the term ‘actionable alerts’ because it accurately describes their intended purpose. I don’t want a notification telling me that the last build was successful, just let me know when I need a human to intervene. Our systems aren’t going to be available 100% of the time so when there is a problem, we need to be able to identify it straight away.
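As a rough illustration of "exceptions only", the sketch below filters a stream of events down to those that need a person. The `Event` fields, the source names and the `needs_human` flag are all hypothetical; a real alerting tool would express this as rules rather than Python.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    source: str        # hypothetical names, e.g. "build", "nightly-backup"
    status: str        # "ok" or "failed"
    needs_human: bool  # assumption: set by whoever defines the check


def actionable_alerts(events):
    """Keep only events that failed AND require someone to intervene."""
    return [e for e in events if e.status != "ok" and e.needs_human]


events = [
    Event("build", "ok", False),               # success: no notification
    Event("build", "failed", True),            # someone has to look at this
    Event("nightly-backup", "failed", False),  # retried automatically
]

for alert in actionable_alerts(events):
    print(f"ALERT: {alert.source} {alert.status}")
```

Only the failed build produces an alert; the successful build and the self-healing backup stay out of the inbox.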

Finally, perspective is key. It is crucial to understand that reliability is measured from the customer’s perspective, not the component’s. The severity of an incident depends on whether customers are having a problem. Is it a red alert, or could it be fixed later? If nobody notices, you can probably finish your game of golf first.
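One way to picture severity from the customer's side is the minimal sketch below. The function name, the labels and the 5% threshold are assumptions made for illustration, not values from Dickerson's model.

```python
def severity(customer_error_rate: float, component_down: bool) -> str:
    """Classify an incident by customer impact first, component state second.

    The 5% threshold and the three labels are hypothetical.
    """
    if customer_error_rate >= 0.05:
        return "red-alert"  # customers are hurting: wake someone up
    if customer_error_rate > 0.0 or component_down:
        return "ticket"     # limited or no visible impact: fix it later
    return "none"


# A failed component that customers never notice is not a red alert.
print(severity(customer_error_rate=0.0, component_down=True))
```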

Once you’ve identified the data points and are using these to create your actionable alerts, you can move up the pyramid and define what happens when incidents arise.

Incident response

Our customers judge us on our ability to recover quickly. Incidents are generally feared and the temptation is to downplay them because we worry that, by talking about them, we face being reprimanded or that they will reflect poorly on our engineering ability.

As a result, and to avoid any repercussions, information on current status can be more difficult to find than it should be. If your Time To Recover is terrible and getting worse, this will in part be the reason!

Incidents tell us a lot about what is going on in our systems and (providing you have shored up your monitoring capability in the first level) are not to be feared but rather used as opportunities to improve and build on. From a people perspective, knowing who is involved will help us get an eyewitness account of what transpired and will aid us in answering key questions such as:

- When did we know about an event?

- How did we find out?

- Who is aware of it, and are they doing anything about it?

- How bad is it?

The foundation of a good incident response should include:

- A well-defined method for identifying who the members of the on-call team are and what the current support rotation is, so that we can quickly find out who is available to respond

- Clearly defined roles and responsibilities to help us get to work as quickly as possible. Your First Responder will acknowledge the incident within SLA, an Incident Commander will be the single point of contact and your SME will be on hand to oversee the technical activities undertaken to resolve the problem.

These are just a few examples.
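To make the "who is on call right now?" question concrete, here is a minimal sketch of a weekly rotation lookup. The engineer names, the start date and the weekly cadence are all hypothetical; most teams would get this answer from their paging tool instead.

```python
from datetime import date

# Hypothetical rotation: one engineer per week, in this order.
ROTATION = ["alice", "bob", "carol"]
ROTATION_START = date(2024, 1, 1)  # a Monday, chosen for illustration


def on_call(today: date) -> str:
    """Return the engineer whose week contains `today`."""
    weeks_elapsed = (today - ROTATION_START).days // 7
    return ROTATION[weeks_elapsed % len(ROTATION)]


print(on_call(date(2024, 1, 10)))
```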

The rise of collaboration tools like Slack means it is much easier for us to understand minute by minute what is going on during an incident. Creating a space or channel specifically for an incident and encouraging the on-call engineers to communicate throughout the incident acts as an activity log that can be used to educate us about what went wrong, and why.

‘Postmortem’/Root Cause Analysis

When an incident does occur, the goal should always be to learn from failure.

The key to removing the fear factor around incidents is to create a safe space when conducting a post-incident review. Focus not on pointing the finger, but on how the technology and process failed the individual involved. Turn “who did this?” into “what did our monitoring not give the person in order to do their job?” or “what part of the automated process made it super easy to delete an entire environment at 2am?”

If post-incident reviews consist of shouting and looking for a scapegoat, then engineers won’t engage and won’t speak up and provide that crucial piece of information that could prevent it happening again. I once heard someone say “You can’t fire your way to reliability” and when all is said and done, human error is usually a symptom of a much larger issue.

And please, please, please, drop the term ‘post-mortem’. We want to learn more about an incident than just the cause, and ‘post-mortem’ not only suggests that our interest is limited to what caused it, but that the broken area of the system is permanently unrecoverable.

Identifying where process failures occur helps us gather information that can be used to educate the engineers who can build checks into pipelines and automated processes. Moving these checks up the pyramid ensures that what we’ve learnt from our post-incident reviews makes it into code, and reduces the chance that we’ll see a repeat of these in production, by testing them before a release is promoted.
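As an example of a review finding turned into code, the sketch below guards against the "delete an entire environment at 2am" scenario. The function name, the protected environment list and the `confirmed_by` parameter are hypothetical; the point is only that the lesson lives in the pipeline rather than in someone's memory.

```python
from typing import Optional

# Hypothetical list of environments that must never be deleted casually.
PROTECTED_ENVIRONMENTS = {"production"}


def guard_environment_delete(environment: str,
                             confirmed_by: Optional[str] = None) -> None:
    """Raise unless deleting `environment` is safe or explicitly confirmed."""
    if environment in PROTECTED_ENVIRONMENTS and not confirmed_by:
        raise PermissionError(
            f"Refusing to delete '{environment}' without a named confirmation"
        )


guard_environment_delete("dev")  # passes silently
guard_environment_delete("production", confirmed_by="incident-commander")
```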

My colleague Simon Copsey also just posted a great blog on how to use systems thinking to uncover the root cause of organisational issues. Well worth a look!

Test and Release Procedures

By now, most of us are working in an agile team of some kind: maybe sprints or deploying incremental change in small pieces that are simple to deploy. But as we know, even the smallest pieces can cause unexpected issues if the correct testing procedures are not in place. Make sure you have good unit tests in place to provide quick feedback and allow us to fix issues before they make it to production.

Integrating our build pipelines with work tracking tools is also helpful. It enables us to automate the creation of work items based on the output of the testing stage. Built-in testing is also crucial in creating valuable, fit for purpose pipelines; developers who spend less time running manual tests have more time to work on those items which add real business value, such as new features, which enable businesses to stay ahead of the competition. Manual testing has its place, but by making sure that we automate where possible we can ensure our release process meets its goals.

A good release process has the following characteristics:

- It reduces stress and increases reliability: high job satisfaction and positive customer impact are the result of reliable deployments

- It allows us to fix issues faster: who wants to wait two months for that spelling correction?

- It reduces time to market: it delivers quickly on innovation

A good rule of thumb is to automate as many of the smaller, cheaper tests as possible. Manual testing is expensive so try to keep the number of these as low as possible.

Automated testing not only helps ensure that our applications function as our customers expect, but also that the platform for those applications can handle the additional functionality that comes with every release. If your application under-performs during a testing cycle, it’s a good indication that something is wrong within the application, or that you need to review the scale of the supporting infrastructure.

Capacity Planning

Capacity planning is something that everyone does, but very often badly.

Good capacity planning involves identifying points of failure. By being aware of resource limits and understanding current utilisation, we can better plan for increases in traffic in reaction to an event such as a new product, or something seasonal like Black Friday.
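A back-of-the-envelope version of this check might look like the sketch below. The function name and the figures are invented for illustration; real planning would use measured limits and utilisation per resource.

```python
def headroom(capacity_rps: float, peak_rps: float,
             expected_growth: float) -> float:
    """Fraction of capacity left after traffic grows by `expected_growth`.

    expected_growth=1.0 means traffic doubles. A negative result means
    the projected peak exceeds what the system can serve.
    """
    projected_peak = peak_rps * (1 + expected_growth)
    return (capacity_rps - projected_peak) / capacity_rps


# Hypothetical numbers: 1,000 requests/s limit, traffic doubling for an event.
print(headroom(capacity_rps=1000, peak_rps=400, expected_growth=1.0))
print(headroom(capacity_rps=1000, peak_rps=600, expected_growth=1.0))
```

The first case leaves spare capacity; the second goes negative, which is the signal to scale before the event rather than during it.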

Coincidentally, reliability is a secondary benefit of scalability. Scalability is a direct result of good capacity planning and as such is no longer the sole responsibility of the ops team. Capacity planning is a cross-team activity and regular reviews of an application and its architecture are beneficial in staying on top of scalability.

Finally, by designing an appropriate monitoring strategy we can get a better understanding of whether a bug in the system is causing an unnecessary spike in resource consumption or causing our system to become inefficient over time. It is also important to consider when to scale back in should utilisation drop during quiet periods. How can you leverage the benefits of cost-effective cloud computing if you focus on the peaks and not the troughs?
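The idea of planning for the troughs as well as the peaks can be sketched as a simple scaling rule. The thresholds, the step size of one instance and the minimum of two are assumptions; a cloud provider's autoscaler would normally own this logic.

```python
def desired_instances(current: int, utilisation: float,
                      scale_out_at: float = 0.75,
                      scale_in_at: float = 0.30,
                      minimum: int = 2) -> int:
    """Return the instance count to run, given average utilisation (0 to 1)."""
    if utilisation > scale_out_at:
        return current + 1                # peak: add capacity
    if utilisation < scale_in_at:
        return max(minimum, current - 1)  # trough: hand capacity back
    return current                        # within the comfortable band


print(desired_instances(current=4, utilisation=0.85))
print(desired_instances(current=4, utilisation=0.10))
```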

Developers need to understand the requirements of the underlying platform in order to ensure that what they design and build is appropriate, and that the key areas of an application publish enough valuable information about what is going on.

Development

Developing for reliability can be a chicken and egg situation.

Developers ask how they can design for reliability without the metrics provided by the run teams, and the run teams ask how they’re expected to provide those metrics and create actionable alerts when the applications don’t make the data available to them.

Unfortunately, it’s not quite as simple as writing a bunch of ‘try...catch’ statements. We have to ask ourselves:

- Is the tool we need worth building or does an off-the-shelf product meet our requirements?

- Do we know how this is being done elsewhere?

There’s always the temptation to ‘roll your own’ solution to a problem, but the chances are that a hundred other companies have overcome the same challenge. Some of those popular off-the-shelf products are popular for a reason! Don’t be afraid to network and attend conferences to hear about what other companies are doing.

Sometimes we stifle our ability to innovate by spending time building tools that already exist, or that solve a problem which is really just a pet peeve, rather than one which is felt across the engineering organisation.

Most of us want to build The Shiny Thing, but have we invested enough time in the lower layers of the pyramid so that we have the time to spend on building those eye-catching features that make our product stand out from the rest?

Product (or UX)

So now we get to the top of the pyramid: the product itself!

This is arguably the most important part because this is what our customers see. I said earlier that “reliability is measured from the customer’s perspective, not the component’s”, which might sound obvious, but this is very often lost in organisations where engineers have zero visibility of what the customer experiences when using a product or service.

Take time to review what you’re building through the lens of the customer:

- Where are customers accessing your product from?

- When are they accessing it?

- How are they accessing it?

Understanding the application in the context of the user and feeding this into the engineering teams will ensure that engineers can use these as guiding principles when working up through the pyramid. This includes giving consideration to the delivery of an application or service. By adopting deployment strategies like canary releases or blue/green environments, you can control how much of your customer base receives an update (or how much they are impacted by an outage!).
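To show how a canary release limits who receives an update, here is a minimal sketch that assigns each user to a stable bucket. The function name and the hashing scheme are assumptions; in practice a load balancer or feature-flag service usually makes this decision.

```python
import hashlib


def in_canary(user_id: str, percent: int) -> bool:
    """Return True if this user should receive the canary release.

    Hashing the user id gives each user a stable bucket from 0 to 99,
    so the same user sees the same version on every request.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:2], "big") % 100
    return bucket < percent


# Roll out to roughly 10% of a hypothetical customer base.
users = [f"user-{n}" for n in range(10_000)]
exposed = sum(in_canary(u, percent=10) for u in users)
print(f"{exposed} of {len(users)} users receive the new version")
```

Raising `percent` step by step widens the rollout, and setting it back to 0 withdraws the update from everyone.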

Failure to understand one, or all, of the questions above will impact each tier of the pyramid, which in the long term will impact reliability, reputation, and profitability.

Take the Time to Build a Solid Pyramid!

By taking the time to ensure that each tier of the pyramid meets the requirements of the teams that are building and operating the application (or platform) you provide a stable base that will free your engineers from the tyranny of unplanned work and late nights.

By building reliability into your definition of done, you promote engineering excellence, which over time will become second nature to engineers writing the code and completing user stories. Dangling the carrot of innovation in front of engineers is a powerful motivation tool, because none of us leap out of bed in the morning to dig through log files.

If you don’t heed the warnings you’ll end up with unhappy engineers, unhappy shareholders and a disgruntled customer base. And those engineers? Well, they’ll start to look for a new job that will give them satisfaction and quench their thirst to do something valuable.