Kubernetes Is Hard: Why EKS Makes It Easier for Network and Security Architects

Running a Kubernetes cluster today is hard. You first need to decide which cloud (or datacentre) you are going to deploy it to, then meticulously select appropriate network, storage, user and logging integrations.

If your requirements lie somewhere outside the norm, good luck finding a tool to wrap that up for you!

If you’re running in Google Cloud, you already have a battle-hardened, production-ready Kubernetes service available to you in GKE, but according to the Cloud Native Computing Foundation, 63% of Kubernetes workloads currently sit in AWS. In response, AWS has announced its plans to provide Kubernetes as a managed service with EKS. If you want to find out more about the EKS and Fargate announcement, check out Carlos’s blog post here.

Today, we’ll have a look at why the Kubernetes network stack is overly complex, how AWS’s VPC Container Network Interface (CNI) plugin simplifies the stack, and how it enables microsegmentation with security groups.

By the way, if you find this post interesting and would like to hear more, you can catch Rory at AWS Summit in Sydney on Wednesday 10 April at 10:45am, giving a 15-minute talk on Secure Kubernetes in AWS!

Beware: The Kubernetes Network Stack!

The most contentious point for building a Kubernetes cluster is the network stack. There are a tonne of providers available and if you have never deployed K8s before, it can be pretty daunting!

Kubernetes handles networking differently from the usual ‘Docker way’ of doing things. Docker uses port forwarding from the host to the container over a virtual bridge network. This means ports have to be coordinated across every host, which is difficult to do at scale and exposes users to cluster-level issues outside of their control.
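Here’s a rough sketch of the bookkeeping that port forwarding pushes onto you. With Docker’s default bridge networking, a container is reachable from other hosts only through a port published on its host (the `-p host:container` flag), and each host port can be bound only once per host. `HostPortTable` and its methods are hypothetical, not a Docker API; they just model the collisions someone has to resolve once many containers share a handful of hosts.

```python
class HostPortTable:
    """Hypothetical tracker of which host ports are already published on each Docker host."""

    def __init__(self) -> None:
        # host name -> {host port: container name}
        self._bindings: dict[str, dict[int, str]] = {}

    def publish(self, host: str, host_port: int, container: str) -> None:
        """Record a `-p host_port:container_port` style mapping, refusing duplicates."""
        ports = self._bindings.setdefault(host, {})
        if host_port in ports:
            raise ValueError(f"{host}:{host_port} already published by {ports[host_port]}")
        ports[host_port] = container

    def free_port(self, host: str, start: int = 8000, end: int = 9000) -> int:
        """Return the first unpublished host port in [start, end) on this host."""
        used = self._bindings.get(host, {})
        for port in range(start, end):
            if port not in used:
                return port
        raise RuntimeError(f"no free host ports left on {host}")


table = HostPortTable()
table.publish("node1", 8080, "web-1")
try:
    table.publish("node1", 8080, "web-2")  # a second web container wants the same port
except ValueError as err:
    print(err)  # node1:8080 already published by web-1
table.publish("node1", table.free_port("node1", 8080), "web-2")  # falls back to 8081
```

Every client of `web-2` now has to discover that it lives on port 8081 rather than 8080, which is exactly the coordination problem Kubernetes sidesteps by giving each pod its own IP.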

Kubernetes on the other hand imposes the following requirements on its network implementation:

- All pods should get their own IP address, and the IP address a container sees for itself should be the same one that others use to reach it

- All containers can communicate with each other without NAT

- All nodes can communicate with all containers (and vice-versa) without NAT
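One way to read these requirements is as checks you could run against a cluster. The sketch below assumes you already know the IP each pod believes it has and, for each connection, the source IP the receiver actually saw; `Flow` and `check_network_model` are hypothetical names, not part of Kubernetes. Nodes can be added to `addresses` the same way to cover the node-to-pod requirement.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """One observed connection and the source IP the receiver actually saw."""
    src: str          # name of the sending pod (or node)
    dst: str          # name of the receiving pod (or node)
    seen_src_ip: str  # source address as observed at the destination


def check_network_model(addresses: dict[str, str], flows: list[Flow]) -> list[str]:
    """Return violations of the Kubernetes network requirements (hypothetical checker).

    `addresses` maps each pod (or node) name to the IP it believes it has.
    """
    problems = []
    # Every pod gets its own IP address: no two may share one.
    owners: dict = {}
    for name, ip in addresses.items():
        addr = ipaddress.ip_address(ip)
        if addr in owners:
            problems.append(f"{name} shares {ip} with {owners[addr]}")
        else:
            owners[addr] = name
    # No NAT: the receiver must see the sender's own IP as the source.
    for flow in flows:
        own_ip = ipaddress.ip_address(addresses[flow.src])
        if ipaddress.ip_address(flow.seen_src_ip) != own_ip:
            problems.append(f"{flow.src} -> {flow.dst} was NATed to {flow.seen_src_ip}")
    return problems


addresses = {"frontend-1": "10.1.15.2", "backend-2": "10.1.20.3"}
print(check_network_model(addresses, [Flow("frontend-1", "backend-2", "10.1.15.2")]))
# []
print(check_network_model(addresses, [Flow("frontend-1", "backend-2", "192.168.0.100")]))
# ['frontend-1 -> backend-2 was NATed to 192.168.0.100']
```

The second flow is what Docker-style masquerading produces: the backend sees the host’s address rather than the pod’s, which breaks the no-NAT rule.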

Kubernetes uses a plugin specification called the Container Network Interface (CNI) to integrate the platform with the underlying network infrastructure. As long as the requirements above are met, vendors are free to implement the network stack as they like, and they typically use overlay networks to support multi-subnet, multi-AZ clusters.

What Is an Overlay Network?

An overlay network is a type of networking whereby administrators create multiple isolated, virtualized network layers on top of the physical infrastructure. It decouples network services from the underlying infrastructure by using encapsulation techniques, such as NVGRE or VXLAN, to wrap one packet inside another.
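To make encapsulation concrete, here’s a minimal sketch of the VXLAN step: an 8-byte header (a flags byte with the "VNI valid" bit set, plus a 24-bit VXLAN Network Identifier, as laid out in RFC 7348) is prepended to the original Ethernet frame. In a real deployment the result is then carried in UDP (IANA port 4789) inside an outer IP packet addressed host to host. The sketch stops at the VXLAN header, and the inner frame is a placeholder.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid (RFC 7348)


def vxlan_encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """Prefix an inner Ethernet frame with an 8-byte VXLAN header."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + 24 reserved bits. Word 2: VNI (24 bits) + 8 reserved bits.
    header = struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
    return header + inner_frame


def vxlan_decapsulate(payload: bytes) -> tuple[int, bytes]:
    """Strip the VXLAN header and return (vni, inner_frame)."""
    if len(payload) < 8:
        raise ValueError("payload shorter than a VXLAN header")
    flags_word, vni_word = struct.unpack("!II", payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag not set")
    return vni_word >> 8, payload[8:]


inner = b"\x00" * 14 + b"inner IP packet"  # placeholder Ethernet frame
wrapped = vxlan_encapsulate(42, inner)
print(len(wrapped) - len(inner))  # 8
print(vxlan_decapsulate(wrapped) == (42, inner))  # True
```

The VNI is what lets one physical network carry many isolated overlays: the receiving host uses it to decide which virtual network the inner frame belongs to.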

A common example of an overlay network is the AWS Virtual Private Cloud, which allows Amazon to run thousands of private, isolated networks across millions of devices, spanning multiple sites and regions.

In the context of Kubernetes, an overlay network allows Pods in a cluster to communicate across multiple hosts using an IP range separate from that of the underlying VPC.

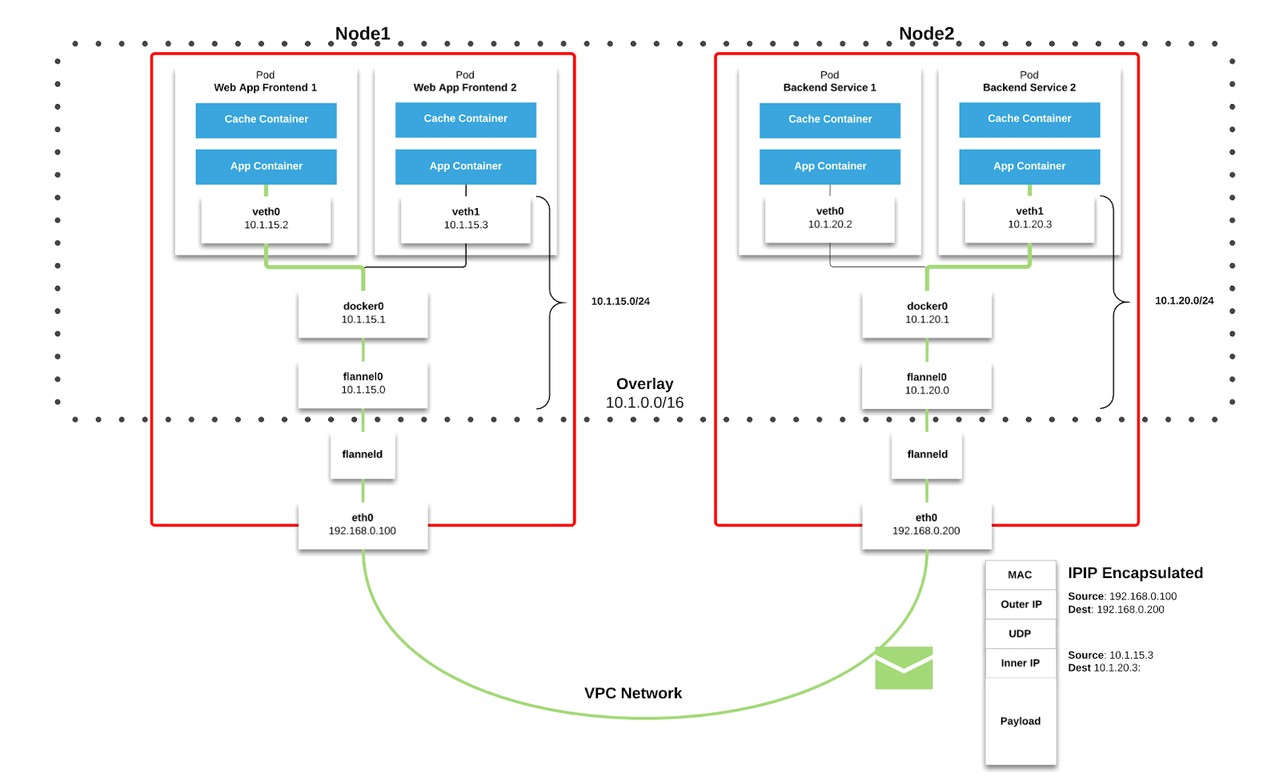

In the following example, we can see how overlay networks are implemented by a popular CNI plugin called Flannel. Assume the pod Web App Frontend 1, located on Node1, wants to talk to the pod Backend Service 2, located on Node2.

Web App Frontend 1 creates an IP packet with source 10.1.15.2 and destination 10.1.20.3. The packet leaves the virtual adapter (veth0) attached to that pod and arrives at the docker0 bridge. The host then consults its route table, sees that all endpoints outside of 10.1.15.0/24 sit on other hosts, and forwards the packet to the flannel0 interface accordingly.

The flannel0 interface works with the flanneld process, which manages a mapping of hosts to pod subnets in etcd, the key-value store behind Kubernetes. In this case, flanneld knows that 10.1.20.3 sits on the host 192.168.0.200, so it wraps the packet in another packet with source 192.168.0.100 and destination 192.168.0.200.

The packet then travels across the VPC network to the destination host and reaches that host’s flanneld. From there, the process is reversed and the original packet is delivered to the destination pod.
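The Flannel walkthrough boils down to a subnet lookup followed by a wrap and an unwrap. Here’s a minimal simulation, assuming a plain dictionary stands in for the subnet-to-host mapping flanneld keeps in etcd; `Packet`, `send` and `receive` are hypothetical and ignore the real UDP and TUN-device mechanics. The addresses are the ones from the example.

```python
import ipaddress
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    payload: Union[bytes, "Packet"]  # an overlay packet carries another packet


# Hypothetical stand-in for the subnet -> host mapping flanneld reads from etcd.
SUBNET_TO_HOST = {
    ipaddress.ip_network("10.1.15.0/24"): "192.168.0.100",  # Node1
    ipaddress.ip_network("10.1.20.0/24"): "192.168.0.200",  # Node2
}


def host_for(pod_ip: str) -> str:
    """Find which host owns the pod subnet containing pod_ip."""
    addr = ipaddress.ip_address(pod_ip)
    for subnet, host in SUBNET_TO_HOST.items():
        if addr in subnet:
            return host
    raise LookupError(f"no host owns {pod_ip}")


def send(inner: Packet, local_host: str) -> Packet:
    """Deliver locally if the destination pod is on this host, else wrap host-to-host."""
    remote_host = host_for(inner.dst)
    if remote_host == local_host:
        return inner  # same node: no encapsulation needed
    return Packet(src=local_host, dst=remote_host, payload=inner)


def receive(outer: Packet) -> Packet:
    """Destination side: unwrap and hand the original packet to the pod."""
    if not isinstance(outer.payload, Packet):
        raise TypeError("not an encapsulated packet")
    return outer.payload


inner = Packet("10.1.15.2", "10.1.20.3", b"GET /api")
outer = send(inner, local_host="192.168.0.100")
print(outer.src, outer.dst)     # 192.168.0.100 192.168.0.200
print(receive(outer) == inner)  # True
```

Note that the outer header only ever carries the two node addresses; the pod addresses are hidden inside the payload.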

And now remember that the VPC is also an overlay encapsulating the packet. That’s three network layers!

It’s complex as hell!

Not only that, running an overlay network in AWS doesn’t work well.

Why not?

VPC Flow Logs don’t work: The underlying VPC has no context for the encapsulated overlay network running on top of it. VPC Flow Logs will only show traffic between the hosts in the Kubernetes cluster, which makes troubleshooting any network-related issue a pain.

Security Groups don’t work: Since the VPC has no context for the overlay network, it is unable to apply security policies to individual pods and can only apply them to the nodes in the Kubernetes cluster. This means you need to run, manage and maintain two sets of network policy controls.

I have another thing I can break: By implementing an overlay network, I have created another fault domain for my application stack. The more fault domains that I need to worry about, the more likely I am going to be woken up at 3am with a problem that affects every single application in the cluster.

I don’t want to manage this stuff anymore: The reason we are moving to the cloud is to shift the accountability away from me and to a company that can scale these services larger, better, faster and more efficiently than I could dream of. By creating another network stack for me to manage, I feel like I’m stepping back into the dark ages of on-premises networks.
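To make the flow-log problem concrete, here’s a sketch that parses a record in the default (version 2) VPC Flow Logs format and checks whether either endpoint is a pod address. The record itself is hypothetical: the account, ENI ID, ports and timestamps are made up, and the pod range is the 10.1.0.0/16 overlay from the Flannel example. What matters is its shape: an encapsulated pod-to-pod request shows up only as traffic between two nodes.

```python
import ipaddress

# Field order of the default (version 2) VPC Flow Logs record format.
FIELDS = [
    "version", "account-id", "interface-id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log-status",
]

POD_CIDR = ipaddress.ip_network("10.1.0.0/16")  # overlay range from the Flannel example


def parse_record(line: str) -> dict[str, str]:
    """Split one space-separated flow log record into named fields."""
    values = line.split()
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(values)}")
    return dict(zip(FIELDS, values))


def mentions_pod(record: dict[str, str]) -> bool:
    """True if either endpoint of the flow is a pod address."""
    return any(ipaddress.ip_address(record[f]) in POD_CIDR for f in ("srcaddr", "dstaddr"))


# Hypothetical record for the Frontend 1 -> Backend Service 2 request, seen on Node1's ENI.
line = ("2 123456789012 eni-0123456789abcdef0 192.168.0.100 192.168.0.200 "
        "41522 8285 17 4 560 1554854400 1554854460 ACCEPT OK")
record = parse_record(line)
print(record["srcaddr"], record["dstaddr"], mentions_pod(record))
# 192.168.0.100 192.168.0.200 False
```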

But don’t worry! AWS to the rescue!

Rather than leverage one of the existing providers, AWS are building their own…and the results are pretty cool.

The AWS VPC CNI

AWS agrees that overlay networking is not the ideal way to manage the networks for AWS EKS, and has instead built its own CNI plugin and open-sourced it to boot. While it is still very much in alpha, we can see that the implementation is trying to solve many of the frustrations we see with overlay networks.

It looks to achieve the following goals:

- Pod network connectivity performance must be similar to AWS VPC Network. That is, low latency, minimal jitter, high throughput and high availability

- Users must be able to express and enforce granular network policies and isolation comparable to those achievable with native EC2 networking and security groups

- Network operation must be simple and secure. Users must be able to apply existing AWS VPC networking and security best practices for building Kubernetes clusters over AWS VPC

- VPC Flow Logs must work

- VPC Routing Policies must work

- Pod network setup in seconds: pods must be able to attach to the network in seconds

- Clusters should be able to scale to ~2000 nodes.

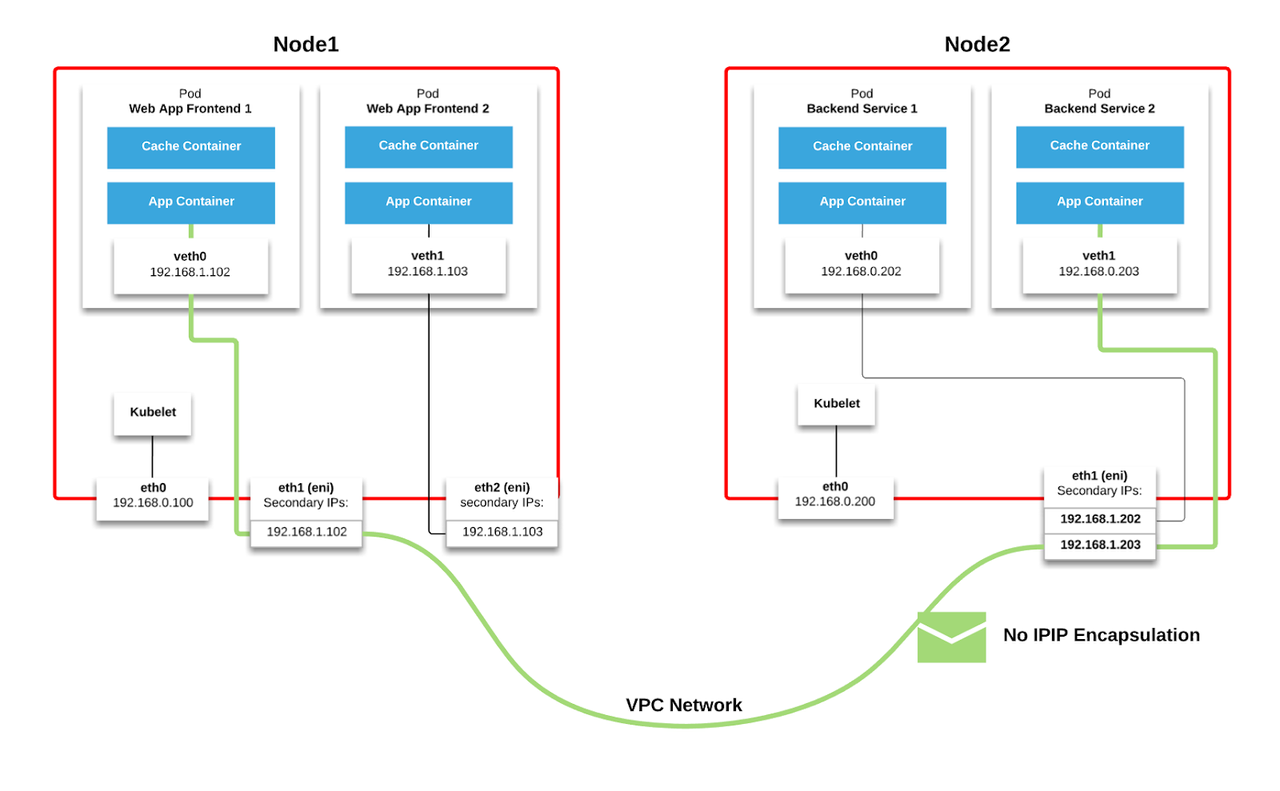

To achieve this outcome, AWS takes advantage of its ability to attach multiple Elastic Network Interfaces (ENIs, or vNICs) to a virtual machine and to associate multiple secondary IP addresses with each ENI. Each pod is then assigned a free secondary IP address and communicates inbound and outbound over that interface.
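The allocation idea can be sketched as a per-node pool of secondary addresses. This is not the CNI plugin’s actual IP address management code, just a hypothetical model (`Eni`, `NodeIpPool`) that assumes each ENI’s secondary IPs are already assigned from the VPC subnet; allocating a pod means handing it the first unused one.

```python
from dataclasses import dataclass, field


@dataclass
class Eni:
    eni_id: str
    secondary_ips: list[str]  # secondary addresses already assigned from the VPC subnet


@dataclass
class NodeIpPool:
    """Hypothetical per-node pool of ENI secondary IPs handed out to pods."""
    enis: list[Eni]
    assigned: dict[str, tuple[str, str]] = field(default_factory=dict)  # pod -> (eni_id, ip)

    def allocate(self, pod: str) -> str:
        """Give the pod the first unused secondary IP, walking ENIs in order."""
        if pod in self.assigned:
            return self.assigned[pod][1]  # already has an address
        in_use = {ip for _, ip in self.assigned.values()}
        for eni in self.enis:
            for ip in eni.secondary_ips:
                if ip not in in_use:
                    self.assigned[pod] = (eni.eni_id, ip)
                    return ip  # a real VPC address: no overlay, no encapsulation
        raise RuntimeError("no free secondary IPs on this node")

    def release(self, pod: str) -> None:
        """Return the pod's address to the pool when the pod is deleted."""
        self.assigned.pop(pod, None)


pool = NodeIpPool([
    Eni("eni-a", ["192.168.0.101", "192.168.0.102"]),
    Eni("eni-b", ["192.168.0.111", "192.168.0.112"]),
])
print(pool.allocate("frontend-1"))  # 192.168.0.101
print(pool.allocate("backend-2"))   # 192.168.0.102
print(pool.allocate("worker-3"))    # 192.168.0.111 (spills onto the second ENI)
```

Because every pod address is an ordinary VPC address, flow logs and routing see pods directly; the trade-off is that the pool is bounded by how many ENIs and IPs the instance type allows.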

If we use the same diagram as before, we can see that to route from A->B, we no longer need to worry about Kubernetes encapsulating the packet as all communications can take place using IP addresses in the VPC.

Network architects can breathe a sigh of relief: this significantly simplifies the stack!

Security Architects and Microsegmentation

Now onto what this means for our security architects!

The AWS VPC CNI means that we might soon see native microsegmentation using AWS Security Groups!

Microsegmentation is the ability to apply network security policies to individual virtual machines. It is a key control for reducing the likelihood of east/west intrusions in the data centre: rather than relying on firewalls at the edges of the DMZ, application and database zones, as in the network designs of old, it applies network policies in the context of each application. SDxCentral have a good article explaining microsegmentation further.

If you have been running in AWS, Google or NSX for a while, you would be pretty familiar with the concept of microsegmentation, even if you didn’t realise it!

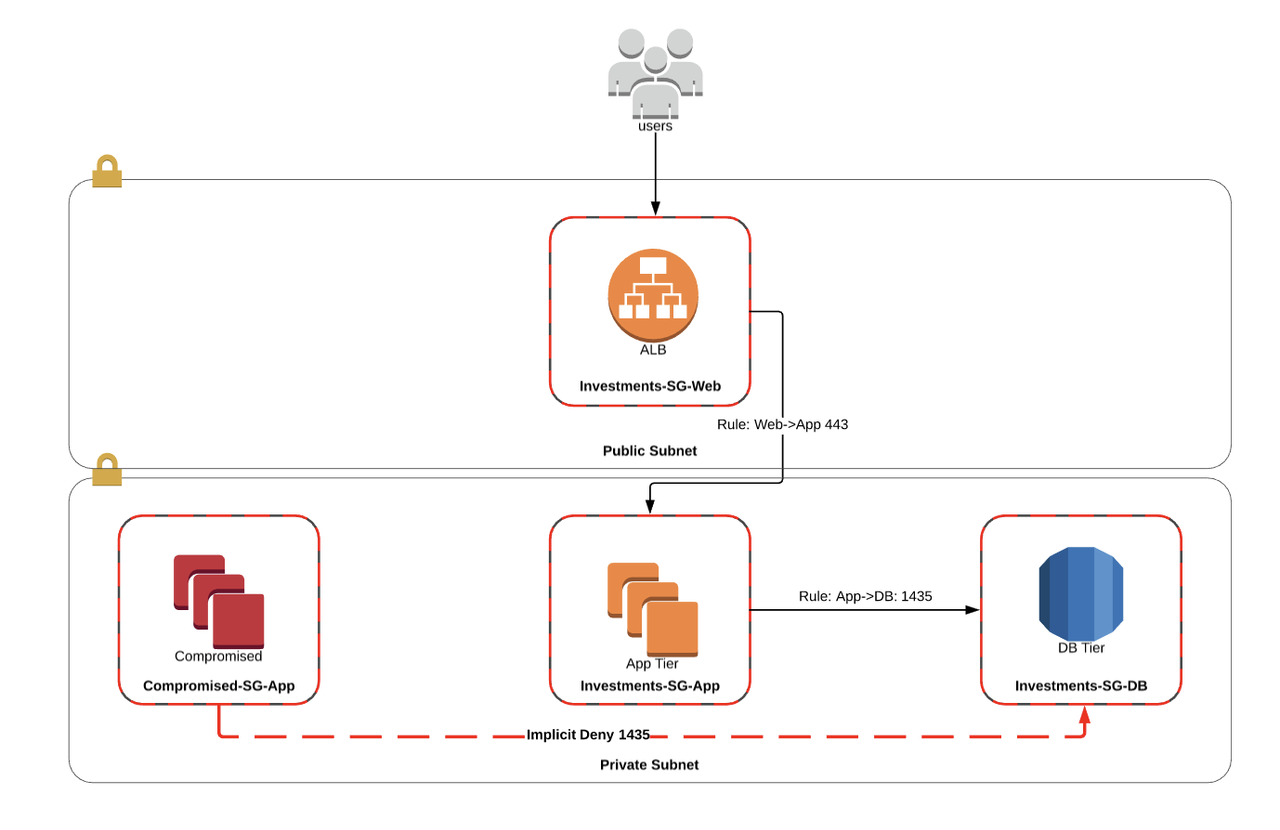

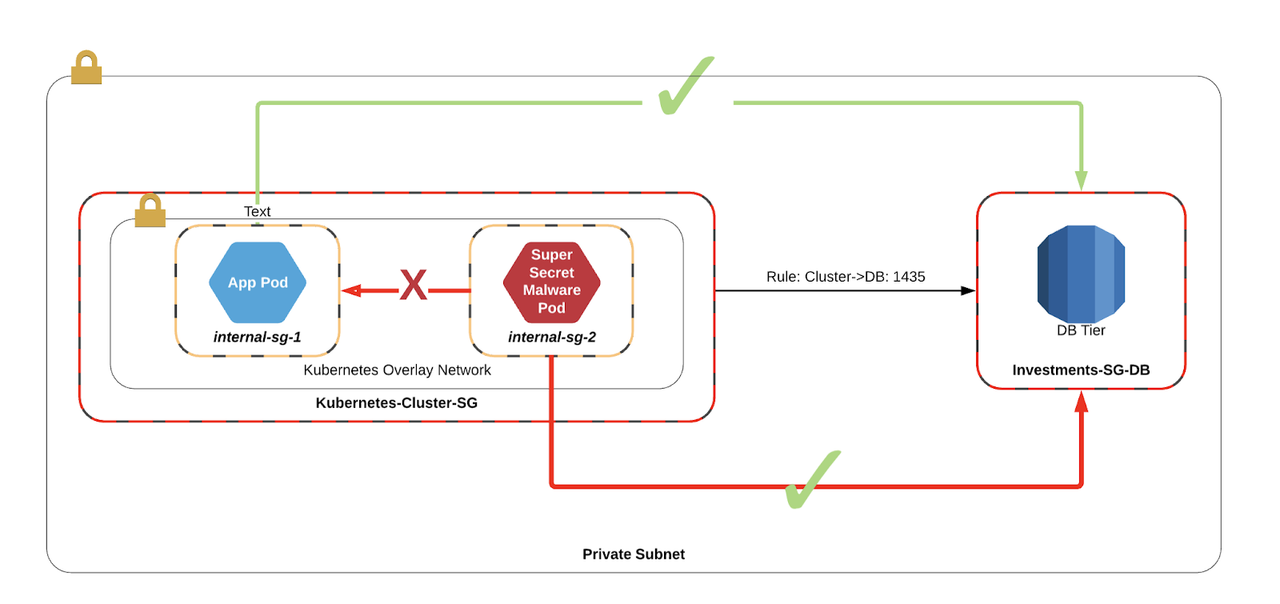

Below is a fairly typical example of microsegmentation in AWS.

In this example, we can see an extremely simple investments application that is split into three layers: a load balancing tier fronted by AWS ALB, an app tier running on EC2 and a database tier on RDS. Each of these layers has its own security group that explicitly allows traffic flows between each tier and implicitly denies all other traffic.

If another workload is compromised, this control prevents it from moving east/west to other services in the same subnet.
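Here’s a minimal model of how those tiered security groups evaluate traffic: rules only ever allow, a rule’s source can be a CIDR or another security group, and anything that matches no rule is dropped. The group names, port numbers and the `allowed` helper are hypothetical, and the sketch only models inbound rules (real security groups are stateful, so return traffic is permitted automatically).

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """An inbound allow rule: a TCP port plus a source CIDR or source security group."""
    port: int
    source: str  # a CIDR such as "0.0.0.0/0", or a security group name such as "app-sg"


# Hypothetical rules for the three tiers; the port numbers are illustrative.
SECURITY_GROUPS = {
    "alb-sg": [Rule(443, "0.0.0.0/0")],  # internet -> load balancer
    "app-sg": [Rule(8080, "alb-sg")],    # load balancer -> app tier
    "db-sg": [Rule(5432, "app-sg")],     # app tier -> database
}


def allowed(src_ip: str, src_sgs: set[str], dst_sgs: set[str], port: int) -> bool:
    """True if any inbound rule on the destination matches; everything else is implicitly denied."""
    for sg in dst_sgs:
        for rule in SECURITY_GROUPS.get(sg, []):
            if rule.port != port:
                continue
            if "/" in rule.source:  # CIDR-based rule
                if ipaddress.ip_address(src_ip) in ipaddress.ip_network(rule.source):
                    return True
            elif rule.source in src_sgs:  # rule that references another security group
                return True
    return False


print(allowed("203.0.113.7", set(), {"alb-sg"}, 443))       # True: internet -> ALB
print(allowed("10.0.1.10", {"alb-sg"}, {"app-sg"}, 8080))   # True: ALB -> app tier
print(allowed("10.0.2.20", {"app-sg"}, {"db-sg"}, 5432))    # True: app tier -> RDS
print(allowed("10.0.2.99", {"other-sg"}, {"db-sg"}, 5432))  # False: compromised neighbour
```

Referencing security groups rather than CIDRs is what makes this work within a single subnet: the compromised neighbour shares the address range but not the group.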

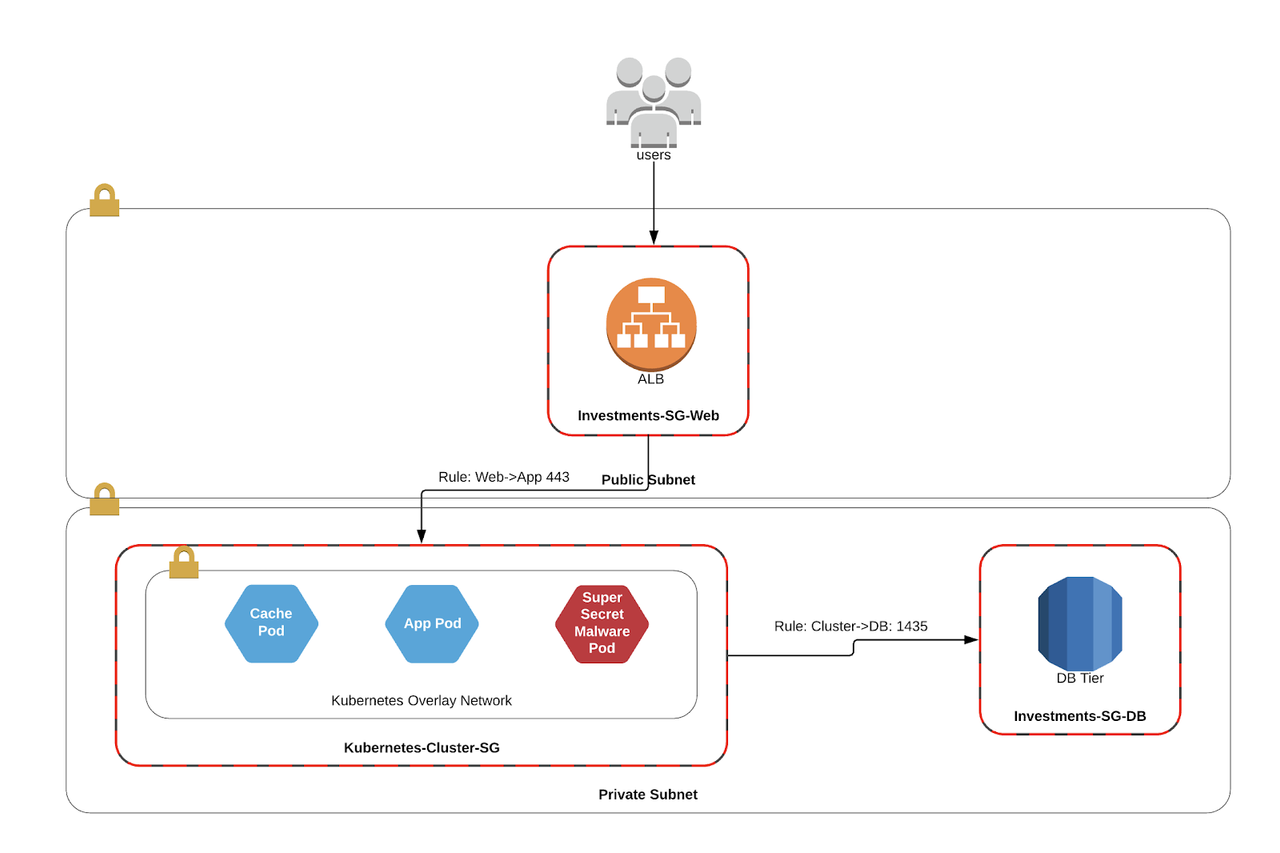

But what happens when you introduce Kubernetes into the mix?

In the old world of Calico, Weave and Flannel, the VPC had no context for the pods and could only apply security groups to the hosts running the containers. In effect, this meant that any pod could route to any service that had been exposed to the cluster. If one of those pods were compromised, it could potentially move on to any database the cluster has access to.
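The difference between node-level and pod-level enforcement fits in a few lines. In this hypothetical sketch, two pods share a node; with an overlay, the VPC only sees the node’s security group, so a database that must accept the backend pod’s traffic has to trust the whole node. The `per_pod=True` case models the pod-level security groups the AWS VPC CNI is aiming for; all names are made up for illustration.

```python
# Hypothetical cluster: two pods share one worker node.
POD_TO_NODE = {"frontend-1": "node1", "backend-2": "node1"}
NODE_SGS = {"node1": {"k8s-node-sg"}}
POD_SGS = {"frontend-1": {"frontend-sg"}, "backend-2": {"backend-sg"}}


def effective_sgs(pod: str, per_pod: bool) -> set[str]:
    """Security groups the VPC evaluates for traffic leaving this pod."""
    if per_pod:
        return POD_SGS[pod]            # the VPC sees the pod's own IP and groups
    return NODE_SGS[POD_TO_NODE[pod]]  # overlay: the VPC only ever sees the node


def can_reach_db(pod: str, per_pod: bool, db_trusts: set[str]) -> bool:
    """The database admits traffic only from the security groups it trusts."""
    return bool(effective_sgs(pod, per_pod) & db_trusts)


# Overlay: for backend-2 to work, the DB must trust the node group, so frontend-1 gets in too.
print(can_reach_db("frontend-1", per_pod=False, db_trusts={"k8s-node-sg"}))  # True
# Per-pod groups: the DB can trust the backend's group alone.
print(can_reach_db("frontend-1", per_pod=True, db_trusts={"backend-sg"}))    # False
print(can_reach_db("backend-2", per_pod=True, db_trusts={"backend-sg"}))     # True
```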

But I thought Kubernetes had nano segmentation?

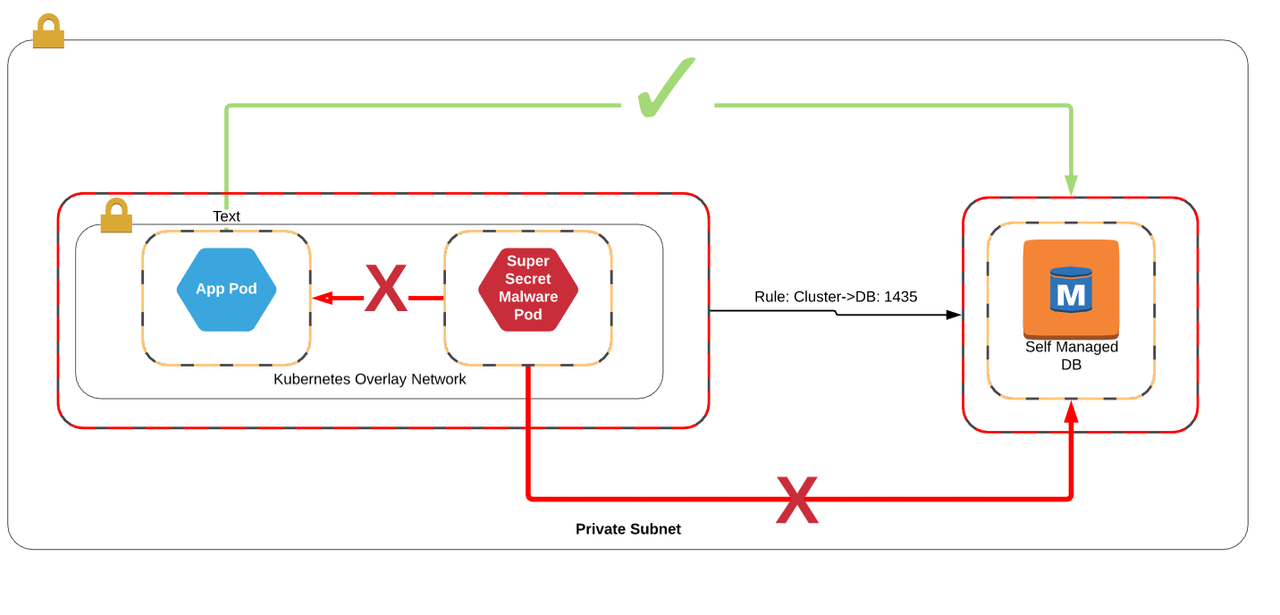

Kind of… there are a number of tools available to tackle the problem of network segmentation within the cluster. They are typically implemented using a mix of OS packages, sidecar containers or DaemonSets, but these add yet another layer of complexity to your deployment stack!

In most cases, these segmentation tools have no context for the world outside the cluster: they can block east/west attacks within the cluster, but aren’t able to do a lot to stop outbound connections to supporting services like databases. In some cases, they may offer a black/whitelisting capability for the pods, but maintaining these rules in two locations is tedious and ineffective.

But I heard of this tool that works inside and outside the cluster?

Some tools allow you to apply policies inside and outside of the cluster, but they work by installing agents on those virtual machines outside the cluster. This means that you can’t apply your segmentation policies to services like RDS which don’t give you access to the underlying host, and instead must run your own… which defeats the whole point of being in the cloud in the first place.

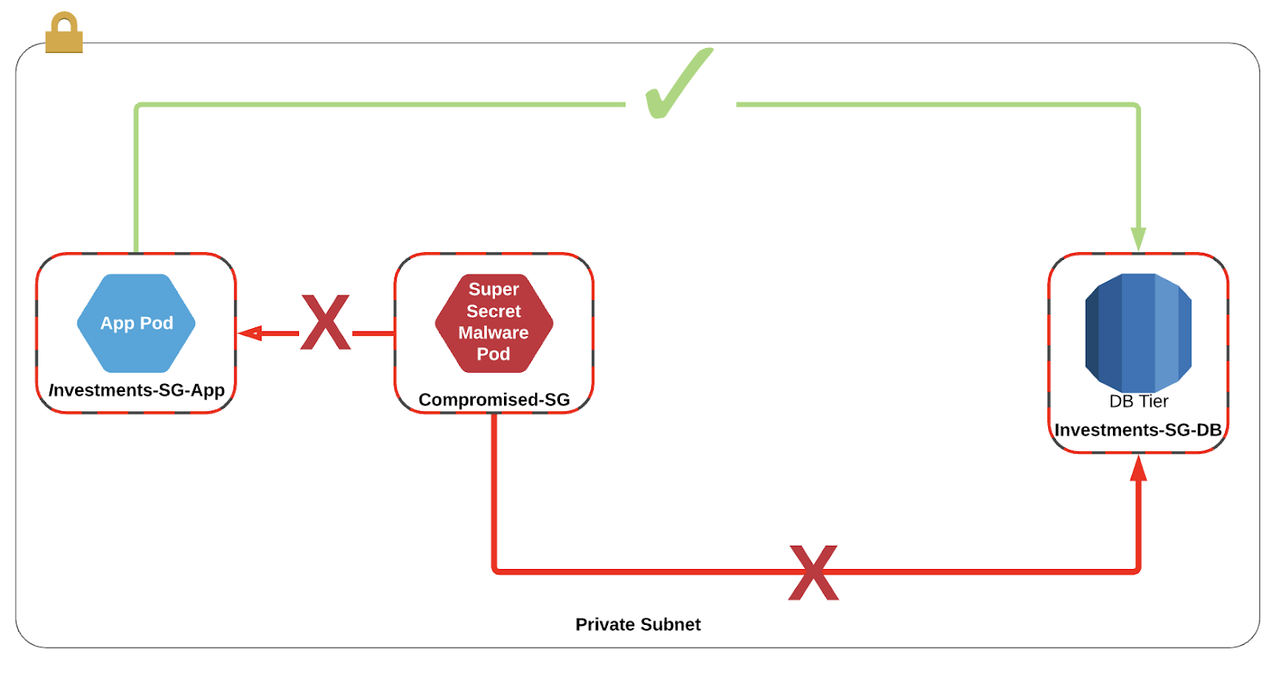

So what about EKS?

One of the goals of AWS’s CNI is to be able to apply Security Groups to pods the same way as every other VPC resource. On release, we should be able to apply Security Groups for microsegmentation inside and outside of the cluster!

When wouldn’t I use the AWS developed CNI?

Virtual machines in AWS have a maximum number of Elastic Network Interfaces that can be attached at any one time, and a maximum number of IP addresses per ENI. Both limits vary by instance type, and together they cap the number of pods a node can run:

ENIs x secondary IPs per ENI = max pods per node
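As a quick worked example: the formula counts only secondary addresses, because each ENI’s primary address belongs to the ENI itself. The max-pods figures EKS publishes take the form ENIs × (IPv4 addresses per ENI − 1) + 2, where the extra 2 covers host-network pods (aws-node and kube-proxy) that use the node’s own address rather than a secondary IP. The per-instance limits below are the EC2 figures as we understand them; check the current EC2 documentation before relying on them.

```python
def pod_ips_per_node(enis: int, ips_per_eni: int) -> int:
    """ENIs x secondary IPs per ENI: each ENI's primary address is not handed to pods."""
    return enis * (ips_per_eni - 1)


def eks_max_pods(enis: int, ips_per_eni: int) -> int:
    """EKS-style max pods: secondary IPs plus 2 host-network pods (aws-node, kube-proxy)."""
    return pod_ips_per_node(enis, ips_per_eni) + 2


# (max ENIs, IPv4 addresses per ENI) per instance type; verify against current EC2 docs.
INSTANCE_LIMITS = {"t3.small": (3, 4), "t3.medium": (3, 6), "m5.large": (3, 10)}

for name, (enis, ips) in INSTANCE_LIMITS.items():
    print(name, pod_ips_per_node(enis, ips), eks_max_pods(enis, ips))
# t3.small 9 11
# t3.medium 15 17
# m5.large 27 29
```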

If the application you are building requires an extremely high density of pods per node, you would be better off using one of the other CNI solutions like Calico or Weave, or perhaps something like HashiCorp’s Nomad, which has been proven to scale up to one million containers across 5,000 hosts.

Another issue with the AWS CNI is its inefficient use of IP addresses. To attach an IP address to a pod as quickly as possible, the CNI pre-allocates a pool of IPs on each node. If the cluster is deployed in a particularly small VPC, you may run out of addresses even though many of them aren’t being used by real workloads.
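Here’s a back-of-the-envelope model of how pre-allocated IPs eat into a small subnet. It assumes each node consumes one primary address, one address per running pod and `warm_ips_per_node` idle pre-allocated addresses (a hypothetical knob; the real plugin’s pre-allocation is configurable and not modelled here), and it ignores the primary addresses of any extra ENIs. AWS reserves five addresses in every subnet, which the sketch subtracts up front.

```python
AWS_RESERVED_PER_SUBNET = 5  # the first four addresses and the last address of every subnet


def nodes_that_fit(prefix_len: int, pods_per_node: int, warm_ips_per_node: int) -> int:
    """How many nodes an IPv4 subnet can hold when each node also parks idle IPs.

    Simplified model: 1 primary address + 1 per running pod + warm_ips_per_node
    pre-allocated addresses per node; extra ENI primary addresses are ignored.
    """
    usable = 2 ** (32 - prefix_len) - AWS_RESERVED_PER_SUBNET
    per_node = 1 + pods_per_node + warm_ips_per_node
    return usable // per_node


print(nodes_that_fit(24, pods_per_node=10, warm_ips_per_node=0))   # 22
print(nodes_that_fit(24, pods_per_node=10, warm_ips_per_node=10))  # 11
```

In this toy /24, holding ten spare addresses per node halves the number of nodes the subnet can carry, even though the workloads themselves haven’t changed.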

Final Thoughts

For EKS to be a success in AWS, it needs to be properly integrated with the rest of the application ecosystem. By simplifying the network stack, AWS has lowered the daunting barrier to entry for Kubernetes, making it more accessible for new audiences, more secure for advanced audiences and better-performing for those who need it.

I, for one, am really excited to see how this extends out into EKS Fargate and what happens when containers become a true compute primitive like EC2!
