Best Practices for MLOps and the Machine Learning Lifecycle

A successful machine learning (ML) project is about a lot more than just model development and deployment.

Machine learning is about the full lifecycle of data. It involves a complex set of steps and a variety of skills, all of which are required to achieve actionable outcomes and deliver business value.

The complexity of the ML lifecycle is part of the reason why good practices and fully integrated tools are still in their infancy. Other reasons include a lack of skills, poor model scalability, and a lack of automation: data scientists often come from a range of backgrounds and do not always follow coding and DevOps best practices. Furthermore, data scientists and engineers usually work in silos, which results in poor collaboration across teams.

That’s where MLOps comes into play. It combines ML, DevOps and data engineering skills to manage the full ML lifecycle, applying best practices to deliver business value in line with a company’s data strategy.

MLOps: Productionising AI the Right Way

MLOps is the process of operationalising data science and machine learning solutions using code and best practices that promote efficiency, speed, and robustness.

In other words, MLOps is about productionising better ML models faster, supported by a data-driven culture that applies DevOps practices to ML systems. It involves significant collaboration between data scientists and data and cloud engineers, and it relies on executive support and iterative feedback from stakeholders. Adopting these practices is indispensable for putting reliable machine learning projects into production.

BUT WHAT ARE THE BUSINESS INCENTIVES?

According to a report by McKinsey, companies that apply core practices for using AI see both larger revenue increases and greater cost decreases than other AI-adopting organisations. These high achievers use AI to drive value across the organisation, mitigate the risks associated with the technology, and train their workforce to deliver AI solutions at greater scale. This emphasises the value that machine learning can generate when it is implemented and applied well.

In the rapidly changing world of cloud infrastructure, data platforms and data science algorithms, tools and practices that enable reproducible, traceable and verifiable machine learning are gaining a lot of attention (e.g. MLflow, Amazon SageMaker Studio, Kubeflow). Such tools aim to offer end-to-end solutions for developing, tuning and deploying ML models and, more importantly, for tracking and versioning them. This gives visibility into the resources used, which makes results easier to reproduce, and enables engineers to set up pipelines that log and monitor a model’s performance and re-train it when appropriate. Essentially, these tools allow the user to put core MLOps methodologies into practice.
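To make the idea of tracking concrete, here is a minimal, standard-library-only sketch of what an experiment tracker records for each training run: the parameters that went in and the metrics that came out. It is not the API of MLflow, SageMaker Studio or Kubeflow; the `Run` and `ExperimentLog` classes, the experiment name and the metric values are hypothetical.

```python
import json
import time
import uuid
from dataclasses import dataclass, field, asdict


@dataclass
class Run:
    """One training run: the inputs (params) and outputs (metrics) needed to compare and reproduce it."""
    params: dict
    metrics: dict = field(default_factory=dict)
    run_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    started_at: float = field(default_factory=time.time)


class ExperimentLog:
    """Hypothetical in-memory tracker; real tools persist runs to a backend store."""

    def __init__(self, name: str):
        self.name = name
        self.runs: list[Run] = []

    def log_run(self, params: dict, metrics: dict) -> Run:
        # Copy the dicts so later mutation by the caller cannot rewrite history.
        run = Run(params=dict(params), metrics=dict(metrics))
        self.runs.append(run)
        return run

    def best_run(self, metric: str, higher_is_better: bool = True) -> Run:
        # Pick the run with the best value for the given metric.
        choose = max if higher_is_better else min
        return choose(self.runs, key=lambda r: r.metrics[metric])

    def to_json(self) -> str:
        # Serialise every run so the experiment can be audited later.
        return json.dumps({"experiment": self.name, "runs": [asdict(r) for r in self.runs]})


log = ExperimentLog("churn-model")  # hypothetical experiment name
log.log_run({"max_depth": 3}, {"val_accuracy": 0.81})
log.log_run({"max_depth": 5}, {"val_accuracy": 0.84})
best = log.best_run("val_accuracy")
print(best.params)  # {'max_depth': 5}
```

Dedicated tools add what this sketch leaves out, such as persistent storage, artefact logging and a UI for comparing runs.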

But more about ML tools in Part 2 of this blog, coming soon!

First, let’s take a look at the ML lifecycle.

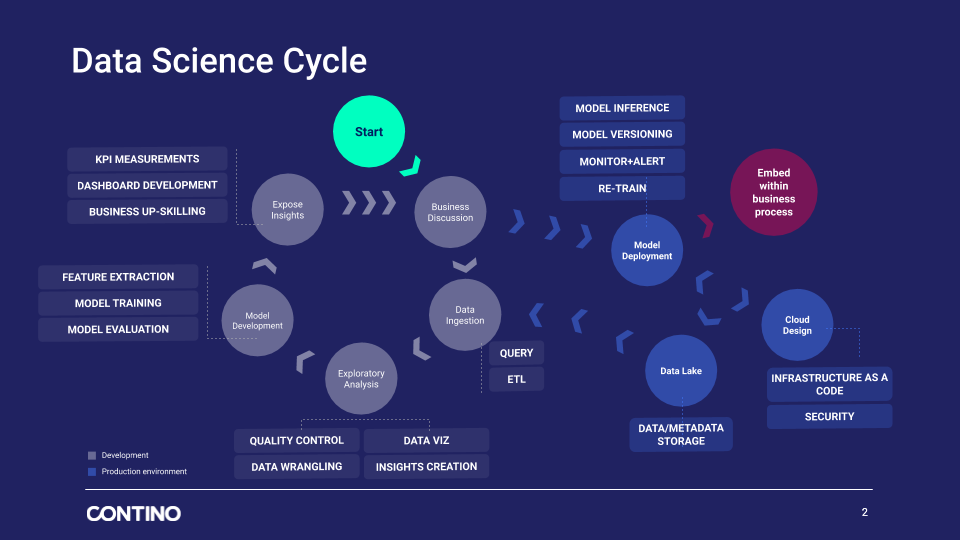

A Closer Look at the Machine Learning Lifecycle

A thorough ML lifecycle design accounts for every step, from cloud architecture through model development and deployment to stakeholder communication and upskilling. An example is presented in the image above, and its high-level elements are detailed below.

CLOUD ARCHITECTURE DESIGN

ML projects are no longer purely about data science. Data and cloud engineering skills are becoming increasingly important for managing the full ML lifecycle.

We live in an age of digital transformation, in which more and more enterprise organisations are migrating to the cloud and hosting their data in dedicated data lakes. This is an inevitable step in building optimised, efficient and secure data platforms and ML projects.

Most cloud and data platforms now provide their own data science tools for development (e.g., Amazon SageMaker, Databricks, Google Cloud AI Platform Pipelines). To handle all the steps in an ML pipeline (data storage, data ingestion, model development, deployment, visualisation, monitoring, etc.) in a secure, high-performing and resilient manner, cloud engineering skills and MLOps practices are essential.

It’s important to remember that ML code is only a tiny fraction of the full lifecycle, in which a series of tasks has to be orchestrated in a well-architected manner, e.g., setting up the serving infrastructure, data collection, data auditing and model monitoring.
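Orchestration usually means expressing the pipeline as a directed acyclic graph (DAG) of tasks and running them in dependency order. The sketch below uses Python’s standard-library `graphlib` (Python 3.9+) to derive a valid execution order; the task names are hypothetical, and the tasks are only recorded rather than actually executed.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "collect_data": set(),
    "audit_data": {"collect_data"},
    "train_model": {"audit_data"},
    "provision_serving": set(),
    "deploy_model": {"train_model", "provision_serving"},
    "monitor_model": {"deploy_model"},
}


def run_pipeline(dag: dict[str, set[str]]) -> list[str]:
    """Visit tasks in an order that respects every dependency and return that order."""
    executed = []
    for task in TopologicalSorter(dag).static_order():
        # A real orchestrator would invoke the task here; this sketch only records it.
        executed.append(task)
    return executed


order = run_pipeline(PIPELINE)
print(order)
```

Orchestrators such as Kubeflow Pipelines build on the same idea and add scheduling, retries, caching and distributed execution.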

Infrastructure as code, combined with peer-reviewed and secure pipelines, is the basis of reproducible ML environments. Model training and testing should be carried out with a continuous integration and continuous delivery (CI/CD) mindset. Security should be built into every part of the pipeline, protecting both the data (e.g., PII) and the code.
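One way to apply a CI/CD mindset to model training is a quality gate: a check that the CI pipeline runs after training and that fails the build if the candidate model underperforms. The sketch below assumes the training job writes its metrics to a JSON file; the file layout, the `accuracy` metric name and the 0.80 threshold are all hypothetical.

```python
import json
import sys
from pathlib import Path
from typing import Optional

MIN_ACCURACY = 0.80  # hypothetical minimum score for promotion


def check_gate(metrics: dict, baseline: Optional[dict] = None,
               min_accuracy: float = MIN_ACCURACY) -> list:
    """Return failure reasons; an empty list means the candidate may be promoted."""
    accuracy = metrics.get("accuracy")
    if accuracy is None:
        return ["metrics file has no 'accuracy' entry"]
    failures = []
    if accuracy < min_accuracy:
        failures.append(f"accuracy {accuracy:.3f} is below the minimum {min_accuracy:.3f}")
    # Also block regressions against the model currently in production, if known.
    prod = baseline.get("accuracy") if baseline else None
    if prod is not None and accuracy < prod:
        failures.append(f"accuracy {accuracy:.3f} is worse than production {prod:.3f}")
    return failures


def main(metrics_path: str) -> int:
    metrics = json.loads(Path(metrics_path).read_text())
    failures = check_gate(metrics)
    for reason in failures:
        print(f"GATE FAILED: {reason}", file=sys.stderr)
    # A non-zero exit code fails the CI job, which stops the deployment stage.
    return 1 if failures else 0
```

A CI step would call something like `sys.exit(main("metrics.json"))` (the path being hypothetical), so that a weak model never reaches the deployment stage.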

While the data scientist is responsible for developing the ML model and delivering analytic insights, data/cloud engineers are better equipped to set up the ML pipeline and ensure continuous deployment of the projects.

DATA INGESTION AND CLEANING

This step combines data engineering and data science knowledge, with the goal of ensuring the quality, security and integrity of the data. Data cleaning and transformation can involve various steps depending on the project. Some of the more obvious ones are dealing with missing values, managing duplicates, selecting columns, filtering, cleaning text and aggregating. This step can have a large impact on the run time of the pipeline, as it involves a lot of data processing.
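The cleaning steps listed above can be sketched in plain Python as follows. The records, column names and policies (de-duplicating on `id`, dropping rows with a missing or negative `amount`, filling missing comments with an empty string) are hypothetical choices for illustration; the right policy depends on the project, and at scale the same logic would typically run in a data-processing framework rather than a Python loop.

```python
import re
from collections import defaultdict

# Hypothetical raw records, as they might arrive from an ingestion job.
RAW = [
    {"id": 1, "region": " North ", "comment": "Great  service!!", "amount": 120.0, "debug": "x"},
    {"id": 1, "region": " North ", "comment": "Great  service!!", "amount": 120.0, "debug": "x"},
    {"id": 2, "region": "south", "comment": None, "amount": 80.0, "debug": "y"},
    {"id": 3, "region": "SOUTH", "comment": "ok", "amount": None, "debug": "z"},
    {"id": 4, "region": "north", "comment": "Slow delivery", "amount": -5.0, "debug": "w"},
]

KEEP_COLUMNS = ("id", "region", "comment", "amount")


def clean_text(value):
    """Lower-case, strip punctuation and collapse whitespace; map missing text to ''."""
    if value is None:
        return ""
    value = re.sub(r"[^\w\s]", "", value.lower())
    return " ".join(value.split())


def clean(records):
    seen_ids = set()
    cleaned = []
    for rec in records:
        row = {col: rec.get(col) for col in KEEP_COLUMNS}  # column selection
        if row["id"] in seen_ids:                           # duplicate management
            continue
        seen_ids.add(row["id"])
        if row["amount"] is None:                           # missing values: drop the row
            continue
        if row["amount"] < 0:                               # filtering: invalid amounts
            continue
        row["region"] = row["region"].strip().lower()
        row["comment"] = clean_text(row["comment"])         # text cleaning
        cleaned.append(row)
    return cleaned


def total_by_region(rows):
    """Aggregation: sum of amounts per region."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


rows = clean(RAW)
print(total_by_region(rows))  # {'north': 120.0, 'south': 80.0}
```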

DATA EXPLORATION AND MODEL DEVELOPMENT

This phase involves steps such as data exploration and insight creation, feature engineering, model training and testing, and model optimisation. Data science projects benefit greatly from the exploration phase: it leads to a better understanding of the underlying trends in the data, helps to pinpoint biases, and supports the creation of more accurate models by focusing on relevant features.
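A minimal sketch of the training, testing and optimisation loop: split the data into training, validation and test sets, tune a hyperparameter on the validation set, and touch the test set only once at the end. A k-nearest-neighbours classifier on synthetic 2-D data is used purely as a stand-in model; the data, the split sizes and the candidate values of `k` are all hypothetical.

```python
import random


def make_data(n, seed=0):
    """Hypothetical toy data: two noisy 2-D clusters labelled 0 and 1."""
    rng = random.Random(seed)
    data = []
    for i in range(n):
        label = i % 2
        centre = 0.0 if label == 0 else 2.0
        point = (rng.gauss(centre, 1.0), rng.gauss(centre, 1.0))
        data.append((point, label))
    rng.shuffle(data)
    return data


def knn_predict(train, x, k):
    # Take the k training points closest to x (squared Euclidean distance) and vote.
    nearest = sorted(train, key=lambda item: (item[0][0] - x[0]) ** 2 + (item[0][1] - x[1]) ** 2)[:k]
    votes = sum(label for _, label in nearest)
    return 1 if votes * 2 > k else 0  # odd k means the vote cannot tie


def accuracy(train, rows, k):
    correct = sum(knn_predict(train, x, k) == y for x, y in rows)
    return correct / len(rows)


data = make_data(300)
train, val, test = data[:180], data[180:240], data[240:]  # 60/20/20 split

# Model optimisation: choose k using the validation set only.
candidates = [1, 3, 5, 7, 9]
best_k = max(candidates, key=lambda k: accuracy(train, val, k))

# The test set is used once, after model selection, to estimate generalisation.
test_acc = accuracy(train, test, best_k)
print(f"best k={best_k}, test accuracy={test_acc:.2f}")
```

Keeping the test set out of the tuning loop is the design choice that matters here: tuning on it would make the final score optimistic.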

EXPOSE INSIGHTS TO STAKEHOLDERS

According to a survey by the International Data Corporation, most AI projects don’t go into production, either because expectations are not communicated well with the business or because the skills needed to maintain these models in production are lacking. This highlights the importance of better, iterative communication between data teams and the business to help develop innovative AI strategies and solutions, and the need to upskill employees so that organisations stay competitive.

Combining data science expertise with stakeholders’ business understanding is particularly relevant for achieving tailored, actionable outcomes and for creating a valuable experience for both the data scientist and the business. Data analysis and insight creation are the first step on any business’s path to data-driven decision making, and model explainability is crucial for gaining the trust of C-suite executives and stakeholders.
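Explainability can start with simple, model-agnostic techniques. One of them is permutation importance: shuffle one feature’s values and measure how much the model’s score drops. The sketch below applies it to a hypothetical rule-based churn model on toy data; a feature the model ignores should score zero.

```python
import random


def model(row):
    """Hypothetical fixed model: predicts churn (1) when usage is low; ignores 'tenure'."""
    return 1 if row["monthly_usage"] < 10 else 0


def accuracy(rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(rows, labels, feature, seed=0):
    """Drop in accuracy when one feature's values are shuffled across rows."""
    rng = random.Random(seed)
    shuffled_values = [r[feature] for r in rows]
    rng.shuffle(shuffled_values)
    permuted = [{**r, feature: v} for r, v in zip(rows, shuffled_values)]
    return accuracy(rows, labels) - accuracy(permuted, labels)


# Hypothetical toy data: (monthly_usage, tenure) pairs and churn labels.
rows = [{"monthly_usage": u, "tenure": t} for u, t in [(2, 5), (4, 1), (15, 3), (20, 8), (6, 2), (25, 7)]]
labels = [1, 1, 0, 0, 1, 0]

for feature in ("monthly_usage", "tenure"):
    print(feature, round(permutation_importance(rows, labels, feature), 3))
```

In practice the shuffle is repeated several times and averaged; scikit-learn provides an implementation as `sklearn.inspection.permutation_importance`.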

Communicating with stakeholders, setting clear expectations and upskilling employees are all key to delivering business value through productionised ML solutions, while also helping organisations stay competitive in an era of digital disruption. The best practices enabled by MLOps are key to achieving these goals.

VISUALISATION

This step involves the transformation of insights and ML predictions into a consumable format for end users.

Even today, organisations often carry out their analysis in spreadsheets, and data often has to be retrieved from several systems with long wait times. As a result, retrieving the information needed to answer even simple questions or measure basic KPIs can be very cumbersome.

Data lakes and the adoption of interactive dashboards (Power BI, Amazon QuickSight, Plotly Dash) in place of static reporting make data access more direct and efficient, and therefore represent a necessary step in modern digital transformation. Dashboards can be tailored to customer needs, supporting marketing and sales operations with ML predictions.
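Turning predictions into a consumable format often means aggregating row-level scores into the KPIs a dashboard displays. The sketch below summarises hypothetical churn predictions per region and writes them as CSV. The field names, the 0.5 threshold and the choice of CSV as the hand-off format are assumptions; in practice the output might instead be written to a table in the data lake that the dashboard queries.

```python
import csv
import io
from collections import defaultdict

# Hypothetical model output: one row per customer with a churn probability.
predictions = [
    {"customer_id": "C1", "region": "north", "churn_probability": 0.82},
    {"customer_id": "C2", "region": "north", "churn_probability": 0.10},
    {"customer_id": "C3", "region": "south", "churn_probability": 0.65},
    {"customer_id": "C4", "region": "south", "churn_probability": 0.70},
]

THRESHOLD = 0.5  # hypothetical cut-off for flagging an at-risk customer


def regional_summary(rows, threshold=THRESHOLD):
    """Aggregate row-level predictions into the KPIs a dashboard tile would show."""
    stats = defaultdict(lambda: {"customers": 0, "at_risk": 0})
    for row in rows:
        s = stats[row["region"]]
        s["customers"] += 1
        s["at_risk"] += int(row["churn_probability"] >= threshold)
    return [
        {"region": region, **s, "at_risk_share": round(s["at_risk"] / s["customers"], 2)}
        for region, s in sorted(stats.items())
    ]


def to_csv(rows):
    # Serialise the summary with a header row, ready to be picked up by a BI tool.
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


print(to_csv(regional_summary(predictions)))
```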

DEPLOYMENT

The model is now ready to be made available to end users and stakeholders and integrated with business processes for data-driven decision making. However, several steps remain, including model deployment and validation, artefact creation, monitoring, performance engineering and operation.

Machine learning projects create a variety of artefacts, because data, models and code all need to be versioned and model performance metrics must be tracked after deployment. As such, artefact creation and management is a necessary by-product of building reproducible ML pipelines.
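One lightweight way to tie artefacts together is a manifest that records content hashes of the training data and the model alongside the parameters, code version and metrics. The sketch below uses SHA-256 from Python’s `hashlib`; the manifest fields and all example values (including the commit SHA) are hypothetical.

```python
import hashlib
import json


def fingerprint(obj) -> str:
    """Stable SHA-256 of a JSON-serialisable object (keys sorted so dict order doesn't matter)."""
    payload = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def build_manifest(data, params, model_weights, code_version, metrics):
    """Record which data, code and settings produced a model, plus how it scored."""
    return {
        "data_hash": fingerprint(data),
        "params": params,
        "code_version": code_version,  # e.g. a Git commit SHA
        "model_hash": fingerprint(model_weights),
        "metrics": metrics,
    }


manifest = build_manifest(
    data=[[1.0, 2.0], [3.0, 4.0]],                # hypothetical training data
    params={"learning_rate": 0.1, "epochs": 5},
    model_weights={"w": [0.4, -0.2], "b": 0.05},
    code_version="abc1234",                      # hypothetical commit SHA
    metrics={"val_accuracy": 0.84},
)
print(json.dumps(manifest, indent=2))
```

Because identical inputs produce identical hashes, comparing two manifests shows whether a change in results coincided with a change in the data, the code or the parameters.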

After deployment, monitoring model performance and auditing data help the ML lifecycle run smoothly. The aim is to create self-healing environments in which models are re-trained when needed without human intervention.
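Monitoring can include checking whether live input data has drifted away from the training data. The sketch below computes the Population Stability Index (PSI), one common drift metric, for a single numeric feature, and flags the model for re-training above a threshold. The 0.2 threshold is a frequently quoted rule of thumb rather than a standard, and `should_retrain` is a hypothetical hook; in a self-healing setup it would trigger the training pipeline.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index: sum over bins of (a_i - e_i) * ln(a_i / e_i)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Bins are defined on the reference range; values outside it go to the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


PSI_RETRAIN_THRESHOLD = 0.2  # a commonly quoted rule of thumb, not a universal constant


def should_retrain(reference, live):
    return psi(reference, live) > PSI_RETRAIN_THRESHOLD


reference = [i / 100 for i in range(1000)]       # feature values seen at training time
stable = [i / 100 + 0.005 for i in range(1000)]  # live data, almost unchanged
shifted = [i / 100 + 4.0 for i in range(1000)]   # live data, shifted upwards
print(should_retrain(reference, stable), should_retrain(reference, shifted))  # False True
```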

Why Do We Need MLOps?

Many companies have spent years collecting data and now is the time to leverage the full potential of this data using AI as a competitive differentiator.

Machine learning can help deploy solutions that unlock previously untapped sources of revenue, save time and reduce resource costs through more efficient workflows, data-driven decision making and better customer experiences. Automation through MLOps enables faster time to market and reduces operational costs, allowing companies to be more agile and strategic in their decisions.

It is important to note that AI is not without risks. Privacy violations and bias are undesirable outcomes, and organisations should plan ahead to mitigate them. Building ethical and secure AI platforms plays an important role in addressing these risks.
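Mitigating bias starts with measuring it. A simple check is the demographic parity difference: the gap in positive-prediction rates between groups defined by a protected attribute. The sketch below computes it for hypothetical loan-approval predictions; demographic parity is only one of several fairness definitions, and which one fits depends on the use case.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Share of positive predictions (e.g. 'approve') within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups (0 means parity)."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical predictions from a loan-approval model, with a protected attribute per applicant.
preds = [1, 1, 0, 1, 0, 0, 1, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_difference(preds, group)
print(gap)  # 0.5
```

A pipeline could run a check like this alongside accuracy gates and block deployment when the gap exceeds an agreed tolerance.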

In conclusion, organisations that design their projects with MLOps concepts in mind will benefit from more reliable data platforms and secure environments that can recover from failures. All of this makes it easier to focus on extracting the insights and value that ML creates, and positions the business to compete more effectively in the market.