How to Secure Serverless Applications Using the Principle of Least Privilege

Serverless is a really interesting concept. It allows you to build scalable applications while reducing both your costs and your management overhead.

I've worked on serverless applications for various clients during my time at Contino, using different architectures and different services within AWS.

In this blog post, we're going to look at the principle of least privilege, cloud provider best practices, the benefits of achieving least privilege and more.

What Is the Principle of Least Privilege?

The starting point is understanding what the principle of least privilege is. Here are a couple of definitions:

“The principle that users and programs should only have the necessary privileges to complete their tasks.” - NIST

“Least privilege is a principle of granting only the permissions required to complete a task.” - AWS

The key takeaway is that entities, whether those entities are people or applications, should only have the permissions and the access that they require to carry out their function.

It's similar to working in an office: you wouldn't have the username and password for everyone's laptop, only for your own, because you don't need to access other people's laptops to do your work. Since you don't need that access, you shouldn't have it. That's least privilege.

And this is all very well, but what do cloud providers say about how you can use and implement least privilege when working on their platforms?

Cloud Provider Best Practices

After reviewing a lot of literature from AWS, GCP, Azure and well-known security organisations, I've picked out a few examples of what they say about best practice:

- “Implement the principle of least privilege and enforce separation of duties with appropriate authorization for each interaction with your AWS resources.” - AWS Security Pillar Whitepaper

- “Start with a minimum set of permissions and grant additional permissions as necessary” - AWS Security Best Practices in IAM

- “...grant the most limited predefined roles or custom roles that meet your needs” - GCP Using IAM Securely

- “Check that your code always runs with least privileges required to perform its task.” - Azure Functions and Serverless Platform Security

What I found was that saying you should adhere to least privilege is one thing, but guidance on the best way to achieve it can be lacking. I also found that specific guidelines on least privilege tend to focus more on the people using the cloud than on the applications running in it, and that the guidance can be somewhat vague.

The quote from Azure Functions and Serverless Platform Security really sums it up—check that your code always runs with least privileges required to perform its task.

That's all very well, but it doesn't really help you to implement least privilege at all. So that's why I think it's worth doing a bit more of a deep dive into this subject and looking at how you can actually better achieve least privilege.

What Are the Benefits of Achieving Least Privilege?

1. More Difficult to Successfully Attack a System

First of all, a system that implements least privilege well is simply harder to attack and damage. If a resource in the cloud grants broad access, it's much easier to misuse that resource to carry out an attack.

2. Blast Radius Reduced in the Event of a Successful Attack

Secondly, the blast radius is reduced: if an attack does succeed, the amount of damage it can do is limited. A serverless application or system is typically made up of many different resources, and those resources need to communicate with one another. If they have very open permissions, compromising or misusing one of them can give an attacker an entry route to misuse others, e.g. a database.

3. Reduced Risk of Financial or Reputational Damage for an Enterprise

The third point is that achieving least privilege to a good extent can help reduce the risk of financial and reputational damage to an enterprise if customer data confidentiality is broken. When you hear in the news that a company has had a data breach and lost a lot of its customers' data, a couple of things typically happen:

1. Loss of Money

Typically, they'll get a fine, which of course will hurt the company financially.

2. Loss of Trust

But possibly the more important point for the company is that a breach often leads to a loss of trust between the company and its customers. Existing and even potential customers may no longer trust the company to hold their data, and may switch to a competitor.

So, if as an organisation you can show that you protect your customers' data well, you'll build that trust and, in turn, can gain more customers.

4. Defines a Clear Standard to Develop to

My final point has an engineering focus: you're giving a clear standard to the people who build your applications.

There's no confusion over which standards you need to develop to, so developers and engineers can focus on the functional task at hand. The alternative is unclear requirements and several meetings to discuss them, which delays building the actual application.

Why Is Least Privilege Hard(er) to Achieve in Serverless Applications?

The first thing to call out is that you can never expect to achieve 100% least privilege, so you shouldn't hope to define every condition under which access should be granted. It's also quite difficult to measure the extent to which you are achieving least privilege. What metric should you use? And how do you decide what is good enough?

Both of these things make it difficult to decide how much effort to put in, and since you can't achieve perfect least privilege, there's no obvious point at which to stop.

You can also go too far: once you reach a certain level, the effort may outweigh the benefits, for example if you keep trying to lock things down for tiny edge cases.

The second point to consider, and this is very important, is that serverless applications are generally made up of many different small components. For example, if you're talking about the AWS world, you're talking about APIs and Lambda functions amongst many other things.

Take a server-based application that might just be running on a VM (an EC2 instance in the AWS world). In a serverless design, you might break it down into many different Lambda functions, SQS queues and API Gateway endpoints, amongst other things. Those Lambda functions then need to communicate with one another, and there are data sources and data stores to think about as well: everything is a lot more decomposed.

The third point is that developing applications with bad security is much easier than developing applications with good security. If you don't have to worry about things like encryption, permissions and what needs to access what, it becomes very easy. Of course, this isn't best practice, but in my experience, when developers are under time constraints they start to cut corners, and security can be one of those corners.

The last point is that non-functional requirements, such as security, typically get less attention than the functional requirements of the tickets engineers are working on. When you're working on a ticket as a developer or engineer, you focus on the functionality you need to build, rather than on questions like “does this thing need to be encrypted?” or on its availability, performance, etc.

Example: A Typical Serverless Application on AWS

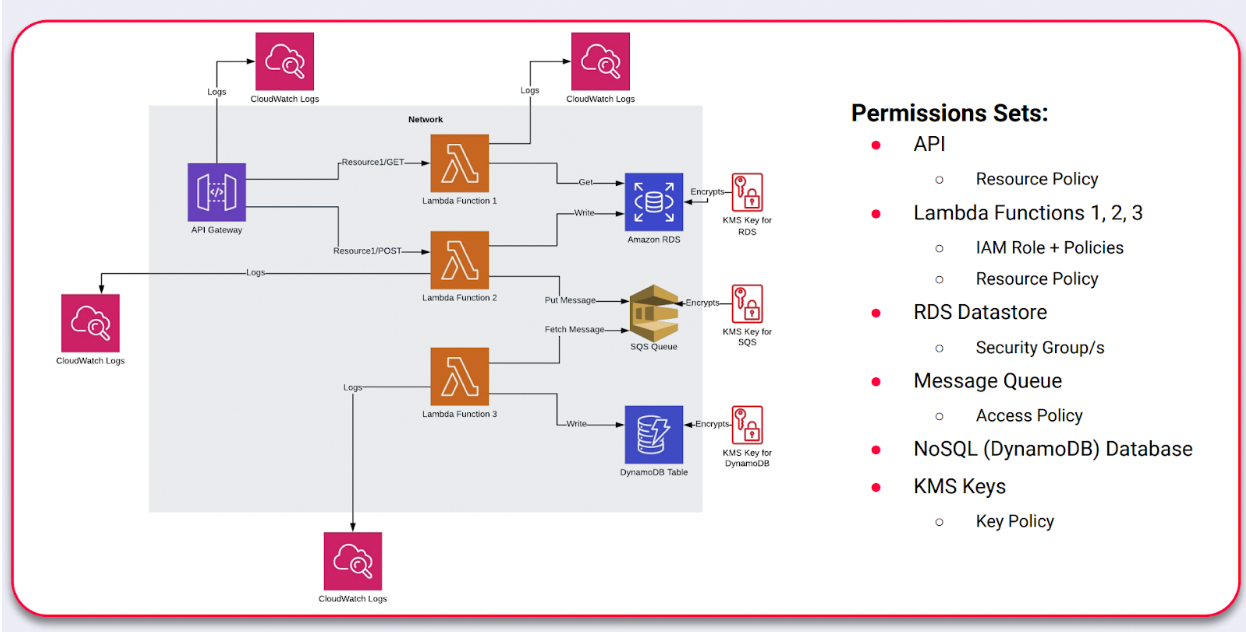

Serverless applications are typically broken down into many different components, and each of those components needs a permission set. I've tried to show here what I think a typical serverless application might look like. This is AWS-based, because that's my area of expertise, but it would look very similar in other clouds as well.

It's worth pointing out that the diagram above is very simplified. In reality, you might have other components, such as object storage in S3, or AWS Secrets Manager storing database credentials that the Lambda functions retrieve at runtime, amongst several other things.

But the point is that all, or at least most, of these components need permission sets, which take the form of IAM policies. Your API needs a resource policy, and each of your Lambda functions needs an attached IAM role that defines what it is allowed to do.

Permissions and Policies

Even with this simple architecture, you've got quite a lot of complication in there in terms of the number of policies and permission sets that you need.

There's also a resource policy that you can define on each Lambda function, which specifies who has access to that function and what they are allowed to do with it.

Your message queue has an access policy that defines which other components in the cloud can access the queue, and what access they have. You've also got KMS encryption keys, each of which needs a key policy to define the permissions on it.
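To make the queue case concrete, here is a minimal sketch in Python that builds an SQS access policy allowing a single Lambda execution role to send messages to one queue and nothing else. The account ID, region, queue name and role name are hypothetical placeholders, and this is just one way such a policy could be written.

```python
import json

# Hypothetical identifiers: replace with your own account, region, queue and role.
ACCOUNT_ID = "123456789012"
REGION = "eu-west-2"
QUEUE_NAME = "orders-queue"
PRODUCER_ROLE = "orders-api-lambda-role"


def build_queue_policy(account_id: str, region: str, queue_name: str, role_name: str) -> dict:
    """Return an SQS access policy that lets one IAM role send messages to one queue."""
    queue_arn = f"arn:aws:sqs:{region}:{account_id}:{queue_name}"
    role_arn = f"arn:aws:iam::{account_id}:role/{role_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowProducerSendOnly",
                "Effect": "Allow",
                # Trust a single role, not the whole account.
                "Principal": {"AWS": role_arn},
                # Only the action the producer needs: no receive, delete or purge.
                "Action": "sqs:SendMessage",
                "Resource": queue_arn,
            }
        ],
    }


if __name__ == "__main__":
    policy = build_queue_policy(ACCOUNT_ID, REGION, QUEUE_NAME, PRODUCER_ROLE)
    print(json.dumps(policy, indent=2))
```

The consuming Lambda function would still need `sqs:ReceiveMessage`, `sqs:DeleteMessage` and `sqs:GetQueueAttributes` to read from the queue; those permissions belong on its own execution role rather than in this producer-only statement.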

Example: AWS DynamoDB Permissions

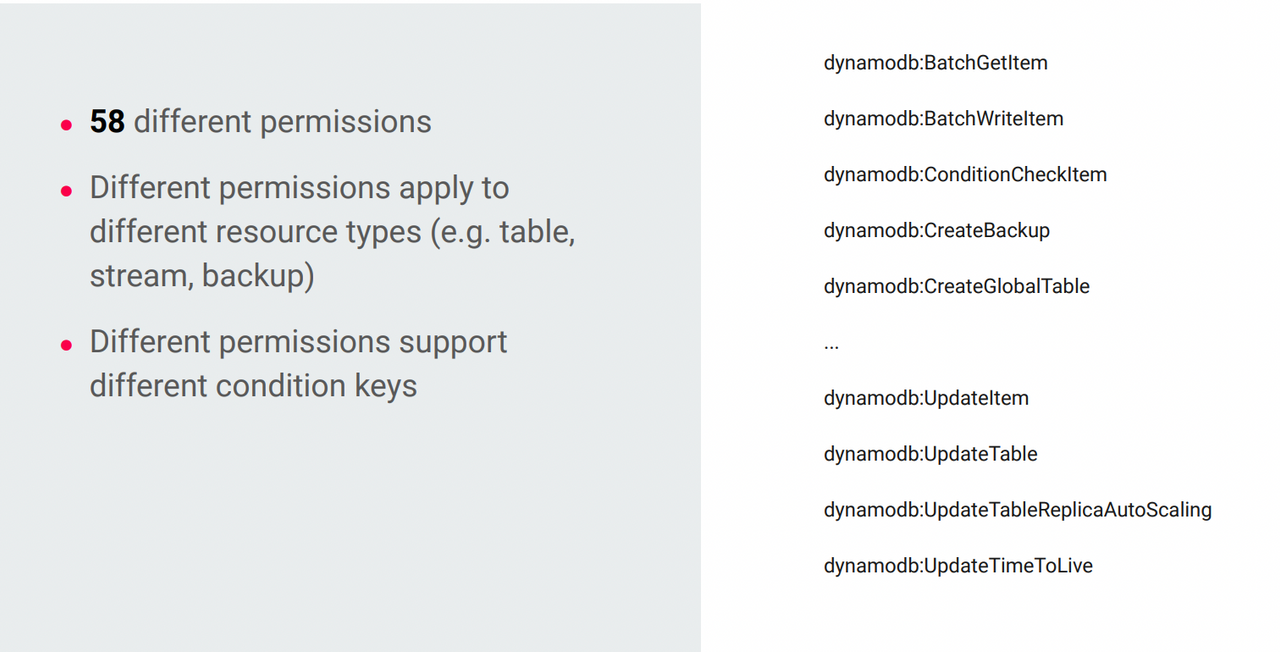

Let’s take the example of AWS DynamoDB.

Imagine you are a developer and you are writing a policy for your Lambda function that needs to access DynamoDB and you need to adhere to least privilege.

You need to give it exactly the permissions it needs to do its job and nothing else. There are 58 different permissions in DynamoDB, and different permissions apply to different resource types: the DynamoDB service is made up of tables, streams, backups and various other things. There's also the concept of condition keys, which allow you to grant access only under certain conditions, and only some condition keys can be used with a given permission.
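As an illustration of a condition key, the sketch below (Python, building the policy as a plain dictionary) allows `dynamodb:GetItem` and `dynamodb:Query` on one table, but only for items whose partition key matches a given value, using the `dynamodb:LeadingKeys` condition key. The table ARN and the fixed tenant ID are hypothetical.

```python
# Hypothetical table ARN, for illustration only.
TABLE_ARN = "arn:aws:dynamodb:eu-west-2:123456789012:table/Orders"


def tenant_scoped_read_policy(table_arn: str, tenant_id: str) -> dict:
    """Allow reads on one table, restricted to items whose partition key equals tenant_id."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Scan is deliberately excluded (see the note below).
                "Action": ["dynamodb:GetItem", "dynamodb:Query"],
                "Resource": table_arn,
                "Condition": {
                    # dynamodb:LeadingKeys holds the partition key value(s) in the request.
                    "ForAllValues:StringEquals": {"dynamodb:LeadingKeys": [tenant_id]}
                },
            }
        ],
    }


policy = tenant_scoped_read_policy(TABLE_ARN, "tenant-42")
```

`dynamodb:Scan` is left out on purpose: a scan carries no partition key, and a `ForAllValues` condition is satisfied when the request contains no values for the key, so the condition would not restrict a scan.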

Here are some examples of what a bad policy looks like, what a slightly better policy looks like, and what a good policy looks like for a Lambda function that needs to retrieve items from a particular DynamoDB table.

As you can see, the first policy allows full access to all Dynamo tables, which is way too wide-ranging a permission set for the task you’re trying to achieve.

The second is slightly better in that it only allows you to get items, rather than write, update, delete, etc., but it still allows the Lambda function to get items from any Dynamo table, and so has plenty of potential to cause damage should it be compromised.

The final permission set is where you start getting into ‘good’ territory: the Lambda function with this policy attached can only get items from a specific Dynamo table, which really is the only permission it needs in this case.
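The three policies can be written out as IAM policy documents. The following Python sketch mirrors the bad, better and good policies described above (the exact policies in the image may differ, and the table ARN is hypothetical), together with a deliberately crude check that flags wildcards in `Action` and `Resource`. It is only a heuristic: it ignores `NotAction`, `NotResource` and conditions, and is no substitute for purpose-built tools.

```python
# Hypothetical table ARN used for illustration.
TABLE_ARN = "arn:aws:dynamodb:eu-west-2:123456789012:table/Orders"

# 1. Bad: every DynamoDB action on every resource.
BAD = {"Version": "2012-10-17",
       "Statement": [{"Effect": "Allow", "Action": "dynamodb:*", "Resource": "*"}]}

# 2. Better: only reads single items, but from any table.
BETTER = {"Version": "2012-10-17",
          "Statement": [{"Effect": "Allow", "Action": "dynamodb:GetItem", "Resource": "*"}]}

# 3. Good: only reads single items, and only from one specific table.
GOOD = {"Version": "2012-10-17",
        "Statement": [{"Effect": "Allow", "Action": "dynamodb:GetItem", "Resource": TABLE_ARN}]}


def _as_list(value):
    """IAM accepts a single value or a list; normalise to a list."""
    return value if isinstance(value, list) else [value]


def wildcard_findings(policy: dict) -> list[str]:
    """Return notes on wildcard actions or resources in Allow statements."""
    findings = []
    for i, stmt in enumerate(_as_list(policy.get("Statement", []))):
        if stmt.get("Effect") != "Allow":
            continue
        for action in _as_list(stmt.get("Action", [])):
            if "*" in action:
                findings.append(f"statement {i}: wildcard action {action!r}")
        for resource in _as_list(stmt.get("Resource", [])):
            if "*" in resource:
                findings.append(f"statement {i}: wildcard resource {resource!r}")
    return findings


for name, policy in [("bad", BAD), ("better", BETTER), ("good", GOOD)]:
    print(name, wildcard_findings(policy))
```

Running it reports two findings for the bad policy, one (the all-tables resource) for the better policy and none for the good one.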

But What Happens When You Don't Achieve Least Privilege?

Before we get into that, it's important to identify our general concerns when we talk about security. Generally, we talk about three main properties of security. I've referred to them as security properties of data here because, in my opinion, when you discuss security you are generally talking about data.

The three main properties of data that we are generally concerned with are:

1. Confidentiality

Confidentiality means that the data is only accessible to authorised entities. This is the concern most people have when they think about data. When unauthorised people get access to the data, it's known as disclosure, so if confidentiality is broken, the data has been disclosed in an unauthorised way.

2. Integrity

Integrity means that the data can't be changed by unauthorised entities. This concern gets talked about a bit less, but it's potentially even more serious: if people can go into your database and start changing data, they can do a lot of harm. A breach of integrity is known as unauthorised alteration.

3. Availability

Availability means that data can be accessed when and where it's needed. This is really important because modern applications are often highly data-driven. An example of an attack on availability is a “denial of service” attack, whereby an attacker overwhelms your application and stops it from running because it simply can't service the number of requests being made to it. In the serverless world, this kind of attack is often known as a “denial of wallet” attack: serverless applications are so scalable that they can keep serving a very high number of requests, but at the end of the month (or whenever your billing period ends) you get a huge bill for all the resources used to serve those requests. A breach of availability is generally known as destruction.
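To put rough numbers on a “denial of wallet” attack, here is a back-of-the-envelope Python sketch based on Lambda's model of a per-request charge plus a charge per GB-second of compute. The prices are illustrative placeholders, not current AWS pricing, and the estimate ignores the free tier and every other service in the request path (API Gateway, DynamoDB and so on).

```python
def estimate_lambda_cost(requests: int, avg_duration_ms: float, memory_mb: int,
                         price_per_million_requests: float, price_per_gb_second: float) -> float:
    """Rough Lambda compute cost: a per-request charge plus a charge per GB-second."""
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_charge = requests / 1_000_000 * price_per_million_requests
    return request_charge + gb_seconds * price_per_gb_second


# Illustrative placeholder prices only: check current AWS pricing for your region.
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.00002

# A normal month for a hypothetical function: 2 million requests, 200 ms each, 512 MB.
normal = estimate_lambda_cost(2_000_000, 200, 512,
                              PRICE_PER_MILLION_REQUESTS, PRICE_PER_GB_SECOND)
# Same function and configuration, but an attacker drives 500x the usual traffic.
attacked = estimate_lambda_cost(1_000_000_000, 200, 512,
                                PRICE_PER_MILLION_REQUESTS, PRICE_PER_GB_SECOND)
print(f"normal month: ${normal:,.2f}  under attack: ${attacked:,.2f}")
```

Because every term scales linearly with request volume, a flood of requests that the platform happily scales to serve turns directly into a proportionally larger bill.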

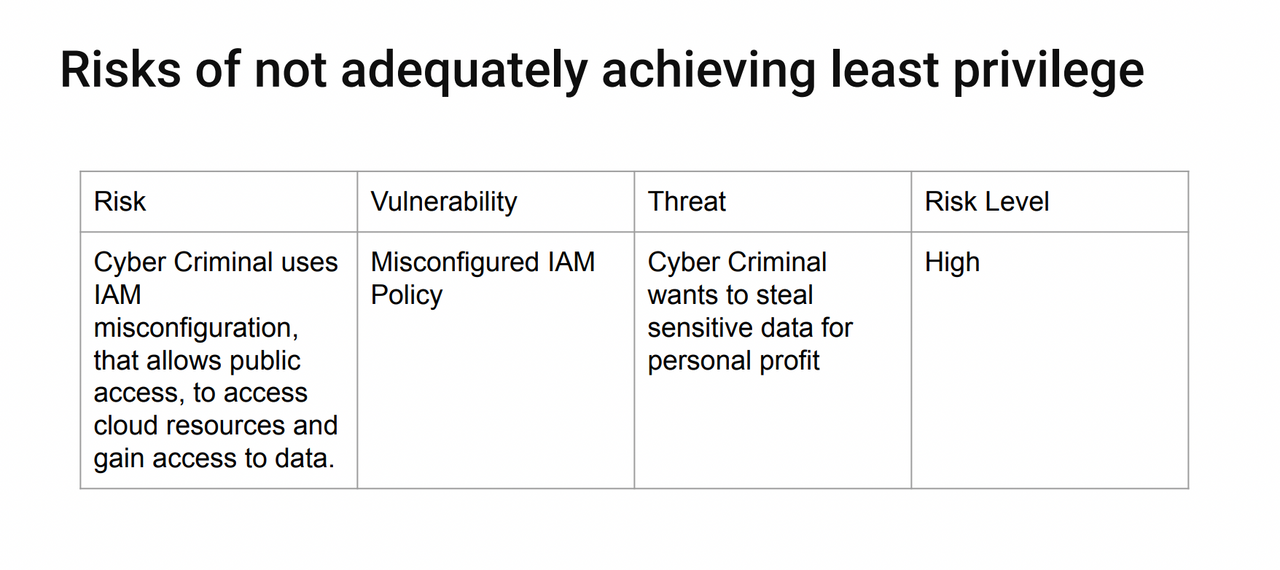

A risk generally relates to a breach of one or more of these security properties: confidentiality, integrity and availability. In this diagram, our risk relates to confidentiality: a cyber criminal uses a misconfiguration to get access to a cloud resource, and through it to data. The data is exposed, and if the attacker can also manipulate it, there's an attack on integrity as well.

Next we have our vulnerability. A vulnerability is a weakness in a system that can be exploited to cause damage to an asset.

The threat generally involves an attacker; in this case, the generic cyber criminal. The attacker has a certain level of risk aversion and a certain frequency with which they'll conduct this attack.

These two things, the vulnerability and the threat, combine to form the overall risk. Assessing risk means identifying threats, working out which threats could exploit which vulnerabilities, and evaluating the resulting risks to give each one a risk level.
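One common way to turn this into a risk level, not necessarily the approach used in the webinar, is to score each threat/vulnerability pairing for likelihood and impact and multiply the two. The minimal Python sketch below assumes hypothetical 1 to 5 scales and arbitrary band thresholds; a team would agree its own.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """A single threat/vulnerability pairing scored on hypothetical 1-5 scales."""

    threat: str
    vulnerability: str
    likelihood: int  # 1 = rare, 5 = almost certain (informed by how often the threat actor attacks)
    impact: int  # 1 = negligible, 5 = severe (e.g. customer data disclosed)

    def __post_init__(self) -> None:
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    def score(self) -> int:
        return self.likelihood * self.impact

    def level(self) -> str:
        # Arbitrary example thresholds on the 1-25 score.
        s = self.score()
        if s >= 15:
            return "high"
        if s >= 8:
            return "medium"
        return "low"


register = [
    Risk("cyber criminal", "Lambda role with dynamodb:* on all tables", likelihood=3, impact=5),
    Risk("cyber criminal", "Lambda role limited to GetItem on one table", likelihood=2, impact=2),
]

# Highest-scoring risks first, so the team knows where to focus controls.
for risk in sorted(register, key=Risk.score, reverse=True):
    print(f"{risk.level():6} {risk.score():2}  {risk.threat}: {risk.vulnerability}")
```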

Similar examples could be made for different types of attackers targeting slightly different assets. But when we're talking about least privilege, the vulnerability is generally a misconfigured IAM policy or networking rule, which gives the attacker an entry point into the application or cloud platform. From there, they can conduct further attacks.

Once you have a good idea of the security risks you're facing, you can start to look at the controls you can put in place to mitigate them.

Security Controls

Here’s a list of security controls that could be put in place to help better achieve least privilege.

IAM Access Analyzer

The IAM Access Analyzer from AWS has a number of features that can help you prevent bad security practices and identify possible vulnerabilities, both in your existing application and before you implement changes.
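One of those features is policy validation. The sketch below assumes you call Access Analyzer's `ValidatePolicy` operation through a boto3 client (`validate_policy`), following `nextToken` until all findings are collected, and then keeps the `ERROR` and `SECURITY_WARNING` findings that a pipeline might fail on. Which finding types to treat as blocking is an assumption, and the client is passed in so the function can be exercised without AWS credentials.

```python
import json


def validate_identity_policy(client, policy: dict) -> list[dict]:
    """Run Access Analyzer policy validation on an identity policy and return all findings.

    `client` is assumed to be boto3.client("accessanalyzer") or any object with a
    compatible validate_policy method.
    """
    findings = []
    kwargs = {"policyDocument": json.dumps(policy), "policyType": "IDENTITY_POLICY"}
    while True:
        response = client.validate_policy(**kwargs)
        findings.extend(response.get("findings", []))
        token = response.get("nextToken")
        if not token:
            return findings
        # Results are paginated: request the next page with the returned token.
        kwargs["nextToken"] = token


def blocking_findings(findings: list[dict]) -> list[dict]:
    """Keep only the finding types this sketch treats as build-breaking."""
    return [f for f in findings if f.get("findingType") in {"ERROR", "SECURITY_WARNING"}]
```

In practice you would pass `boto3.client("accessanalyzer")`, with credentials that allow `access-analyzer:ValidatePolicy`, and feed it a policy such as a Lambda function's execution-role policy.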

Shift Left Approach

A shift left approach to security means considering security earlier in the development life cycle. DevSecOps also strongly advocates continuous security testing. Together, these lead to a state where security is built into the requirements: security requirements are shifted left into the original requirements, so they're embedded in the tickets the engineers and the team will work on, and security is measured and evaluated, DevSecOps-style, whenever changes are made to the application. This allows a better security baseline to be reached and maintained going forward.

Static Analysis Tools

Modern cloud infrastructure is typically built using infrastructure as code. AWS has its CloudFormation service, which allows you to define in JSON or YAML what your infrastructure should look like (the properties of your S3 bucket or your Lambda function, etc.). Static analysis tools such as cfn-nag and CloudFormation Guard have since been built on top of that; they scan your templates and identify vulnerabilities in that code.

In my opinion, developers who write infrastructure code should all be encouraged to run these tools locally. It helps you identify problems at the earliest possible opportunity and fix them then, rather than later down the line, when fixing them will be a lot more effort.
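To illustrate what these tools do (far less thoroughly than cfn-nag or CloudFormation Guard), here is a small, hypothetical Python script that reads a JSON CloudFormation template, finds the policy documents in `AWS::IAM::Role`, `AWS::IAM::Policy` and `AWS::IAM::ManagedPolicy` resources, and flags wildcard actions or resources. It exits non-zero when it finds something, so it could run locally as a pre-commit hook or as a pipeline step. It does not handle YAML templates, and it skips values built with intrinsic functions such as `Fn::GetAtt`; it is a sketch, not a replacement for a maintained tool.

```python
import json
import sys


def _as_list(value):
    """IAM accepts a single value or a list; normalise to a list."""
    return value if isinstance(value, list) else [value]


def policy_documents(template: dict):
    """Yield (logical_id, policy_document) pairs for common IAM resource types."""
    for logical_id, resource in template.get("Resources", {}).items():
        rtype = resource.get("Type")
        props = resource.get("Properties", {})
        if rtype == "AWS::IAM::Role":
            for inline in props.get("Policies", []):
                yield logical_id, inline.get("PolicyDocument", {})
        elif rtype in ("AWS::IAM::Policy", "AWS::IAM::ManagedPolicy"):
            yield logical_id, props.get("PolicyDocument", {})


def scan_template(template: dict) -> list[str]:
    """Return a problem description for each wildcard action or resource in an Allow."""
    problems = []
    for logical_id, doc in policy_documents(template):
        for stmt in _as_list(doc.get("Statement", [])):
            if stmt.get("Effect") != "Allow":
                continue
            for action in _as_list(stmt.get("Action", [])):
                if isinstance(action, str) and "*" in action:
                    problems.append(f"{logical_id}: wildcard action {action!r}")
            for resource in _as_list(stmt.get("Resource", [])):
                # Intrinsic functions (dicts) are skipped rather than resolved.
                if isinstance(resource, str) and "*" in resource:
                    problems.append(f"{logical_id}: wildcard resource {resource!r}")
    return problems


def main(path: str) -> int:
    with open(path) as f:
        template = json.load(f)
    problems = scan_template(template)
    for problem in problems:
        print(problem)
    # Non-zero exit status fails a pre-commit hook or CI step.
    return 1 if problems else 0


# Usage: python scan_iam.py template.json
if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```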

Q&A

Which Scanning Tools Would You Recommend?

I don't think there's one particular tool. If you're using CloudFormation, check out cfn-nag and CloudFormation Guard. If you happen to be using Terraform for your infrastructure code, you might choose a different tool, such as Checkov. If you're working on a different cloud platform, you might choose another tool again. It's about finding the right tool for your team: evaluate a couple of different tools and choose the one that best helps you accomplish what you want to do.

How Do You Determine the Vulnerabilities and Threats in Your Particular System?

I think this one has to be a collaborative effort: sitting down as a team, looking at your architecture diagrams and thinking it through. To start with, look at where the weak points in your system are (where could your system be attacked?) and which assets, usually data, an attacker would want to target.

You then need to think about those vulnerabilities and try to uncover exactly who the threat actors that would want to attack your system are, and how they would go about it. What method would they use?

Then build up the picture: once you've identified the weak points and the threats, you can think about which weak points each threat could potentially exploit. Put those two things together and you've got a risk.

For more information and a deeper understanding of how to secure serverless applications using the principle of least privilege, watch Mark Faiers’ full webinar here: