Top 10 Data Trends From AWS Summit ANZ

AWS Summit Online ANZ took place on 18 and 19 May, bringing together cloud experts and enthusiasts from across Australia and New Zealand in a virtual event.

We enjoyed two days packed full of interesting talks including keynotes and educational sessions from industry leaders.

Contino was excited to host two sessions which you can watch back here:

- Build Cloud Operating Models for Highly Regulated Enterprises

- Using DataOps to Reduce Friction for a Competitive Advantage

Each year, the event aims to deepen your understanding of AWS products and services and to help you ‘accelerate your future in the cloud’.

This year, we were happy to see a big focus on data: data science, ML, AI and data analytics. A good proportion of talks focused on the common pitfalls of modern data platforms and how best to implement scalable systems iteratively.

In this blog, we’ve summarised the key takeaways on rising data trends on AWS from across the two days.

Our Top Picks: 10 Best Talks from AWS Summit Online ANZ

It was impossible to catch every session, but we particularly enjoyed the following talks which focused on the importance of a data-first cloud strategy:

- Developing fast and efficient data science while ensuring security and compliance

- Making the event-driven enterprise a reality using AWS streaming data services

- A/B testing machine learning models with Amazon SageMaker MLOps

- Using reinforcement learning to solve business problems

- Intelligent document processing using artificial intelligence

- Re-thinking analytics architectures in the cloud

- Data engineering made easy

- Lakehouse architecture: Simplifying infrastructure and accelerating innovation

- Data governance at Scale on AWS

- Reimagine the role of the data analyst

Here are the top ten data trends you should take away from these talks.

Top 10 Data Trends and How to Leverage Them on AWS

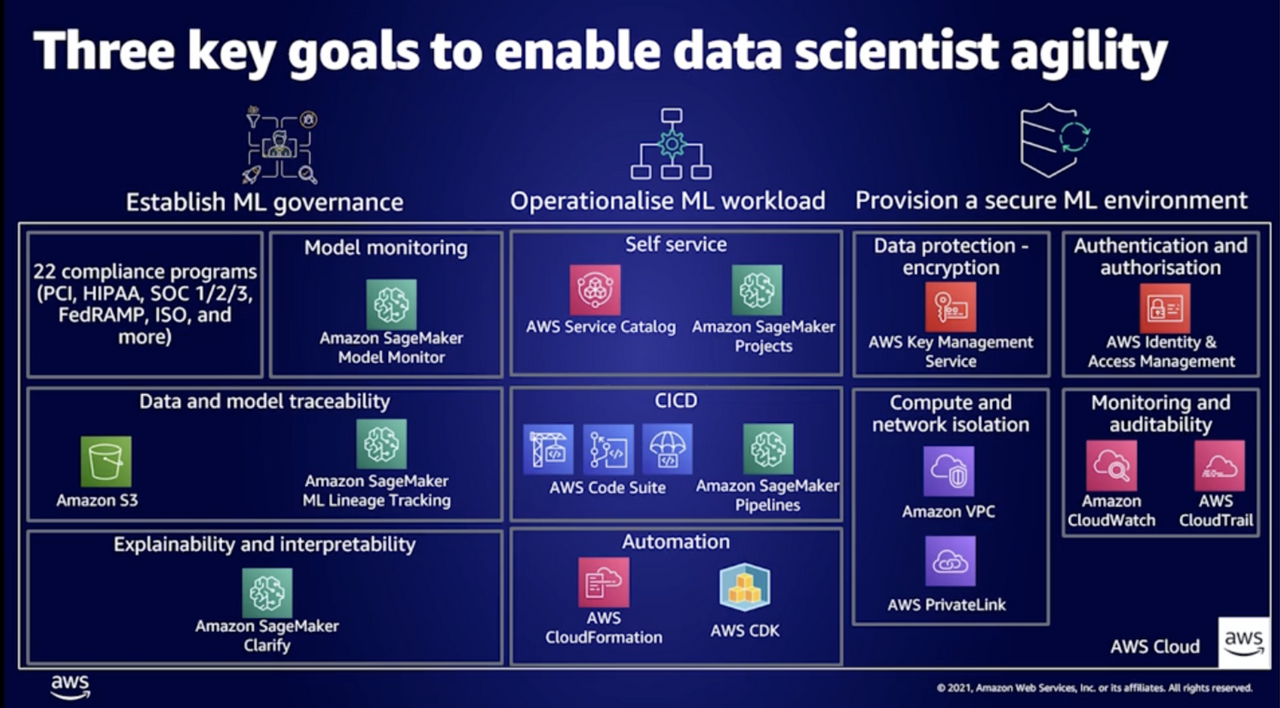

1. Balance ML agility with IT governance

Watch the talk on demand: Developing fast and efficient data science while ensuring security and compliance

Highly regulated industries such as financial services and the public sector have to follow a set of compliance requirements around access management, data security, model lineage, auditability and explainability.

Combine this with the complex development process of ML solutions, where the ML code is just a small part of the overall deployment process, and up to 85% of ML models never make it to production.

One way to solve this is by balancing ML agility with IT governance.

Key Takeaways:

- Productionising ML is challenging in most cases, and even more so in highly regulated environments

- Three ingredients are necessary for ML agility:

  - Establishing ML governance

  - Operationalising ML workloads

  - Provisioning a secure ML environment

- An easy way to get started is MLMax, a series of templates that accelerate the delivery of custom ML solutions to production

Services:

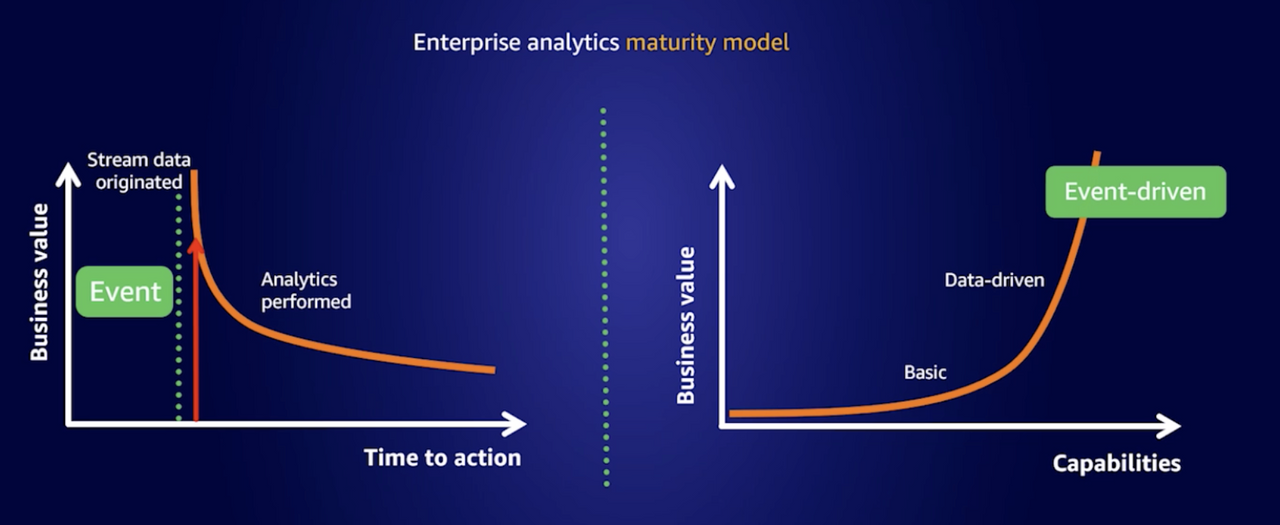

2. Leverage event-driven capabilities to stay competitive

Watch the talk on demand: Making the event-driven enterprise a reality using AWS streaming data services

Enterprises in industries such as mining, telecom, logistics, energy and financial services are embarking on digital transformation journeys to stay competitive. These enterprises produce a large variety of event data at high speed from IoT devices, enterprise apps, social media and application logs.

The business value of event data decreases the longer it takes to analyse, so the more mature an organisation’s event-driven capabilities become, the more value it can capture.

The talk walks through four prevalent event-driven patterns (IoT, clickstream, log analytics and change data capture) and provides a reference architecture for each.

Key Takeaways:

- Capabilities required for becoming event-driven:

  - Stream ingestion

  - Stream storage

  - Stream analytics

  - Stream integration (for example, delivering data to a data lake)

- Outcomes of event-driven capabilities:

  - Automatic decision-making

  - Interactive dashboards

  - Alerting

  - Real-time ML inference

- Benefits of AWS streaming services:

  - Easy to use

  - Seamless integration

  - Fully managed

  - Secure and compliant
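To make the stream analytics and alerting ideas above more concrete, here is a minimal, service-agnostic sketch in plain Python of a tumbling-window aggregation: events are bucketed into fixed, non-overlapping time windows, counted per type, and any window that reaches a threshold raises an alert. It does not use any AWS API; the event shape (timestamp, event type), the function names and the threshold are our own illustrative assumptions. In practice this logic would run inside a managed stream analytics service rather than over an in-memory list.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical event shape: (timestamp in seconds, event type), e.g. clickstream or log records.
Event = Tuple[float, str]


def tumbling_window_counts(events: Iterable[Event], window_seconds: int) -> Dict[int, Counter]:
    """Count event types per fixed, non-overlapping (tumbling) time window.

    Keys of the returned dict are window start times in seconds.
    """
    windows: Dict[int, Counter] = defaultdict(Counter)
    for timestamp, event_type in events:
        # Every event falls into exactly one window, identified by its start time.
        window_start = int(timestamp // window_seconds) * window_seconds
        windows[window_start][event_type] += 1
    return dict(windows)


def windows_over_threshold(windows: Dict[int, Counter], event_type: str, threshold: int) -> List[int]:
    """Return start times of windows where `event_type` reached `threshold` (i.e. an alert)."""
    return sorted(start for start, counts in windows.items() if counts[event_type] >= threshold)


if __name__ == "__main__":
    events = [(0.5, "click"), (3.2, "click"), (4.9, "error"), (6.0, "click"), (7.5, "error"), (8.1, "error")]
    counts = tumbling_window_counts(events, window_seconds=5)
    print(counts)
    print(windows_over_threshold(counts, "error", threshold=2))  # [5]
```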

Services:

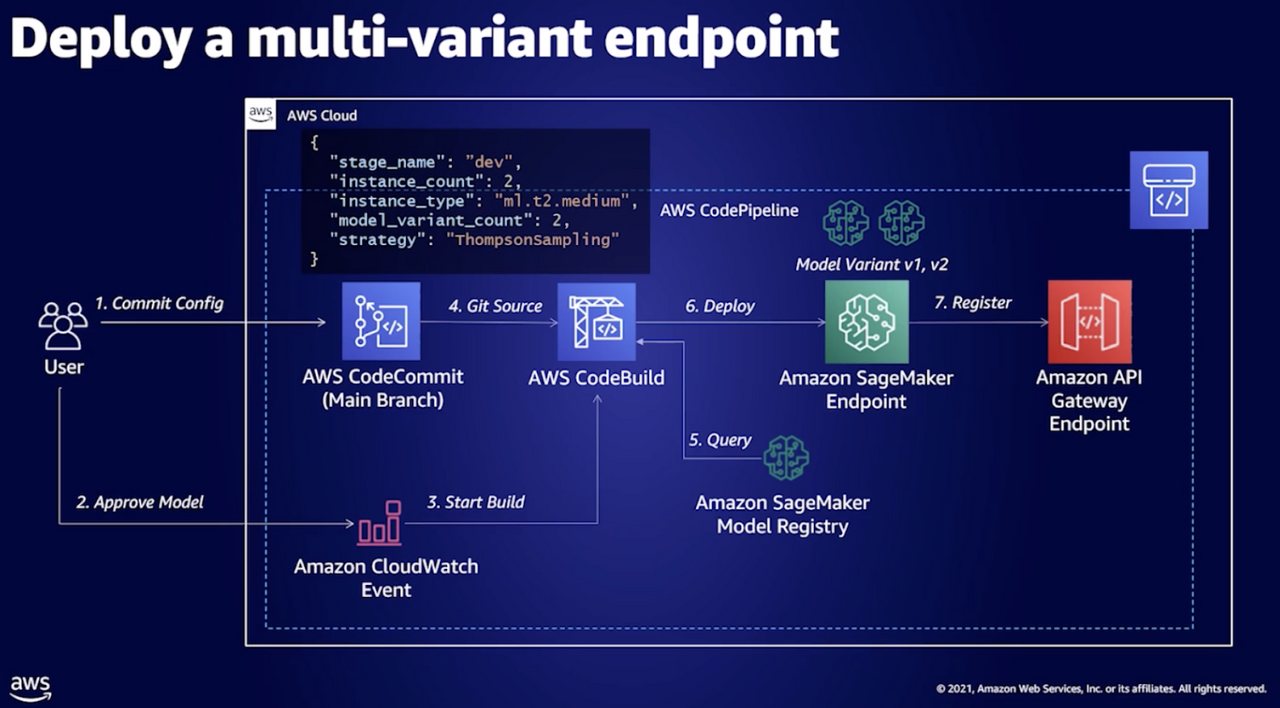

3. SageMaker makes it easy to A/B test new machine learning deployments

Watch the talk on demand: A/B testing machine learning models with Amazon SageMaker MLOps

In the context of machine learning, A/B testing allows you to see how a new ML model performs against an existing one in production by transferring some of the traffic to your new ML model.

For example, you might show the recommendations from a new recommendation engine to a random subset of your users and see if they engage more with the new recommendations than users who are being shown those from the old engine. If you see a substantial increase in engagement, you can replace the old model with your new model for all users.

This talk gives an overview of A/B testing and explains how multi-armed bandits offer a better way of balancing traffic between old and new models, by progressively shifting traffic to the better-performing model.
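As a rough illustration of how a bandit shifts traffic, here is a minimal sketch of Thompson sampling, one common multi-armed bandit algorithm (we are not claiming it is the exact approach used in the talk). Each model keeps a Beta distribution over its engagement rate; every request goes to the model whose sampled rate is highest, so traffic drifts towards the better performer as evidence accumulates. The model names and "true" engagement rates are hypothetical and are only used to simulate user feedback.

```python
import random
from typing import Dict


def thompson_sampling_split(true_rates: Dict[str, float], rounds: int, seed: int = 0) -> Dict[str, int]:
    """Simulate routing `rounds` requests between models using Thompson sampling.

    `true_rates` are each model's real engagement rate. They are unknown in production
    and are used here only to simulate whether a user engaged. Returns requests served per model.
    """
    rng = random.Random(seed)
    # Beta(1, 1) prior for every model: one pseudo-success and one pseudo-failure.
    successes = {name: 1 for name in true_rates}
    failures = {name: 1 for name in true_rates}
    served = {name: 0 for name in true_rates}
    for _ in range(rounds):
        # Draw a plausible engagement rate per model and route the request to the highest draw.
        draws = {name: rng.betavariate(successes[name], failures[name]) for name in true_rates}
        chosen = max(draws, key=draws.get)
        served[chosen] += 1
        # Simulated feedback: the user engages with probability equal to the model's true rate.
        if rng.random() < true_rates[chosen]:
            successes[chosen] += 1
        else:
            failures[chosen] += 1
    return served


if __name__ == "__main__":
    # Hypothetical rates: the new model engages users twice as often as the old one.
    print(thompson_sampling_split({"old_model": 0.05, "new_model": 0.10}, rounds=20000, seed=42))
```

With these hypothetical rates, the new model ends up serving most of the simulated requests, while the old model still receives occasional traffic so its estimate keeps being checked.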

Where things get really cool is the demonstration of how easy it is to set up Amazon SageMaker Endpoints to host multiple production models and automatically distribute traffic according to your chosen A/B testing or multi-armed bandit approach.

What would typically be a complex process becomes as simple as specifying parameters to tell SageMaker Endpoints how to distribute traffic to your deployed models.
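A minimal sketch of what "specifying parameters" can look like: a SageMaker endpoint configuration lists production variants, each with an `InitialVariantWeight`, and traffic is split in proportion to those weights. The field names below follow the `ProductionVariants` structure of SageMaker's CreateEndpointConfig API as we understand it; the model names, instance type and the 90/10 split are assumptions for illustration. The snippet only builds the configuration and computes the expected split; it does not call AWS.

```python
from typing import Dict, List

# Hypothetical variants: the model names are placeholders for SageMaker models you have already created.
production_variants: List[dict] = [
    {
        "VariantName": "current-model",
        "ModelName": "recommender-v1",
        "InitialInstanceCount": 1,
        "InstanceType": "ml.m5.large",
        "InitialVariantWeight": 9.0,
    },
    {
        "VariantName": "challenger-model",
        "ModelName": "recommender-v2",
        "InitialInstanceCount": 1,
        "InstanceType": "ml.m5.large",
        "InitialVariantWeight": 1.0,
    },
]


def traffic_shares(variants: List[dict]) -> Dict[str, float]:
    """Each variant receives weight / sum(weights) of the endpoint's requests."""
    total = sum(v["InitialVariantWeight"] for v in variants)
    return {v["VariantName"]: v["InitialVariantWeight"] / total for v in variants}


if __name__ == "__main__":
    print(traffic_shares(production_variants))  # roughly 90% current, 10% challenger
```

With boto3, a list like this would be passed as the `ProductionVariants` argument of the SageMaker client's `create_endpoint_config` call, and the weights can later be shifted (for example, towards the challenger) with `update_endpoint_weights_and_capacities`, without redeploying the models.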

Key Takeaways:

- A/B testing is a useful way to test model performance

- A/B testing is simple to achieve using SageMaker

Services:

- Amazon SageMaker

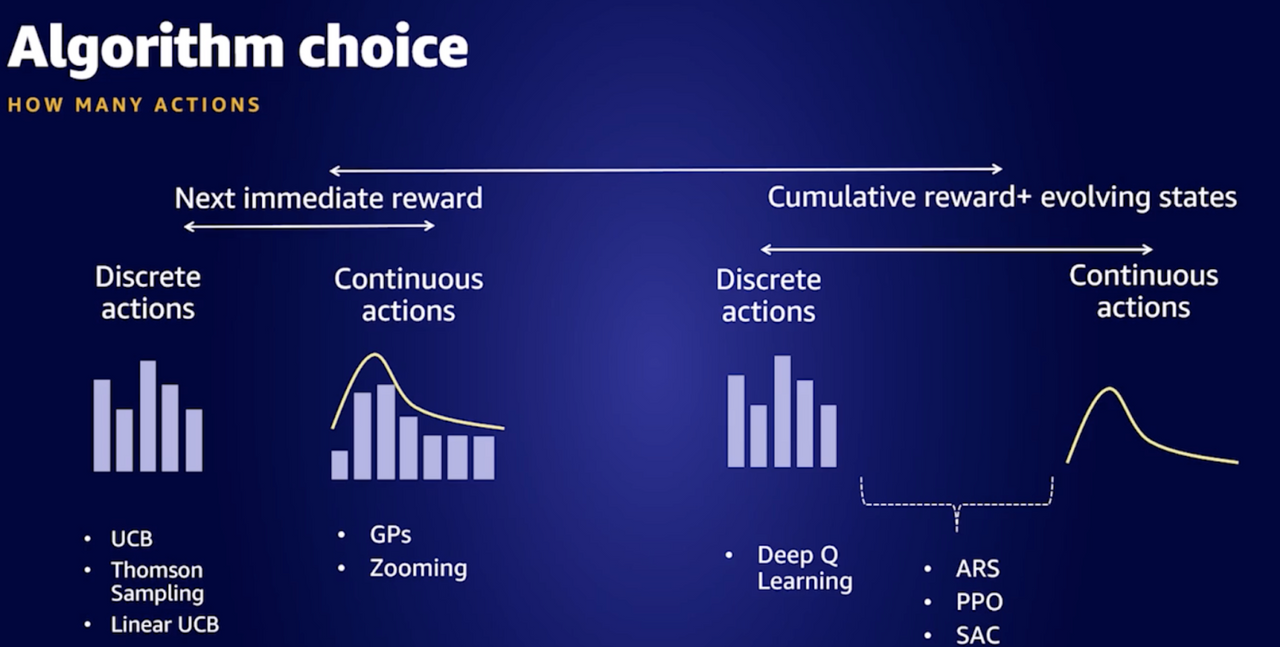

4. Reinforcement learning is ready to be productionised for business use cases

Watch the talk on demand: Using reinforcement learning to solve business problems

Reinforcement learning (RL) involves an agent interacting with an environment, often a simulated one, and learning through trial and error.

For a long time, RL was seen as a tool for creating AI for games such as chess and Go. However, it has increasingly been showing up in business use cases. This talk covers real-world experience of using RL for business use cases and how to get RL models into production.

Key Takeaways:

- The four recommended steps for productionising RL:

  - Formulate the problem (understand the environment, the available actions and what to optimise)

  - Build a training and evaluation system. You need to be able to simulate how actions impact the environment (informed/validated by past data and SMEs)

  - Try out different RL algorithms

  - Phase the RL model into use (e.g. using A/B testing) and potentially keep an operator in the loop to validate critical decisions.
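The first three steps can be illustrated with a deliberately tiny example. The problem is formulated as five states in a corridor, two actions (move left or right) and a reward for reaching the goal; a toy simulator stands in for the training and evaluation system; and tabular Q-learning is just one of the RL algorithms you might try. Everything here (environment, rewards, hyperparameters) is a hypothetical sketch rather than anything from the talk; a real business simulator would be informed by past data and SMEs.

```python
import random
from typing import List, Tuple

N_STATES = 5          # hypothetical states 0..4; state 4 is the goal
ACTIONS = (0, 1)      # 0 = move left, 1 = move right
GOAL = N_STATES - 1


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Toy simulator: returns (next_state, reward, done). Reward 1.0 only on reaching the goal."""
    next_state = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def train_q_learning(episodes: int = 1000, alpha: float = 0.5, gamma: float = 0.9,
                     epsilon: float = 0.1, max_steps: int = 100, seed: int = 0) -> List[List[float]]:
    """Tabular Q-learning with epsilon-greedy exploration; returns the Q-table q[state][action]."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                best = max(q[state])
                # Exploit, breaking ties randomly so early episodes behave like a random walk.
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            next_state, reward, done = step(state, action)
            # Terminal transitions have no future value to bootstrap from.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q: List[List[float]]) -> List[int]:
    """Best learned action for each non-goal state."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(GOAL)]


if __name__ == "__main__":
    print(greedy_policy(train_q_learning()))  # [1, 1, 1, 1]: always move right towards the goal
```

Phasing the learned policy into use (the fourth step) is deliberately left out; in practice that is where A/B testing and an operator in the loop come in.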

Services:

- Amazon SageMaker

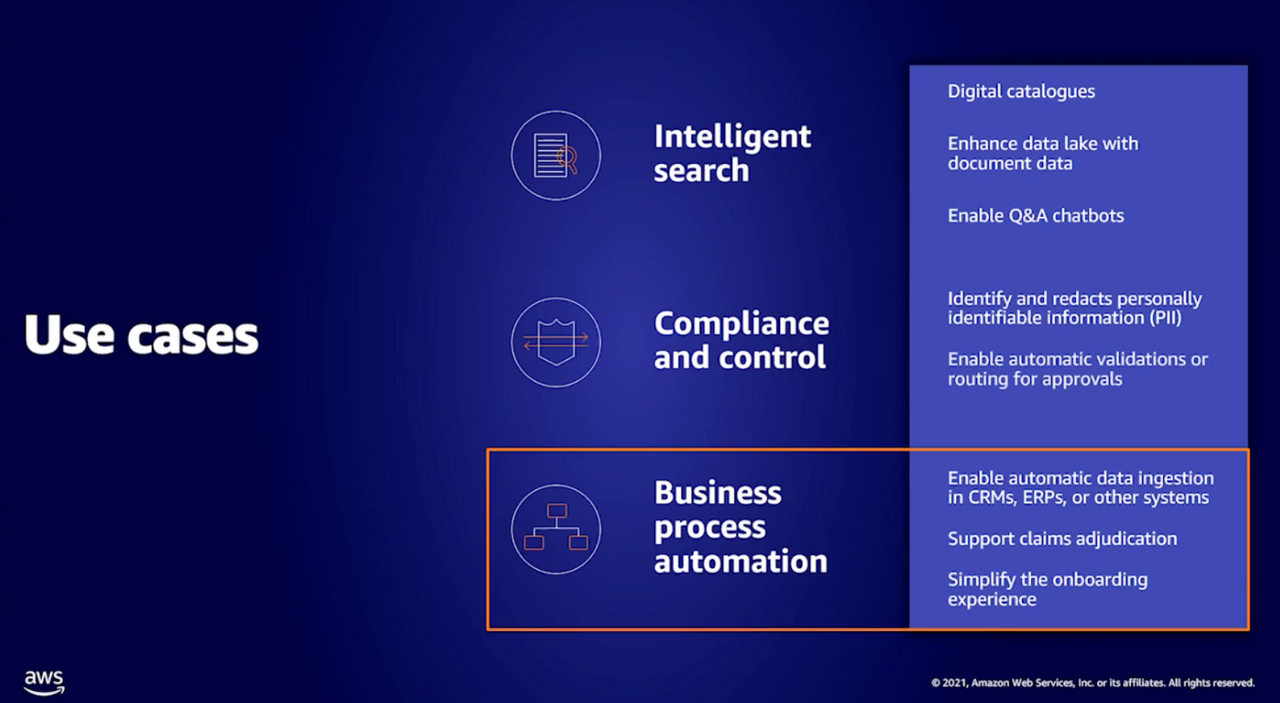

5. Automate document processing

Watch the talk on demand: Intelligent document processing using artificial intelligence

In 2021, digital transformation initiatives in more than three-quarters of enterprises will focus on automation, with a fifth of enterprises expanding their investment in intelligent document extraction.

ML can be used to drive significant operational efficiencies where documents are involved.

Extracting information from documents manually can be time-consuming, expensive and difficult to scale, and can result in a poor customer experience.

Key Takeaways:

- Some core issues the industry faces include:

  - Variety

  - Complexity

  - Diverse sources

- Benefits of intelligent document processing include:

  - Scalability and fast processing

  - Improved consistency and lower error rate

  - Lower document processing cost

  - Better customer experience

  - No ML experience required to get started

Services:

6. Decouple your cloud architecture

Watch the talk on demand: Re-thinking analytics architectures in the cloud

Lifting and shifting an on-prem data platform to the cloud rarely achieves the benefits you can get from investing in new cloud technologies. The cloud presents an opportunity to rethink data platform design to achieve the best price-performance.

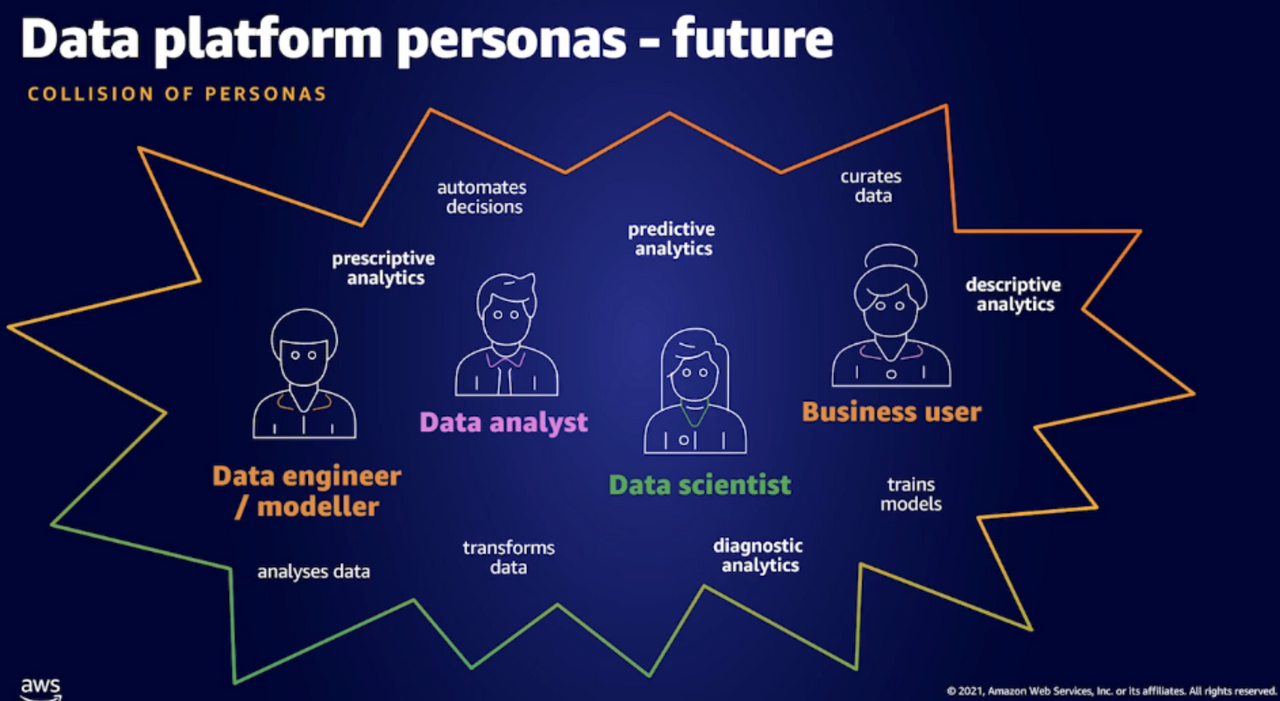

The talk stresses that architecture is ever-changing and must evolve to support the needs of dynamic businesses. It calls out some key personas who use data platforms and shows how new features or new AWS services can satisfy their requirements.

With over 200 services, the AWS service offering can be overwhelming. The talk maps business requirements to conscious architectural decisions: it starts from a very simple design and adds services and features step by step, eventually landing on a fairly complex but fit-for-purpose architecture.

Key Takeaways:

- Designing data platforms in the cloud is different from designing them on-prem. Purposefully decoupling your architecture allows you to progressively extend and scale your platform as the need arises

- Because of the inherent complexity of data platforms, end-state architectures can come across as overwhelmingly complex. Consciously mapping services back to requirements and best practices helps ensure the right tools are used for the right job and serves as a great communication tool

- More personas are interested in data now than ever before, and more will emerge in the future. Data platform architectures must accommodate this by following best practices.

- New services and features covered included AWS Glue DataBrew and Amazon Redshift data sharing. Both were well explained and mapped back to use cases. The architecture did not, however, use AWS Glue; it promoted Amazon EMR instead. DataBrew had some very interesting data quality check features, which would be extremely useful for data platforms.
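To show the kind of rule a data quality check expresses, here is a minimal plain-Python sketch of two common checks, column completeness and key uniqueness, run over a list of records. It is not DataBrew's API or rule format; the function names, the 95% completeness threshold and the sample records are assumptions for illustration only.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def completeness(rows: List[Row], column: str) -> float:
    """Fraction of rows where `column` is present and not None (1.0 for an empty dataset)."""
    if not rows:
        return 1.0
    filled = sum(1 for row in rows if row.get(column) is not None)
    return filled / len(rows)


def is_unique(rows: List[Row], column: str) -> bool:
    """True if no two rows share the same value in `column`."""
    values = [row.get(column) for row in rows]
    return len(values) == len(set(values))


def run_checks(rows: List[Row], key: str, required: List[str], min_completeness: float = 0.95) -> Dict[str, bool]:
    """Hypothetical rule set: the key must be unique and required columns sufficiently complete."""
    results = {f"{key}_unique": is_unique(rows, key)}
    for column in required:
        results[f"{column}_complete"] = completeness(rows, column) >= min_completeness
    return results


if __name__ == "__main__":
    rows = [
        {"customer_id": 1, "email": "a@example.com"},
        {"customer_id": 2, "email": None},
        {"customer_id": 2, "email": "c@example.com"},
    ]
    print(run_checks(rows, key="customer_id", required=["email"]))
```

In the sample, a duplicated `customer_id` and a missing email cause both checks to fail; results like these could be used to decide whether a dataset is ready to publish to consumers.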

Services:

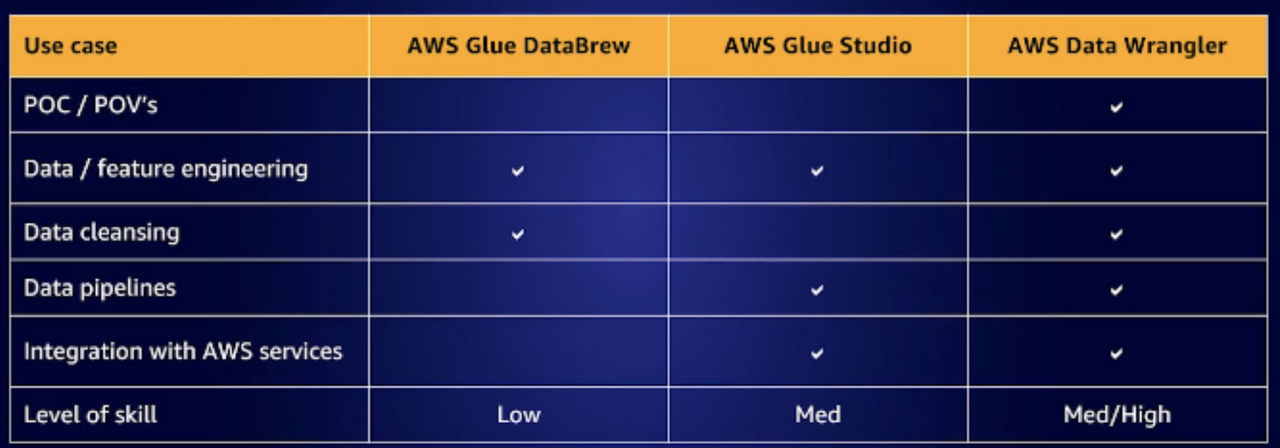

7. New data engineering services released

Watch the talk on demand: Data engineering made easy

Working with data is still very challenging and time-consuming. AWS continues to develop services in this space to support data engineering and related activities.

This talk focused on three new tools AWS is releasing to support users in this space. It goes on to identify the main users of each tool and the main use cases each can solve. There are also some great demos of all three tools.

AWS Glue DataBrew: Self-service, low-code data wrangling for everyone.

AWS Glue Studio: Visually create Spark jobs; aimed at data engineers new to Spark.

AWS Data Wrangler: Accelerate your development (think jet fuel); aimed at seasoned data engineers, developers and scientists.

Key Takeaways:

- AWS is investing heavily in satisfying the requirements of its ever-growing base of data users. There are three new tools, each aimed at a different user persona, though in some cases with a healthy level of overlap

- AWS Glue DataBrew - A visual, no-code data preparation tool that can profile, clean and map data, track lineage, and be automated (CI/CD). The main users would include BI developers, business users, analysts and scientists

- AWS Glue Studio - Visually create job flows that run on Spark, then execute them and monitor job runs and performance. User personas would include data engineers or SQL developers new to Spark

- AWS Data Wrangler (the one I am personally really excited about) - An open-source Python library that accelerates development, with code snippets and easy access to data and to other open-source libraries. Great for POCs and demos (and prod). Integrates with AWS services. User personas would include:

  - Analysts / scientists / engineers

  - Developers

Services:

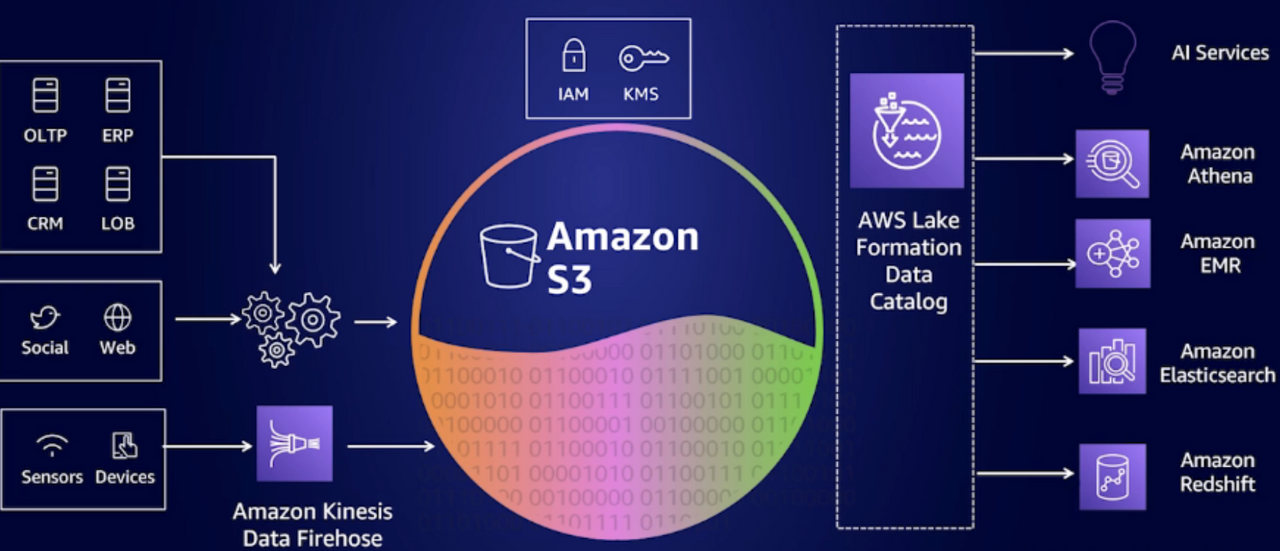

8. Lakehouses allow for performance and cost tradeoffs

Watch the talk on demand: Lakehouse architecture: Simplifying infrastructure and accelerating innovation

Given the rate and complexity of data growth, legacy architectures and designs simply won't be able to support ever-changing business demand. A new paradigm, termed the lakehouse, is emerging and proving extremely successful.

Lakehouses support the business by centrally storing data in open formats and enabling access through a variety of mechanisms, allowing for performance and cost tradeoffs. Lakehouse architecture provides scalability and a single source of truth, and unifies security and governance while keeping costs justifiable. A lakehouse is the ultimate collaboration tool and enables true data democratisation, a fundamental requirement for being a data-driven business.

Amazon Redshift is a fantastic tool within a lakehouse architecture, specifically when using Redshift Spectrum to query data in place in open formats such as Delta Lake, Hudi and Parquet. Redshift can also query data directly in Amazon RDS and Amazon Aurora using federated query. For example, users could create materialised views that combine data obtained directly from source systems (RDS and Aurora) with internal Redshift data, refreshed daily.

Key Takeaways:

- Lakehouse is emerging as the answer for many existing challenges

- A lakehouse achieves scalability and seamless data movement, provides unified governance, uses purpose-built data services and is both performant and cost-effective

- Redshift Spectrum enables reading data in place stored as Parquet, Hudi or Delta Lake. Redshift also applies access controls

- Cold data can be offloaded from Redshift into Amazon S3 and queried through Spectrum to reduce costs

- Federated query enables ‘virtualisation’, as you can query data in operational systems such as Aurora and RDS for PostgreSQL as well as in the data lake. This approach can significantly speed up getting data to the users who need it and is an ideal fit for POCs and experiments

- AWS Lake Formation is continuing to mature, particularly in the data access space, allowing fine-grained, centralised access control
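The cold-data offloading idea can be sketched in plain Python: rows older than a chosen cutoff become candidates for offloading to Amazon S3, written under date-based partition prefixes so a query engine such as Redshift Spectrum can skip irrelevant partitions. The 90-day cutoff, the bucket path and the Hive-style `year=`/`month=` layout are our own assumptions for illustration; this sketch only decides where rows would go and does not move any data.

```python
from datetime import date, timedelta
from typing import Dict, List, Tuple


def split_hot_cold(rows: List[Dict], date_key: str, today: date, hot_days: int) -> Tuple[List[Dict], List[Dict]]:
    """Split rows into 'hot' (stay in the warehouse) and 'cold' (offload candidates) by age."""
    cutoff = today - timedelta(days=hot_days)
    hot = [row for row in rows if row[date_key] >= cutoff]
    cold = [row for row in rows if row[date_key] < cutoff]
    return hot, cold


def partition_prefix(base_path: str, day: date) -> str:
    """Hive-style year=/month= prefix, so queries filtering on date can prune partitions."""
    return f"{base_path}/year={day.year}/month={day.month:02d}/"


if __name__ == "__main__":
    rows = [
        {"order_id": 1, "order_date": date(2021, 5, 1)},
        {"order_id": 2, "order_date": date(2020, 12, 25)},
    ]
    hot, cold = split_hot_cold(rows, "order_date", today=date(2021, 5, 19), hot_days=90)
    for row in cold:
        # Hypothetical bucket and table path.
        print(row["order_id"], partition_prefix("s3://example-bucket/sales", row["order_date"]))
```

Keeping recent, frequently queried data in Redshift and older data in S3 is the kind of performance and cost tradeoff the talk describes.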

Services:

- Amazon Redshift

- Amazon RDS

- AWS Lake Formation

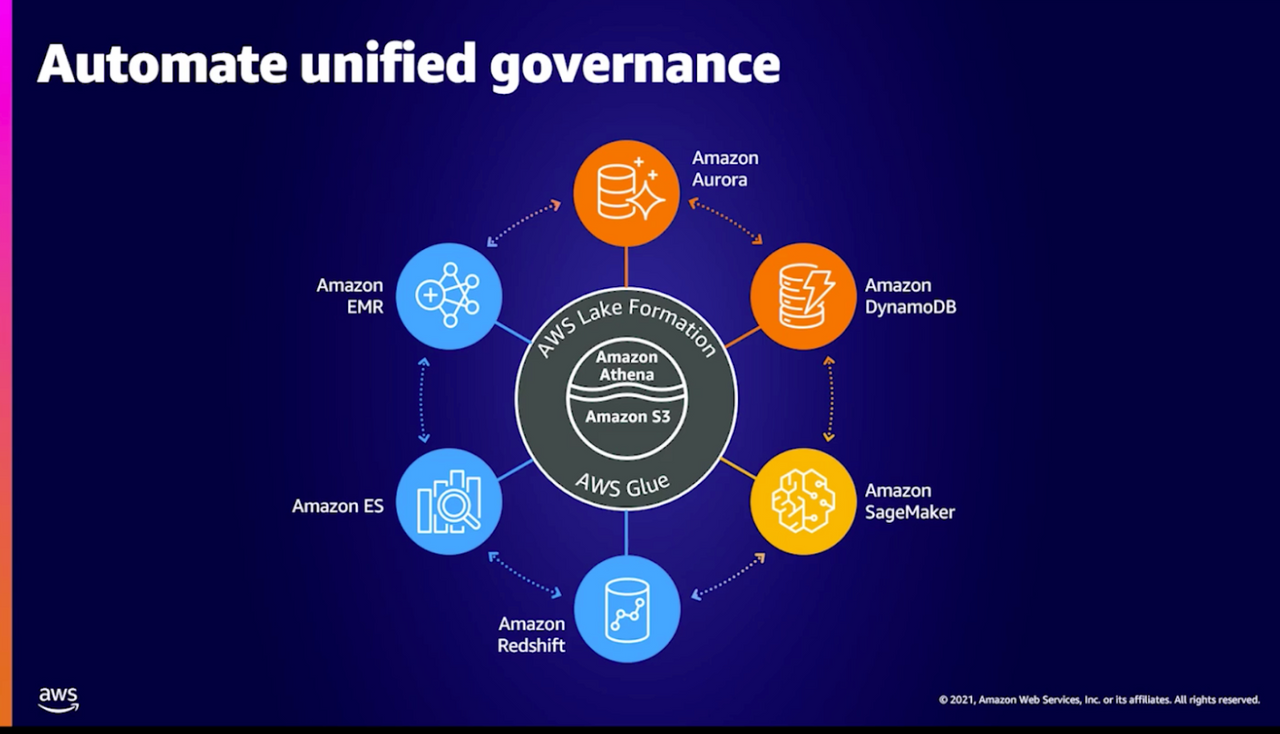

9. Governance is about enabling the usage of trusted data

Watch the talk on demand: Data governance at Scale on AWS

Customers want more value from their data, but they struggle to capture, store and analyse all the data generated by modern businesses. 85% of businesses want to be data-driven, yet only 37% consider themselves successful. To be truly data-driven, good data management and governance are critical. Many existing data governance frameworks are not set up to deal with the rapid growth of data, which is currently estimated to double every two years.

The definition and value of data governance differ widely based on the organisation and the role of users within it. A common outcome, however, is enabling the use of data that can be trusted.

Key Takeaways:

- Some key governance activities include:

  - Curating a decentralised data community to support many interactions between producers and consumers

  - Moving to platform-level governance that focuses only on the most valuable data

  - Automating the implementation of data governance and analytics engagement

- The lakehouse architecture acknowledges that taking a “one size fits all” approach to analytics eventually leads to compromises

- AWS Lake Formation is a powerful tool that can aid in automating and unifying data governance. It achieves this by:

  - Allowing users to centrally define security, governance and auditing

  - Consistently enforcing policies

  - Integrating with security, storage, analytics and ML services

  - Allowing fine-grained permissions on databases, tables and columns
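Conceptually, column-level permissions boil down to a mapping from principal and table to the columns that principal may read, applied before any data is returned. The sketch below models that in plain Python; the principals, table, columns and grant structure are hypothetical, and this is not how Lake Formation is implemented. In Lake Formation, equivalent grants are defined centrally (for example via its GrantPermissions API, which can target specific columns of a table) and enforced by integrated services.

```python
from typing import Dict, List, Set

# Hypothetical grant model: principal -> table -> columns that principal may read.
Grants = Dict[str, Dict[str, Set[str]]]


def readable_columns(grants: Grants, principal: str, table: str) -> Set[str]:
    """Columns of `table` granted to `principal` (empty set if none)."""
    return grants.get(principal, {}).get(table, set())


def select_allowed(grants: Grants, principal: str, table: str, rows: List[dict]) -> List[dict]:
    """Return rows with only the granted columns; deny access entirely if nothing is granted."""
    allowed = readable_columns(grants, principal, table)
    if not allowed:
        raise PermissionError(f"{principal} has no access to {table}")
    return [{col: value for col, value in row.items() if col in allowed} for row in rows]


if __name__ == "__main__":
    grants: Grants = {
        "analyst": {"customers": {"customer_id", "state"}},
        "marketing": {"customers": {"customer_id", "email", "state"}},
    }
    rows = [{"customer_id": 1, "email": "a@example.com", "state": "NSW"}]
    print(select_allowed(grants, "analyst", "customers", rows))  # [{'customer_id': 1, 'state': 'NSW'}]
```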

Services:

- AWS Lake Formation

10. Data analysts: the glue between business and IT

Watch the talk on demand: Reimagine the role of the data analyst

Data analysts drive analytics within organisations. Beyond this, they enable organisations to scale the value of their data by ensuring it is widely used. Analytics come in many forms and can be categorised as descriptive, diagnostic, predictive or prescriptive. The roles and personas that make up a data team keep expanding. Not only are new personas being added, but the overlap in skills and responsibilities is growing.

A data analyst might deploy a machine learning model, and a business user could access data using SQL to perform analytics tasks. Data analytics is becoming increasingly accessible across the organisation.

The key enablers for wide scale data analytics include cloud technologies, low code and no code technologies and the emergence of augmented analytics.

Key Takeaways:

- Small changes can make a massive impact on the bottom line

- Key data-driven decisions can make a big impact on the top line

- Data and analytics are core to innovation and monetising data

- More and more personas are coming into existence, and cloud providers are releasing more and more tools to augment their ways of working

Services:

- Amazon Redshift

- Amazon RDS

- AWS Lake Formation