Top 3 Terraform Testing Strategies for Ultra-Reliable Infrastructure-as-Code

I love Terraform.

Setting aside platform-specific tools like AWS CloudFormation or OpenStack Heat, it's the single most useful open-source tool out there for deploying and provisioning infrastructure on any platform. However, there is one pattern I've noticed since I started using Terraform that worries me:

terraform plan # "looks good; ship it," says the engineer!
terraform apply

This probably isn't a problem if you're deploying to a single rack in a datacenter or a test AWS account with really limited privileges. You can't do too much harm there.

But what if you're deploying with an all-encompassing "production" account or to an entire datacenter? Doesn't this seem pretty risky?

Unit and integration testing solve this problem. "Unit testing, like for apps?" I hear you ask. Yup, that unit testing!

This post will briefly cover what unit and integration testing are, the challenges of applying them to infrastructure and the testing strategies that I've observed in the field. It will also briefly cover deployment strategies for your infrastructure as they relate to testing. While a healthy dose of code is used to drive these talking points, no technical knowledge is required to follow along.

Need a way to visualise how you test and how your tests integrate with one another? Check out the Testing Pyramid.

A Brief Intro to Software Testing

Software developers use unit testing to check that individual functions work as they should. They then use integration testing to test that their feature works well with the application as a whole. Let's use an example to illustrate the difference.

Let's say that I was writing a calculator app in a fictional pseudo-language and wrote a function that adds two numbers. It might look like this:

function addTwoNumbers(integer firstNumber, integer secondNumber) {
    return (firstNumber + secondNumber);
}

A unit test that ensured that this function worked as it should might look something like this:

function testAddTwoNumbers() {
    firstNumber = 2;
    secondNumber = 4;
    expected = 6;
    actual = addTwoNumbers(firstNumber, secondNumber);
    if ( expected != actual ) {
        return testFailed("Expected %s but got %s", expected, actual);
    }
    return testPassed();
}

As you can see, this test focuses solely on checking that addTwoNumbers, well, adds two numbers. While this might seem trivial, plenty of costly failures over the years have come down to simple bugs that a test like this would have caught!
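For readers who prefer something they can run, here is a minimal sketch of the same function and unit test in Ruby, the language used for the Terraform unit tests later in this post. The snake_case names are simply Ruby-style renamings of the pseudocode, and the hand-rolled PASSED/FAILED reporting stands in for a real test framework.

```ruby
# Ruby version of the pseudocode addTwoNumbers function.
def add_two_numbers(first_number, second_number)
  first_number + second_number
end

# A hand-rolled unit test: it checks add_two_numbers in isolation and
# returns "PASSED" or a FAILED message instead of using a test framework.
def test_add_two_numbers
  expected = 6
  actual = add_two_numbers(2, 4)
  return format("FAILED: expected %s but got %s", expected, actual) if expected != actual

  "PASSED"
end

puts test_add_two_numbers
```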

Testing that this function works across the application as a whole is trickier, since doing so depends heavily on the application and its dependencies. For a calculator app, though, integration testing is fairly straightforward:

Calculator testCalculator = new Calculator();
firstNumber = 1;
secondNumber = 2;
expected = 3;
actual = testCalculator.addTwoNumbers(firstNumber, secondNumber);

# add other tests here

Test testAddTwoNumbers {
    if ( expected != actual ) {
        return testFailed("Expected %s, but got %s.", expected, actual);
    }
    return testPassed();
}

As you can see, we create a testCalculator object from the Calculator class and ensure that, amongst other things, it can add two numbers like you'd expect. While the unit test tested this function in isolation from the rest of the Calculator class, the integration test brings it all together to ensure that changes to addTwoNumbers don't break the entire application.
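To make the distinction concrete in runnable form, here is a minimal Ruby sketch. The `evaluate` method is hypothetical (the pseudocode doesn't have it): it stands in for the application's entry point, parses input such as "1 + 2" and delegates to `add_two_numbers`, so the test exercises the addition function through the rest of the class rather than in isolation.

```ruby
# Hypothetical Calculator: evaluate() is the application's entry point and
# delegates to add_two_numbers, so testing evaluate() covers both together.
class Calculator
  def add_two_numbers(first_number, second_number)
    first_number + second_number
  end

  # Only understands "<integer> + <integer>"; anything else raises ArgumentError.
  def evaluate(expression)
    match = expression.match(/\A\s*(-?\d+)\s*\+\s*(-?\d+)\s*\z/)
    raise ArgumentError, "unsupported expression: #{expression}" unless match

    add_two_numbers(Integer(match[1]), Integer(match[2]))
  end
end

# Integration-style test: goes through evaluate() rather than calling
# add_two_numbers directly.
def test_calculator_adds_two_numbers
  calculator = Calculator.new
  expected = 3
  actual = calculator.evaluate("1 + 2")
  return format("FAILED: expected %s, but got %s.", expected, actual) if expected != actual

  "PASSED"
end

puts test_calculator_adds_two_numbers
```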

“I deploy infrastructure, Carlos. Why should I care about this?”

Here's why.

Have you written a script like this...

function increaseDiskSize(string smbComputerName, string driveLetter)
{
    wmiQueryResult = new wmiObject("SELECT * FROM WIN32_LOGICALDISK WHERE DRIVELETTER = \"%s\"", driveLetter)
    if ( wmiQueryResult != null ) {
        # do stuff
    }
}

...and hoped that it worked as it should? Did you re-run it a few times and change some things on that ‘lab’ box that you ran this against until you got the result you wanted? Did you run this during a change window against a ‘production’ machine, only to be surprised that it didn't work as you intended? Did you lose an evening or weekend to this?

If so, then can you spot what's wrong with this pattern?

terraform plan # "looks good; ship it," says the engineer!
terraform apply

It's the same thing!

Terraform Testing Strategy #1: Eye-Balling It

Being able to use terraform plan to see what Terraform will do before it does it is one of Terraform's standout features. It was designed so that practitioners could see what would happen before a run, check that everything looks good and then run terraform apply to make the changes.

Let's say you're deploying a Kubernetes cluster on AWS with a VPC and an EC2 keypair. The code for this might look like this (without workers):

provider "aws" {
  region = var.aws_region
}

resource "aws_vpc" "infrastructure" {
  cidr_block = "10.1.0.0/16"

  tags = {
    Name        = "vpc.domain.internal"
    Environment = "dev"
  }
}

resource "aws_key_pair" "keypair" {
  key_name   = var.key_name
  public_key = var.public_key
}

resource "aws_instance" "kubernetes_controller" {
  count         = 3
  ami           = data.aws_ami.kubernetes_instances.id
  instance_type = var.kubernetes_controller_instance_size
}

If you're eyeballing it, you would run terraform plan, confirm that it does what you'd expect (after providing variables accordingly) and execute terraform apply to apply your changes.

Advantages

Really quick development time

This works well if your scale is small or if you're writing a quick configuration to test something new. The feedback cycle is really quick (write code, run terraform plan, check it, then run terraform apply if it looks good), which lets you churn out new configurations rather quickly.

Shallow learning curve

You can have anybody with any familiarity with Terraform, programming or systems administration do this. The plan tells you (almost) everything that you need to know.

Disadvantages

It's hard to spot mistakes this way

Let's say you read the paper on Borg and, inspired by it, wanted to run five replicas of your Kubernetes master instead of three. You skim the plan, apply it and assume that all is good. Except, wait! You deployed three masters instead of five!

The remediation is harmless in this case: bump the controller count to five and redeploy. However, the remediation would be much more painful if this happened in production and your feature teams had already deployed services onto the cluster.

It doesn't scale well

Eyeballing works well when you're just getting started with Terraform, since at first you're not deploying very much through it, or you're using it for only a small portion of your infrastructure.

Things get much trickier once you begin deploying tens to hundreds of different infrastructure components with tens or hundreds of servers each. Modules illustrate this well. Take a look at this configuration:

# main.tf
module "stack" {
  source = "git@github.com:team/terraform_modules/stack.git"

  number_of_web_servers     = 2
  number_of_db_servers      = 2
  aws_account_to_use        = var.aws_account_number
  aws_access_key            = var.aws_access_key
  aws_secret_key            = var.aws_secret_key
  aws_region_to_deploy_into = var.aws_region
  …
}

It looks pretty straightforward: it tells you where the module is and what its input variables are. So you go to that repository to learn more about this module, only to find...

# team/terraform_modules/stack/main.tf
module "web_servers_in_stack" {
  source = "git@github.com:team/terraform_modules/core/web.git"

  count          = var.number_of_web_servers
  type           = coalesce(var.web_server_type, "IIS")
  aws_access_key = var.aws_access_key
  aws_secret_key = var.aws_secret_key
  …
}

module "db_servers_in_stack" {
  source = "git@github.com:team/terraform_modules/core/db.git"

  count          = var.number_of_db_servers
  type           = coalesce(var.db_server_type, "MSSQLServerExp2016")
  aws_access_key = var.aws_access_key
  aws_secret_key = var.aws_secret_key
  …
}

...more modules! As you can probably guess, trudging through chains of repositories or directories to find out what a Terraform configuration is doing is annoying at best and damaging at worst; performing this exercise during an outage can be costly!

Terraform Testing Strategy #2: Integration Testing First

To avoid the consequences of the approach above, your team might decide to apply your configurations to a sandbox first, use something like serverspec, Goss and/or InSpec to automatically confirm that everything looks good, then tear down the sandbox and collect the results. If all of your tests pass, you promote the deployment to a higher environment. If they fail, you create a defect story and remediate.

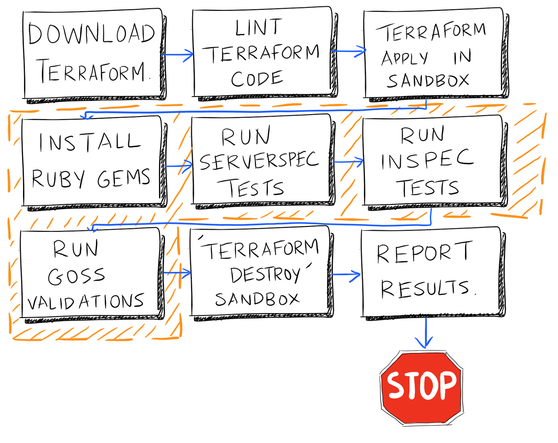

If this were a pipeline in Jenkins or Bamboo CI, it would look something like this:

Figure 1: An example of an infrastructure deployment pipeline with integration tests. Orange border indicates steps executed within sandbox.
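The flow in Figure 1 can be sketched as a small Ruby driver. This is a minimal illustration under stated assumptions, not a Jenkins or Bamboo configuration: it assumes the terraform CLI is on the PATH and that your serverspec, Goss or InSpec checks can be launched by a single command that you pass in. The key design choice is the `ensure` clause, which tears the sandbox down whether the apply or the tests fail, so a broken run doesn't leave paid-for infrastructure behind. The `runner` parameter exists only so the logic can be exercised with a fake instead of real cloud resources.

```ruby
require "open3"

# Default runner: executes argv without a shell and reports whether it exited with status 0.
SHELL_RUNNER = lambda do |argv|
  _output, status = Open3.capture2e(*argv)
  status.success?
end

# Sandbox integration run: provision, test, and always tear down.
# Returns :passed, :tests_failed or :apply_failed.
def run_integration_pipeline(test_command, runner: SHELL_RUNNER)
  provisioned = runner.call(%w[terraform init -input=false]) &&
                runner.call(%w[terraform apply -auto-approve -input=false])
  return :apply_failed unless provisioned

  runner.call(test_command) ? :passed : :tests_failed
ensure
  # Runs even when the apply or the tests fail, so no sandbox is left behind.
  runner.call(%w[terraform destroy -auto-approve -input=false])
end
```

For example, `run_integration_pipeline(%w[rake spec:integration])`, where `spec:integration` is a hypothetical Rake task wrapping your checks. Only a `:passed` result would promote the build to the next environment.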

This is a great step in the right direction, but there are some potential pitfalls to consider.

Advantages

Removes the need for long-lived development environments and encourages immutable infrastructure

A common practice I've seen amongst many IT teams is creating a long-lived development environment or 'sandbox' to test things like tool upgrades or script changes. These environments typically drift so far from production that changes that ‘work in dev’ break in production. This encourages ‘testing in production’, which opens the door to human error and, ultimately, outages.

Well-written integration tests provide enough confidence to do away with this practice completely.

Every sandbox environment created by an integration test is built from the same code that will ultimately become production, so it is a faithful replica of production. This provides a key building block towards infrastructure immutability, whereby any change to production becomes part of a hotfix or future feature release, and no manual changes to production are allowed or even needed.

To be clear, this doesn't do away with development environments for feature teams (integration tests in software applications can remove the need for those as well, but that is beyond the scope of this post).

Documents your infrastructure

You no longer have to wade through chains of modules to make sense of what your infrastructure is doing. If you have 100% test coverage of your Terraform code (a caveat explained under Disadvantages below), your tests tell the entire story and serve as a contract to which your infrastructure must adhere.

Allows for version tagging and "releases" of your infrastructure

Because integration tests exercise your entire system cohesively, a passing run is a good signal to tag that version of your Terraform code with git tag or similar. This can be useful for rolling back to previous states (especially when combined with a blue/green deployment strategy) or for letting developers within your organization test how their features behave across iterations of your infrastructure.

They can serve as a first line of defense

If your integration tests are mature enough, they can be used as an early warning of unexpected third-party errors.

To illustrate this, let's say that you created a pipeline in Jenkins or Bamboo that runs integration tests against your Terraform infrastructure twice daily and pages you if an integration test fails.

A few days later, you receive an alert saying that an integration test failed. Upon checking the build log, you see an error from Chef saying that it failed to install IIS because the installer could not be found. After digging some more, you discover that the URL that was provided to the IIS installation cookbook has expired and needs an update.

After cloning the repository in which this cookbook resides, updating the URL, re-running your integration tests locally and waiting for them to pass, you submit a pull request to the team that owns this repository asking them to merge it.

Congratulations! You just saved yourself a work weekend by fixing your code proactively instead of waiting for it to surface come release time.

Disadvantages

It can get quite slow

Depending on the number of resources your Terraform configuration creates and the number of modules they reference, a full Terraform run can take a long time. This can create very slow feedback loops whereby an engineer makes a change, waits for integration testing to finish, finds out that the change broke something, then repeats. If she does this enough times, she will eventually find ways of working around these tests.

It can also get quite costly

Performing a full integration test within a sandbox implies that you mirror your entire infrastructure (albeit at a smaller scale with smaller compute sizes and dependencies) for a short time. Doing this to confirm simple changes can add up, especially if that mirror is sufficiently large.

This isn't as much of a concern for those running their platforms on bare metal, as they can apply their Terraform configurations against cold hardware and wipe it as part of terraform destroy. However, this feeds into the previous point, as wiping and staging for the next test run can take some time.

Obtaining code coverage is hard

Unlike most programming languages or mature configuration management frameworks like Chef, Terraform doesn't yet have a framework for obtaining a percentage of configurations that have a matching integration test. This means that teams that choose to embark on this journey need to be fastidious about maintaining a high bar for code coverage and will likely write tools themselves that can do this (such as scanning all module references and looking for a matching spec definition).
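A minimal sketch of such a home-grown check, under a hypothetical naming convention: each `module "foo"` block should have a matching `spec/foo_spec.rb`. It finds module blocks with a regex rather than a real HCL parser, so treat it as a starting point rather than a complete coverage tool.

```ruby
# Lists the names of every `module "<name>"` block in a set of Terraform sources.
# tf_sources maps a file path to its contents. In Ruby, ^ anchors at each line start.
def module_names(tf_sources)
  tf_sources.values.flat_map { |src| src.scan(/^\s*module\s+"([^"]+)"/).flatten }.uniq
end

# Hypothetical convention: module "foo" counts as covered when spec/foo_spec.rb exists.
def module_coverage(tf_sources, spec_files)
  names = module_names(tf_sources)
  uncovered = names.reject { |name| spec_files.include?("spec/#{name}_spec.rb") }
  percent = names.empty? ? 100.0 : 100.0 * (names.size - uncovered.size) / names.size
  { percent: percent.round(1), uncovered: uncovered }
end

sources = { "main.tf" => %(module "stack" {\n  source = "./stack"\n}\n) }
p module_coverage(sources, ["spec/stack_spec.rb"])
```

Against a real repository, you might build `tf_sources` from `Dir.glob("**/*.tf")` and `spec_files` from `Dir.glob("spec/*_spec.rb")`, and fail the build when `percent` drops below a threshold your team agrees on.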

Tools

kitchen-terraform is the most popular integration testing framework for Terraform at the moment. It builds on the same Test Kitchen that you might have used with Chef: you define how Terraform should behave in .kitchen.yml and let Kitchen take care of the rest.

Goss is a simple validation/health-check framework that lets you define in YAML what a system should look like and then either validate the system against that definition or serve an HTTP health endpoint (/healthz by default) that reports whether the system passes or fails. It is heavily inspired by serverspec, which accomplishes much the same thing (minus the health-check web server) and is built on Ruby and RSpec.

Terraform Testing Strategy #3: Unit Tests

If you're a software developer on a Platform or Infrastructure team and are wondering "Well, I would usually write unit tests before writing integration tests. Is that a thing with Terraform?", thanks for reading this far!

As said before, integration testing lets you test interactions between components across an entire system. Unit testing, on the other hand, lets you test those individual components in isolation. Unit tests are meant to be quick, easy to read and easy to write. To that end, good unit tests can be run from anywhere, with or without a network connection.

Continuing with our Kubernetes cluster example, here is what a simple unit test suite would look like for our resources defined above with RSpec, a popular testing framework for Ruby:

# spec/infrastructure/core/kubernetes_cluster_spec.rb
require 'spec_helper'
require 'set'

describe "KubernetesCluster" do
  before(:all) do
    @vpc_details = $terraform_plan['aws_vpc.infrastructure']
    @controllers_found =
      $terraform_plan['kubernetes-cluster'].select do |key, value|
        key.match /aws_instance\.kubernetes_controller/
      end
    @coreos_amis = obtain_latest_coreos_version_and_ami!
  end

  context "Controller" do
    context "Metadata" do
      it "should have retrieved EC2 details" do
        expect(@controllers_found).not_to be_nil
      end
    end

    context "Sizing" do
      it "should be defined" do
        expect($terraform_tfvars['kubernetes_controller_count']).not_to be_nil
      end

      it "should be replicated the correct number of times" do
        expected_number_of_kube_controllers =
          $terraform_tfvars['kubernetes_controller_count'].to_i
        expect(@controllers_found.count).to eq expected_number_of_kube_controllers
      end

      it "should use the same AZ across all Kubernetes controllers" do
        # We aren't testing that these controllers actually have AZs
        # (it can be empty if not defined). We're solely testing that
        # they all share the same one.
        azs_for_each_controller = @controllers_found.values.map do |controller_config|
          controller_config['availability_zone']
        end
        deduplicated_az_set = Set.new(azs_for_each_controller)
        expect(deduplicated_az_set.count).to eq 1
      end
    end

    it "should be fetching the latest stable release of CoreOS for region " \
       "#{ENV['AWS_REGION']}" do
      @controllers_found.each do |_kube_controller_resource_name, this_controller_details|
        expected_ami_id = @coreos_amis[ENV['AWS_REGION']]['hvm']
        actual_ami_id = this_controller_details['ami']
        # Compare the planned AMI against the expected one (not against itself).
        expect(actual_ami_id).to eq expected_ami_id
      end
    end

    it "should use the instance size requested" do
      @controllers_found.each do |_kube_controller_resource_name, this_controller_details|
        actual_instance_size = this_controller_details['instance_type']
        expected_instance_size =
          $terraform_tfvars['kubernetes_controller_instance_size']
        expect(actual_instance_size).to eq expected_instance_size
      end
    end

    it "should use the key provided" do
      @controllers_found.each do |_kube_controller_resource_name, this_controller_details|
        actual_ec2_instance_key_name = this_controller_details['key_name']
        expected_ec2_instance_key_name =
          $terraform_tfvars['kubernetes_controller_ec2_instance_key_name']
        expect(actual_ec2_instance_key_name).to eq expected_ec2_instance_key_name
      end
    end
  end
end

There are a few notable things to point out with this test:

It’s easy to read

We use natural English-like statements to express what the test is, what it's doing and what it should do. If we read this snippet from the above...

describe "KubernetesCluster" do
  before(:all) do
    @vpc_details = $terraform_plan['aws_vpc.infrastructure']
    @controllers_found =
      $terraform_plan['kubernetes-cluster'].select do |key, value|
        key.match /aws_instance\.kubernetes_controller/
      end
    @coreos_amis = obtain_latest_coreos_version_and_ami!
  end

  context "Controller" do
    context "Metadata" do
      it "should have retrieved EC2 details" do
        expect(@controllers_found).not_to be_nil
      end
    end

...we can see that we're describing our Kubernetes cluster, executing some statements before all other tests run, then running a series of controller-related tests, with this test focussing on metadata belonging to that Kubernetes controller. We expect it to have retrieved EC2 details, namely the Kubernetes controllers found in our Terraform plan.

It’s quick

Once we generate our Terraform plan (more on how to do this soon), this test runs in less than a second. There is no sandbox to query or AWS API calls to make; we're simply comparing what we wrote against what Terraform generated from that.
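To show how little machinery this needs, here is a minimal plain-Ruby sketch of two of the controller checks above (count and AZ), without RSpec. It assumes the plan has already been flattened into a hash of resource address => planned attributes and that tfvars values are strings; both shapes are assumptions for illustration, not necessarily what this post's spec_helper produces. The example reuses the three-versus-five masters mistake from earlier.

```ruby
require "set"

# Plain-Ruby version of two of the controller checks above.
# `plan` is assumed to map resource addresses to planned attributes;
# `tfvars` maps variable names to string values.
# Returns failure messages; an empty array means every check passed.
def controller_failures(plan, tfvars)
  controllers = plan.select do |address, _attrs|
    address.match?(/\Aaws_instance\.kubernetes_controller(\[\d+\])?\z/)
  end

  failures = []
  expected_count = tfvars.fetch("kubernetes_controller_count").to_i
  if controllers.size != expected_count
    failures << "expected #{expected_count} controllers, found #{controllers.size}"
  end

  azs = controllers.values.map { |attrs| attrs["availability_zone"] }.to_set
  failures << "controllers span #{azs.size} AZs" if azs.size > 1
  failures
end

# Three planned controllers, but the tfvars ask for five.
example_plan = (0..2).to_h do |i|
  ["aws_instance.kubernetes_controller[#{i}]", { "availability_zone" => "us-east-1a" }]
end
p controller_failures(example_plan, { "kubernetes_controller_count" => "5" })
# => ["expected 5 controllers, found 3"]
```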

It’s easy to write

With a few caveats (see the Disadvantages section), all of these tests use matchers straight from RSpec's documentation. There are no hidden methods or deeply-nested pitfalls unless you defined them explicitly beforehand.

So why should we unit test Terraform code?

Advantages

It enables test-driven development

Test-driven development is a software development pattern whereby every method in a feature is written after writing a test describing what that feature is expected to do. This ensures that every method that does something impactful has a test describing it. This is only possible if your unit tests are quick, as the feedback loop between running the test and writing code that makes it pass has to be quick, usually on the order of a few seconds.

What this means for Terraform configuration is that I can write something like this...

describe "VPC" do
  context "Networking" do
    it "should use the right CIDR" do
      expected_cidr_block = $terraform_tfvars['cidr_block']
      expect($terraform_plan['aws_vpc.vpc']['cidr_block']).to eq expected_cidr_block
    end
  end
end

...before I lay down my main.tf, run rake unit_test (or similar) to see it fail, then continue writing my main.tf until this test passes. Without even thinking about it, I (a) documented my infrastructure and (b) documented "what good looks like" in this context.

Faster than integration tests

Terraform unit tests work entirely off of your calculated Terraform plan. No additional compute is required to run them. This means that you'll catch errors in your Terraform code earlier and more often before spending money on a sandboxed testing environment.

Smaller than integration tests

Because unit tests focus on a single implementation detail (such as ensuring that your VPC CIDR is correct or that your AWS security group matches its specification), they are cheap enough to write in large numbers. Combined with the integration tests described in the previous section, this approach makes for a very robust platform that can significantly reduce opportunities for human error.

Disadvantages

To be clear, unit tests complement integration tests. They do not replace them. They also come with their own disadvantages:

There are very, very few tools for reliably unit testing Terraform

As you'll see in the ‘Tools’ section below, there aren't many out-of-the-box options available for this. Consequently, you will most likely need to roll your own solution.

TDD is slower at first than just writing code

Many software developers are familiar with writing code first and testing later. Fewer, though a growing number, are used to writing failing tests first and making them pass with code. Making the transition can feel like a fruitless exercise, as you can spend a lot of time just getting a test to run (especially without a good testing framework) or making sure it actually tests the right thing.

The catch is that the time spent making sure your Terraform code works correctly from the start pays for itself compared to debugging complicated Terraform resource relationships later on. Instilling a test-first culture goes a long way toward simplifying complex code in the long term.

Learning curve for first-time infrastructure developers can be high

If you're like me, you came into infrastructure and the DevOps mindset as a systems administrator. Tools with high-level languages, like Chef and Terraform, have helped make this transition easier. However, given the lack of good Terraform unit test tooling, some underlying knowledge of a programming language is required. My solution uses a good amount of Ruby, for example. While mature infrastructure teams should aim to build strong programming skills, this can be a little daunting to those who are just getting started.

Tools (or lack thereof)

Unit testing Terraform is still in early days. Nevertheless, there are some options available for those looking to pursue this.

I use unit testing heavily when deploying my own infrastructure. While it's still a work in progress, I'm aiming for Terraform code that can provision a VPC, a five-node Kubernetes cluster (three masters, two workers) and a Route53 zone. This will ultimately host my blog, personal website and tools that I've long been procrastinating on. I'm using RSpec for this and aiming for a provider-agnostic approach (i.e. something that you can use to test AWS resources just as well as OpenStack resources).

The area to focus on is the spec folder, namely spec/spec_helper.rb. This parses my Terraform variables file (which my Jenkins pipeline, defined in the Jenkinsfile, retrieves from S3 automatically depending on the environment being provisioned) and uses it to create a Terraform plan from a dummy state file that's written to disk. I use a dummy state file to ensure that Terraform always generates a complete plan on every test run. I figured that it makes little sense to test whether Terraform picks up changes, as accounting for this would complicate my tests and is already covered by the testing done against Terraform itself.
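As a rough idea of the variable-loading step only, here is a minimal sketch under a big assumption: the tfvars file contains nothing but flat `key = value` lines. Real tfvars files are HCL and can hold lists, maps and heredocs, which this sketch silently skips, so a proper HCL parser is the safer choice for anything beyond simple scalars. Values are kept as strings, matching the `.to_i` calls in the specs above.

```ruby
# Minimal parser for flat terraform.tfvars files.
# Assumption: only `key = "string"`, `key = number` or `key = true/false` lines;
# lists, maps, heredocs and trailing comments are NOT supported.
def parse_simple_tfvars(text)
  text.each_line.with_object({}) do |raw_line, vars|
    line = raw_line.strip
    next if line.empty? || line.start_with?("#", "//")

    match = line.match(/\A([A-Za-z_][\w-]*)\s*=\s*(.+)\z/)
    next unless match # skip anything this sketch doesn't understand

    value = match[2]
    # Strip surrounding double quotes; escape sequences are not handled.
    value = value[1..-2] if value.length >= 2 && value.start_with?('"') && value.end_with?('"')
    vars[match[1]] = value
  end
end

example = <<~TFVARS
  # Values for the dev environment
  kubernetes_controller_count = 5
  kubernetes_controller_instance_size = "t3.medium"
TFVARS
p parse_simple_tfvars(example)
```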

If you've ever saved a tfplan onto disk, you know that parsing it can get complicated. The file itself is a binary file, so parsing it directly is off the table. While you can use terraform show to render a human-readable version of it, accounting for whitespace, multiple copies of resources, dependencies and more makes this parsing tricky. The only tool I could find that reliably turned plans into a common machine-readable format was Palantir's tfjson, which converts them to JSON. (I tried to update its Terraform libraries to a more recent version but ran into a lot of problems. This is why my Rakefile downloads both a newer and an older version of Terraform.)
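Since Terraform 0.12, `terraform show -json` can render a saved plan as JSON directly, which may remove the need for tfjson on newer versions. Here is a minimal sketch, assuming a plan saved with `terraform plan -out=tfplan` and rendered with `terraform show -json tfplan > tfplan.json`, that flattens the `planned_values` section (root module plus nested `child_modules`) into a hash of resource address => planned attributes that specs can query.

```ruby
require "json"

# Recursively collects { address => planned attribute values } from one module
# entry in the `planned_values` section of `terraform show -json` output.
def flatten_planned_module(mod)
  own = (mod["resources"] || []).to_h { |r| [r["address"], r["values"]] }
  (mod["child_modules"] || []).reduce(own) do |acc, child|
    acc.merge(flatten_planned_module(child))
  end
end

# Parses the JSON text and flattens everything under planned_values.root_module.
def planned_resources(plan_json)
  root = JSON.parse(plan_json).dig("planned_values", "root_module") || {}
  flatten_planned_module(root)
end

# Only runs if a rendered plan is present in the current directory.
if File.exist?("tfplan.json")
  planned_resources(File.read("tfplan.json")).each_key { |address| puts address }
end
```

Resources inside modules keep their full addresses (for example `module.<name>.aws_instance.<name>[0]`), so specs can select them with a regex much like the `select` in the `before(:all)` block above.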

If you have suggestions on how to make it better, please feel free to send me a pull request!

terraform-compliance, written by my colleague Emre Erkunt, is another good tool for unit testing Terraform code. While it aims for an even higher level of testing abstraction called behavior-driven development (BDD), it can be used to write unit tests against AWS resources as well.

A cleaner, AWS-specific implementation of my approach. This is another great drop-in solution to consider if you're not looking for a provider-agnostic solution.

In Conclusion: The Complete Deployment Pipeline

Using integration and unit testing to develop and iterate your infrastructure-as-code codebase enables you to create an environment deployment pipeline that looks something like this:

Figure 2: Illustration of a complete environment deployment pipeline.

Unit tests are executed locally during the first stage of the pipeline. Terraform makes it easy to see what it's going to do by way of the Terraform plan. We can use this to create unit tests that test the behavior of individual modules and resources and establish a contract to which they must adhere. This can be enforced further with Git or SVN hooks that run the unit tests automatically before changes are pushed, and with CI checks on any pull request that merges into the master branch.

Once local unit tests pass, the next stage is to execute integration tests using serverspec or kitchen-terraform. While this can be done locally on the developer's workstation, we recommend doing it on a CI server whenever a pull request is opened, to guarantee that the code submitted in it passes. The integration tests will use the Terraform code and any provisioner dependencies contained therein to create an exact replica of a live environment, run tests against live instances, then tear it down and report their results.

An environment ‘release’ follows passing integration tests and merging the pull request into master. Because your Terraform configurations have already been tested locally and through CI, the ‘release’ is as straightforward as tagging the merge commit with a version number and using a deployment strategy to move inbound traffic away from the old environment and into the new one. Depending on the application and its usage, this might involve re-pointing your DNS records and load balancers at the new environment version instead of the old one (also known as blue-green deployment) or shifting traffic gradually from the old environment onto the new one (also known as a canary deployment, with the new environment being the canary).
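To make the difference between the two strategies concrete, here is a hypothetical helper that lists the traffic split (percent of requests going to the old and new environments) each strategy steps through. The canary percentages are illustrative defaults rather than a recommendation; actually shifting the traffic (DNS weights, load balancer rules) and checking health between steps is left to your own tooling.

```ruby
# Hypothetical helper: the sequence of traffic weights (percent of requests sent
# to the old and new environments) that each release strategy steps through.
def traffic_steps(strategy, canary_percentages: [5, 25, 50, 100])
  case strategy
  when :blue_green
    # Blue-green: flip all traffic at once, e.g. by re-pointing DNS or the load balancer.
    [{ old: 0, new: 100 }]
  when :canary
    # Canary: shift traffic gradually; the caller is expected to check health between steps.
    canary_percentages.map { |pct| { old: 100 - pct, new: pct } }
  else
    raise ArgumentError, "unknown strategy: #{strategy.inspect}"
  end
end

traffic_steps(:canary).each { |step| puts "old=#{step[:old]}% new=#{step[:new]}%" }
```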

Following this approach will enable your team to update your infrastructure more reliably, fall back to a known working version more quickly and iterate on new features with significantly more confidence.