Cloud Native Architecture and Development: What They Are and Why They Matter

Cloud-native is the biggest enabler in a generation.

Done well, it lets the developer simply develop. To focus solely on what really matters: creating software that your customer wants (loves!) to use.

Everything else they need just happens.

How so?

This nine-thousand-word guide to cloud-native architecture and development will tell you all you need to know about what cloud-native is and why it matters. It has three parts:

- Cloud-native architecture: what is it and why does it matter?

- Cloud-native development: how does the cloud change how you develop?

- Cloud-native principles and practices: 23 killer tips for how to go cloud-native

Let's get straight into it.

Cloud-Native Architecture: What It Is and Why It Matters

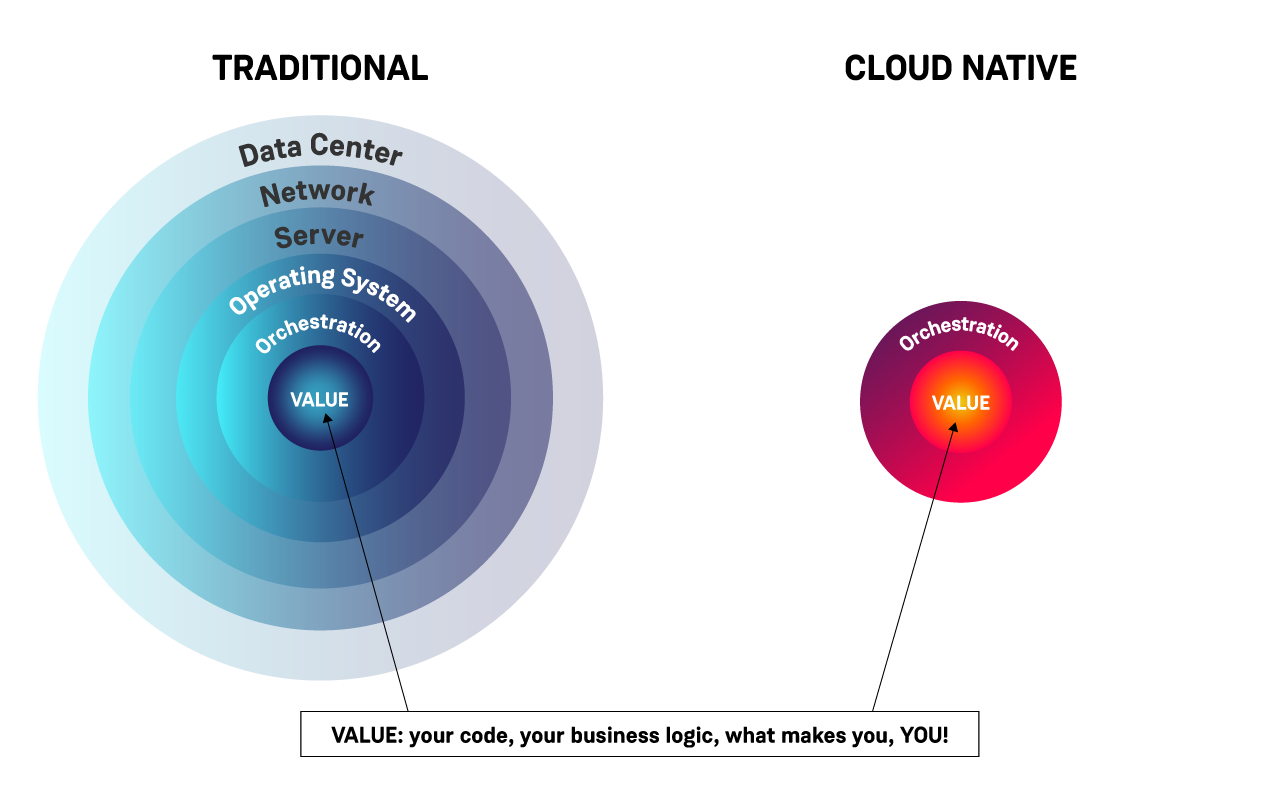

Cloud-native architecture fully takes advantage of the distributed, scalable, flexible nature of the public cloud to maximise your focus on writing code, creating business value and keeping customers happy.

Going cloud-native means abstracting away many layers of infrastructure—networks, servers, operating systems etc.—allowing them to be defined in code.

As much infrastructure as you need (servers, databases, operating systems, all of it!) can be spun up and down in seconds by running a quick script.

All the developer has to worry about is orchestrating all the infrastructure they need (via code) and the application code itself.

Why Is Cloud-Native Architecture Important?

Cloud-native removes constraints, shortening the path to business value.

There is a seldom-spoken truth that bears being spoken: the only thing that really matters is the stuff that your customer is interacting with.

The less you have to worry about anything else beyond the code that gives life to your business, the better.

And that’s what cloud-native is about.

Let’s illustrate this with an example.

Let’s say that user feedback for your mobile banking app suggests that users really want a bill-splitting function.

In the old world, you might first start by wondering how you would deploy it, what networking you need, what you’re going to write it in...and slowly what really matters (THE CODE! THE CUSTOMER!) slides into the background as niche technical concerns cloud the horizon.

In the new world, a decent cloud-native setup removes the need for servers, operating systems, orchestration layers, networking...putting your code front and centre of everything that you do.

You (or your team) can try things out straight away. Test hypotheses. Experiment.

Maybe this kind of database is best for the bill-split feature? Or that one? What about incorporating a machine learning tool?

Any kind of ‘what if we did this…?’ question can be (almost) instantly tested in practice, giving you a data-driven answer that you can build on.

When you’re constrained by infrastructure, your ideas are on lock-down. When you want to test something out, you instantly hit a brick wall: hardware!

And whatever solution you come up with will always be a compromise based on the limitations of what you have in your data centre. But compromises don’t always win customers!

When the cloud removes those constraints, rather than focusing on technology you can focus on the customer experience. Think about products, not pipelines. Fix errors in your app, not your database replication.

And all of this means that you can get from idea to app in the quickest possible time.

In a cloud-native world, your ideas gather feedback, not dust!

But there are many benefits to cloud-native architecture beyond the simple ‘it lets you focus on your code’. And that’s what we turn to now.

The Benefits of Cloud-Native Architecture: Unlocking Your Ideas

Let me introduce Tara.

Tara is a teenage developer-in-training. Because she’s growing up in a cloud-native world, she will become an awesome dev without ever having to know how a server works.

When she learns what people had to go through before the advent of cloud-native to get their work done, she will be amazed.

It would be equivalent to looking back and wondering how the hell they managed to land on the moon when the in-flight computer could only be programmed with punch cards.

Tara is going to take us through how cloud-native architecture unlocks your ideas!

1. Removes Constraints to Rapid Innovation

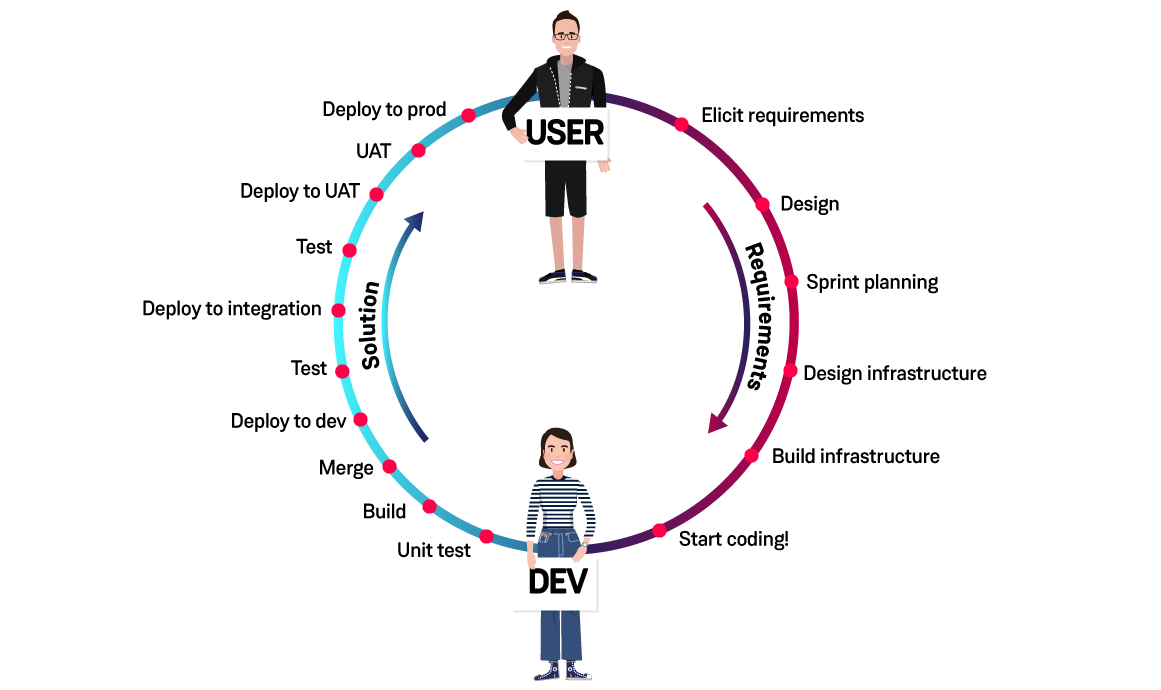

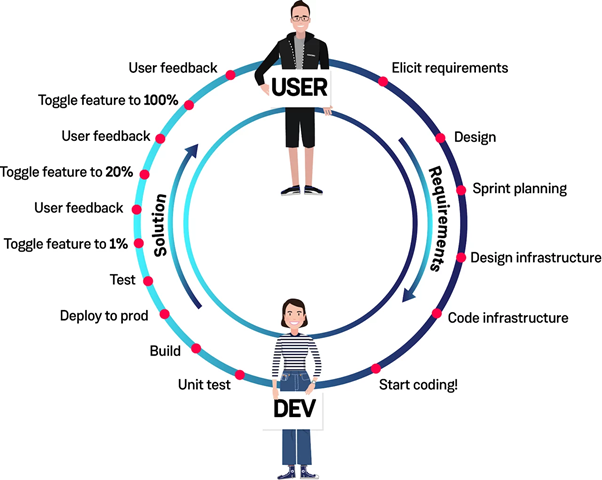

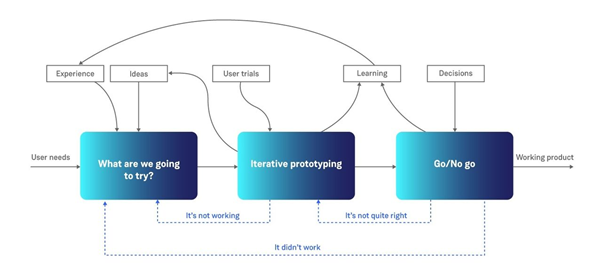

This is the circle of (dev) life. At the top is the user. At the bottom is Tara and her team.

The faster Tara can go round this circle—delivering features to users and getting their feedback—the faster ideas can be turned into customer-pleasing reality.

By removing time-consuming way stations from this circle, cloud-native allows Tara to whizz round much quicker than was previously possible.

There are other tricks as well. One example is traffic shifting: this allows you to ‘toggle’ (i.e. release) a given feature to a small percentage of users, gain feedback and then slowly expand access to more and more users.

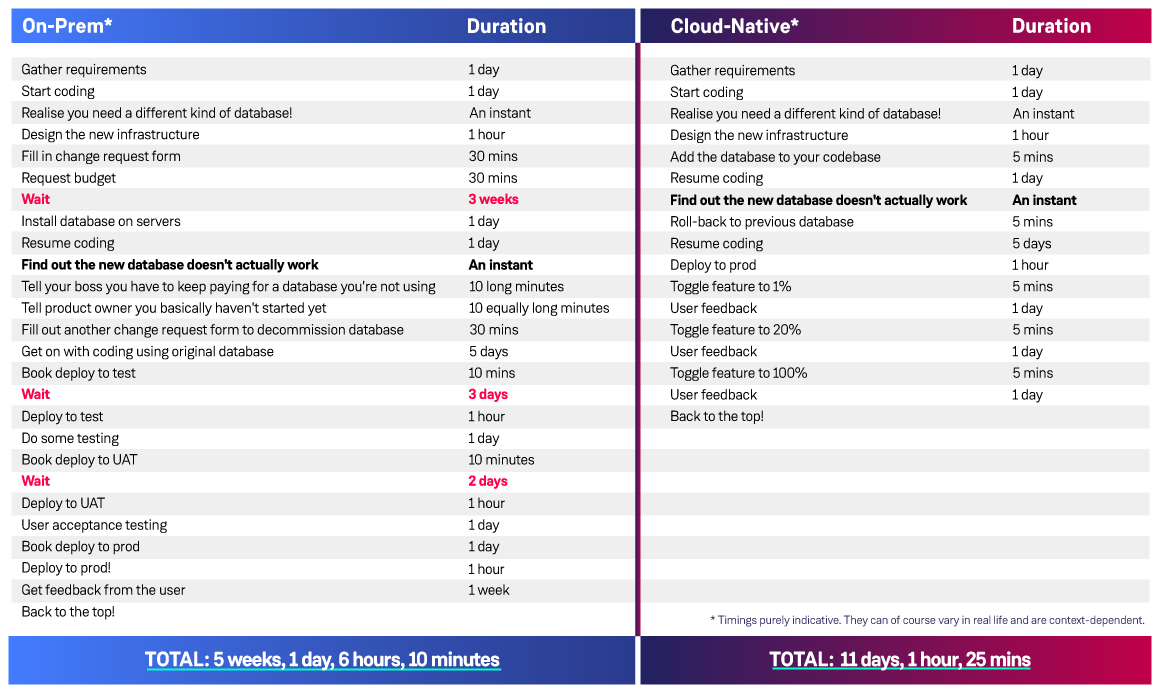
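A common way to implement this kind of gradual rollout is to hash each user into one of 100 stable buckets and switch the feature on for every bucket below the current percentage. The sketch below is a minimal, provider-agnostic illustration of that idea. The function names and the `bill-split` feature key are hypothetical, not any cloud service's API.

```python
import hashlib


def rollout_bucket(user_id: str, feature: str) -> int:
    """Map a (feature, user) pair to a stable bucket between 0 and 99."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    # Interpret the first 8 bytes as an unsigned integer, then reduce to 0-99.
    return int.from_bytes(digest[:8], "big") % 100


def is_enabled(user_id: str, feature: str, percent: int) -> bool:
    """A user sees the feature if their bucket is below the rollout percentage."""
    return rollout_bucket(user_id, feature) < percent


users = [f"user-{n}" for n in range(10_000)]
for percent in (5, 25, 100):
    enabled = sum(is_enabled(u, "bill-split", percent) for u in users)
    print(f"{percent}% rollout: {enabled} of {len(users)} users")
```

Because a user's bucket never changes, anyone who saw the feature at 5% still sees it at 25%. Widening the rollout never takes the feature away from someone who already had it.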

Remember our bill-splitting example from above? The difference in time-to-market between being able to test things instantly and having to wait to procure a new database (for example) REALLY adds up.

Here’s an indicative sketch of the different timescales. (And yes, this is a bit contrived and not necessarily true everywhere, but it illustrates the point.)

In this example, after a few days Tara and her team have deployed a prototype of the bill-splitting feature and are gathering feedback. In the old world, she would still have been waiting for a server!

Try to force Tara to wait three weeks for a new server to try out an idea and she won’t be very impressed. She might even go and build a competing challenger bank in the meantime.

2. Brings You Closer to the User

Often developers don’t understand what users really want.

And every process you put between users and developers is a place where the original vision can go astray.

The closer you can put your dev team to the customer, the better the product will be. As we’ve seen, cloud-native’s key benefit is just that: eliminating non-customer-focused activities.

This goes deeper, though! Because of the move towards rapid innovation, customers now expect rapid delivery of MVPs that they know are going to be improved over time. People don’t expect new apps to have a host of functionality and a bug-free experience straight away, but they do expect continuous improvements over time.

Your customers also want small, rapid releases. These are no longer the preserve of mystical Netflix engineers or the people who edit CIO.com.

This shift in customer expectations is probably permanent. What this means for you is that prototype is now as important as product.

And because cloud-native architecture lets you roll out features to a small percentage of your users, you can test prototypes with actual customers in real-life situations. Not testers in a UAT environment!

This way, Tara can get irrefutable proof that users like (say) her new bill-splitting feature before she invests in developing the idea further (or ditching it).

3. Very Cheap and Easy Access to New Tools and Services

The barrier to entry for cloud-native infrastructure (cost- and effort-wise) is about as low as you can go.

Firstly, in the cloud you only pay for what you use and don’t have to buy anything upfront.

(In fact, some cloud providers let you get started for free by offering some features at no cost. They have helpful pricing calculators as well.)

Secondly, these cloud providers are operating at insane economies of scale, which means you can access cutting-edge infrastructure and tooling at a fraction of what it would cost to deploy these in your own data centre.

Thirdly, once you have done the initial account setup, accessing any of the cloud providers’ massive range of infrastructure or tooling is approximately as complicated as ordering a pizza from Just Eat (choose your ingredients => order => consume).

These advantages mean that you can try out new instance types, databases, tools, whatever you need with minimal cost, risk and effort.

Exactly what you need for rapid innovation and experimentation.

If Tara wants to see if database X or Y works best...she is free to just try them! Or if she wants to test a quick script? Whack it on AWS Lambda for five minutes and see. What about seeing what value machine learning could add? As soon as she has the idea she can spin up Amazon SageMaker and play around.

Try to convince Tara that she needs to go through a lengthy procurement process to buy a whole range of hardware and tooling that her team now has to manage forever...just to test out an idea?

Hah.

More Benefits of Cloud-Native Architecture: Transparency, Security and Event-Based Fun in the Cloud

A major difference between the cloud and on-premises is that in the cloud—by default—everything that happens or changes is ‘stored’ as events that are transparently logged.

You know exactly what went on (or is going on), when and where.

This has some interesting consequences!

1. Event-Based Fun Enables Automated Workflows

The cloud is event-based by default.

And this lets you set up awesomeness-inducing if-this-then-that workflows. For example, when you search for “Cloud-Native for Dummies” on Amazon, that search instantly triggers not only the results but also an update to your recommendations, your search history, the ads you see and so on. This is all event-based magic.

The clincher is this: all the events can be globally available!

So if a team posts an event (e.g. someone searches for something) then other people in other teams can start using those events as triggers for features in their own domain. So one event can trigger multiple reactions, each built by different teams across the business for their own purposes. All entirely independently of each other.

Much wizardry.

To do this on-prem, Tara would need something akin to three different Kafka instances and a ton of finicky infrastructure.

In cloud-native land Tara just says: when event X happens, do Y. She doesn’t have to worry about anything else.
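On AWS, a managed event bus such as Amazon EventBridge plays this role: you define rules that match events and route them to targets. The toy in-memory version below only illustrates the "when event X happens, do Y" idea with several independent subscribers. The `EventBus` class and its exact-match patterns are simplifications for illustration, not EventBridge's API or pattern syntax.

```python
from typing import Any, Callable

Event = dict[str, Any]
Handler = Callable[[Event], None]


class EventBus:
    """Toy in-memory event bus: each rule pairs a pattern with a handler."""

    def __init__(self) -> None:
        self._rules: list[tuple[dict[str, Any], Handler]] = []

    def on(self, pattern: dict[str, Any], handler: Handler) -> None:
        # "When event X happens, do Y": register a rule.
        self._rules.append((pattern, handler))

    def publish(self, event: Event) -> int:
        # Deliver the event to every rule whose pattern fields all match exactly.
        matched = 0
        for pattern, handler in self._rules:
            if all(event.get(key) == value for key, value in pattern.items()):
                handler(event)
                matched += 1
        return matched


recommendations: list[str] = []
history: list[str] = []
bus = EventBus()
# Two teams react to the same event, independently of each other.
bus.on({"type": "search"}, lambda e: recommendations.append(e["query"]))
bus.on({"type": "search"}, lambda e: history.append(e["query"]))
bus.publish({"type": "search", "query": "cloud-native for dummies"})
print(recommendations, history)
```

Neither subscriber knows the other exists; adding a third team's reaction is just one more `on(...)` rule.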

2. Transparency and Observability Allow for Rapid Problem Solving

A server running on-prem is a black box.

Cloud-native tech, by contrast, automatically logs what’s going on and gives you nice metrics, colourful dashboards and automated notifications.

So Tara can create a serverless function, run it and be alerted via Slack or SMS if something goes wrong.

And this is just out of the box!

This opens up epic possibilities.

If you write crappy code (no amount of tech can save you from that…), it can alert you to problems so you can fix them before your users even notice.

If you’re a bank and your customers can’t transfer money, the dev team will know there is a problem before the call centre gets overwhelmed with angry messages. Your deployment can be released to just a handful of users to test it transparently and automatically roll back if things go awry, affecting only a couple of customers.

It can even help you take a data-driven or data science approach to business decisions. Say 50% of customers going through Tara’s e-commerce checkout don’t actually complete the transaction. If you suspect that (say) the position of the button is causing a drop-off, you can easily run a split test, log how many people check out and work out the best solution.

This is HUGE from a business perspective. And it’s so simple from a technical perspective (so long as you go cloud-native).
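Working out the best solution from a split test usually means checking that the difference between the two variants is bigger than chance would explain. One standard way to do that for conversion rates is a two-proportion z-test. The sketch below uses the normal approximation (reasonable for large samples), and the numbers are hypothetical, not real checkout data.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare conversion rates of variants A and B.

    Returns (z, p_value), where p_value is two-sided, using the normal
    approximation with a pooled conversion rate.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # everyone (or no one) converted: no evidence of a difference
    z = (p_b - p_a) / se
    # erfc(|z| / sqrt(2)) is the two-sided tail probability of a standard normal.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical split test: button in the old position (A) vs. the new position (B).
z, p = two_proportion_z_test(conv_a=500, n_a=1000, conv_b=550, n_b=1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

Here B converts at 55% against 50% for A, and the p-value comes out at roughly 0.025, below the conventional 0.05 threshold, so the new button position looks like a genuine improvement rather than noise.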

3. You Can Automate Your Security Standards to Remove the Biggest Barrier to Rapid Innovation

On-prem, without security expertise, you could end up with a massive attack surface: loads of open ports, holes in firewalls and such. And in my experience, no one would even know which ports were open because the spreadsheet tracking them hadn’t been updated in four months.

In the cloud, it is much easier to shrink the attack surface. If you create a container or a serverless function, for example, nobody has access to it unless you allow them to. Declarative access management, with roles and policies, prevents unauthorised individuals from poking around where they don’t belong. Less security expertise is needed, as your cloud provider takes care of a good chunk of it.
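Cloud IAM systems such as AWS IAM evaluate policies along these lines: everything is denied by default, an Allow statement grants access, and an explicit Deny overrides any Allow. The sketch below models only that core rule with simple wildcard matching. The `Statement` type and the action and resource names are hypothetical, and real policy evaluation involves much more (conditions, resource-based policies, permission boundaries and so on).

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Statement:
    effect: str                  # "Allow" or "Deny"
    actions: tuple[str, ...]     # shell-style wildcards, e.g. "payments:Invoke*"
    resources: tuple[str, ...]


def is_allowed(statements: list[Statement], action: str, resource: str) -> bool:
    """Deny by default; an explicit Deny beats any Allow."""
    allowed = False
    for s in statements:
        matches = (any(fnmatchcase(action, a) for a in s.actions)
                   and any(fnmatchcase(resource, r) for r in s.resources))
        if not matches:
            continue
        if s.effect == "Deny":
            return False         # explicit deny always wins
        if s.effect == "Allow":
            allowed = True
    return allowed               # implicit deny if nothing allowed it


policy = [
    Statement("Allow", ("payments:Invoke*",), ("fn/bill-split",)),
    Statement("Deny", ("payments:InvokeAdmin",), ("*",)),
]
print(is_allowed(policy, "payments:InvokeUser", "fn/bill-split"))
print(is_allowed(policy, "payments:InvokeAdmin", "fn/bill-split"))
```

Because the rules are data rather than tribal knowledge, anyone can read the policy and see exactly who can do what.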

And your security standards can be very easily defined and replicated across your entire infrastructure. It’s even very easy for others to understand how security has been defined.

This means that security configurations can then be automatically deployed, tested, monitored and reported on across your entire IT estate. Not to be sniffed at! If any of your teams try to deploy something which breaks those rules, their pipelines can identify it and will stop before any damage is done.

By making security standards easy to consume (for humans as well as machines), you instantly remove the biggest constraint on rapid, effective security (reading the 97-page security manual) and make it scalable by translating it into automated scripts.
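As a minimal sketch of compliance as code, assume a hypothetical JSON-like description of the resources a deployment would create (real tools read things like Terraform plans, CloudFormation templates or live cloud APIs). Each rule is a small function, and the pipeline step fails if any rule reports a violation. The rule names and resource fields are illustrative only.

```python
from typing import Any, Callable, Optional

Resource = dict[str, Any]
Rule = Callable[[Resource], Optional[str]]


def no_ssh_from_internet(res: Resource) -> Optional[str]:
    """Security groups must not open port 22 to the whole internet."""
    if res.get("type") != "security_group":
        return None
    for ingress in res.get("ingress", []):
        if ingress.get("port") == 22 and ingress.get("cidr") == "0.0.0.0/0":
            return f"{res['name']}: SSH (port 22) is open to 0.0.0.0/0"
    return None


def buckets_encrypted(res: Resource) -> Optional[str]:
    """Storage buckets must have encryption at rest switched on."""
    if res.get("type") == "bucket" and not res.get("encrypted", False):
        return f"{res['name']}: bucket is not encrypted"
    return None


RULES: list[Rule] = [no_ssh_from_internet, buckets_encrypted]


def violations(resources: list[Resource]) -> list[str]:
    """Run every rule against every resource and collect the failures."""
    found = []
    for res in resources:
        for rule in RULES:
            message = rule(res)
            if message is not None:
                found.append(message)
    return found


def gate(resources: list[Resource]) -> int:
    """Print any violations and return a process exit code (non-zero fails CI)."""
    problems = violations(resources)
    for problem in problems:
        print("POLICY VIOLATION:", problem)
    return 1 if problems else 0


planned = [
    {"type": "security_group", "name": "web-sg",
     "ingress": [{"port": 443, "cidr": "0.0.0.0/0"}, {"port": 22, "cidr": "0.0.0.0/0"}]},
    {"type": "bucket", "name": "bill-split-receipts", "encrypted": True},
]
exit_code = gate(planned)
```

In a real pipeline step the script would end with `sys.exit(gate(planned))`, so the non-zero exit code stops the deployment before anything is created.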

It also becomes less of a mythical dark art that only a few people in the company understand. Instead, everyone is aware of how it works, which breaks down silos of responsibility.

After all, it doesn’t matter how fast Tara can code if she doesn’t have a means of guaranteeing security just as quickly!

There’s tons to say here. Check out the biggest benefits of compliance-as-code as well as how to shift security left in your pipeline.

The Ultimate Goal of Cloud-Native Architecture: Rapid, Data-Driven Experimentation

Ultimately, the end goal you’re looking for is this:

Whenever someone says “what if... THIS?”

You can confidently lunge forward and reply: let’s try it!

Development becomes a science: you hypothesise, test, analyse the data and conclude the next course of action.

Did sales go down? Leave the button where it was.

Did sales go up? Move the button for everyone!

Tara can have an idea in the morning and it will have lunged its way to production by that afternoon—all without compromising on quality, security, or performance.

She no longer has to work out a business plan first, including how much hardware she needs and how much it’s going to cost.

But... it’s not that simple, is it?

OK. This all sounds great on paper. But we know it’s not so simple to turn the oil tanker around in real life.

We get it.

But we have jumped into massive organisations before and kickstarted cloud-native projects in only a few weeks.

In only five weeks we helped Green Flag to deliver a serverless cloud-native platform to break away from their legacy technology and build the foundation for a modern rescue service.

New features can now go from dev to prod in under 30 minutes using infrastructure that is much more cost-effective.

We helped Direct Line Group to create a serverless insurance start-up that utilises machine learning to treat customers on an individual basis. In DLG’s own words it is “built for tomorrow”: i.e. built around experimentation, so they can take a steer from what their customers want and adapt accordingly.

But what do you do once you have your architecture in place? You still have to write your code. Let’s turn to how we develop software in a cloud-native environment.

Cloud-Native Software Development: What It Is and Why It Matters

Cloud-native software development is about creating software in a way that maximises the game-changing capabilities of the public cloud.

Having the power of a massively scalable, flexible, distributed platform with a huge range of on-demand tools and services inevitably changes how you develop software.

How the Cloud Impacts How You Develop

Let’s rejoin our keen young developer, Tara. This time she also has a friend, Dan, who heads up the Ops team.

Together, they’re going to take us through the three ways they are impacted by the cloud.

- The Cloud Turns Your Ops Team into an API

- Deploying to Production Becomes Routine

- You Can Deploy Small, Fast and Often. With No Fear.

Let’s jump in.

1. The Cloud Turns Your Ops Team into an API

In the cloud, infrastructure of all kinds can be defined as code and be deployed by running a quick script.

Effectively, the cloud turns your ops team into an API.

In the cloud-native world, Tara has turned Dan into code.

(Don’t worry, Dan learns some cloud and rejoins Tara later in the tale…!)

This frees Tara from all kinds of constraints (waiting for servers, negotiating with procurement teams, being blocked by environment logjams etc.).

All she has to worry about is her code.

Everything else just...happens.

The consequence? The flow of software is massively accelerated through the organisation.

Development becomes much faster.
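Infrastructure-as-code tools such as Terraform or AWS CloudFormation broadly work by comparing the infrastructure you declare with what currently exists, then making only the changes needed to close the gap. The toy `plan` function below shows that diffing step. The resource names and settings are hypothetical, and real tools also handle dependencies, ordering and the provider API calls themselves.

```python
from typing import Any

Spec = dict[str, dict[str, Any]]  # resource name -> its settings


def plan(desired: Spec, actual: Spec) -> list[tuple[str, str]]:
    """Compute the actions needed to make `actual` match `desired`."""
    actions = []
    for name in sorted(desired.keys() - actual.keys()):
        actions.append(("create", name))       # declared but missing
    for name in sorted(desired.keys() & actual.keys()):
        if desired[name] != actual[name]:
            actions.append(("update", name))   # exists but has drifted
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(("delete", name))       # exists but no longer declared
    return actions


desired = {"api": {"memory_mb": 512}, "db": {"engine": "postgres"}}
actual = {"api": {"memory_mb": 256}, "old-queue": {"kind": "fifo"}}
print(plan(desired, actual))
```

Running the same plan twice against an up-to-date environment produces no actions, which is what makes "just run the script again" safe.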

2. Deploying to Production Becomes Routine

But even if you can develop quickly, what about the problem of deploying to production?

Deployments used to be so risky that the threat a Friday deployment posed to a dev’s weekend plans became meme-worthy.

In this code-defined world, however, deployments are reduced to nothing more than an API call.

The fact that deployments (and automated roll-backs!) are so easy instantly lowers the danger of running code in production to almost zero.

It makes running code in production totally routine.

Like brushing your teeth. It’s just something you do without thinking about it.

And this massive de-risking of the whole deployment/roll-back hullabaloo removes the biggest obstacle that stood between Tara and her user: FEAR!
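Part of what makes rollback cheap is that the previous version is still deployed: a release just moves a pointer to the new version, and a rollback moves it back. (AWS Lambda aliases, for example, point at published function versions.) The toy model below, with a hypothetical `Service` class, illustrates that idea and is not any provider's API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Service:
    """Toy model: old versions stay deployed; `live` says which one serves traffic."""
    versions: dict[str, str] = field(default_factory=dict)  # version -> build artifact
    live: Optional[str] = None
    history: list[str] = field(default_factory=list)       # previously live versions

    def deploy(self, version: str, artifact: str) -> None:
        # Ship the new version alongside the old ones, then flip the pointer.
        self.versions[version] = artifact
        if self.live is not None:
            self.history.append(self.live)
        self.live = version

    def rollback(self) -> None:
        # Rolling back is just pointing `live` at the previous version again.
        if not self.history:
            raise RuntimeError("no previous version to roll back to")
        self.live = self.history.pop()


svc = Service()
svc.deploy("v1", "build-101.zip")
svc.deploy("v2", "build-102.zip")
svc.rollback()
print(svc.live)
```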

3. You Can Deploy Small, Fast and Often. With No Fear.

Deployments used to be a lot of effort. Because of the risk, they would be done at night (when traffic was low) and would often go wrong, leading to outages that Tara would have to spend all night fixing.

No surprise then that, when deployments were risky and painful, Tara would try to smash as much code as possible into as few deployments as possible.

This is seriously stressful.

It also puts a lot of pressure on the code you release being what the customer wants!

(You don’t want to bust a spleen deploying a new feature that your user doesn’t actually give a hoot about!)

But when the flow of code is freed from constraints...suddenly the whole problem dissolves!

You can release as small, fast and often as you like. No fear.

Tiny chunks of code can whizz through the whole development lifecycle insanely rapidly.

But developing and deploying fast is no use if you don’t know that what you’re doing is the right thing!

Why Cloud-Native Development Matters

In the cloud-native world, Tara can dev fast, get tools quick, deploy on-demand and fix issues on the fly.

These huge quantitative changes in the size, sophistication, safety and frequency of code releases have a profound qualitative impact on how you develop software.

This is analogous to the history of writing.

The invention of ink, the printing press, the typewriter, the word processor, the internet, Medium, etc. enabled revolutions in the quantity of writing produced that had huge qualitative impacts on how people relate to writing.

Over time, trying ideas out became cheaper and easier. You can work much more freely with your ideas on a Google Doc than you can with a quill and inkpot!

The changes that have occurred in the world of development are similar. Let’s let Tara explain the five main ways development has changed:

- Respond to change

- Adopt an experimental approach

- Bring agile to the core

- Exploit cloud-native architecture for multi-speed development

- Go from idea to app in the quickest time possible

1. Respond to Change

When Tara, firstly, can deploy stupidly fast at low risk and, secondly, is backed by an incredibly rich cloud-native tech stack, a very cool thing happens.

She discovers that she suddenly has confidence in her ability to respond to change.

Dan used to hate change. It could only mean bad news. He always used to push back when Tara wanted to deploy something.

Since then, Dan has learned how to automate deployments in the cloud! Now, he can deploy a new feature to production at the push of a button. If it fails, he rolls it back just as easily.

If, after deployment, Tara decides that maybe another type of database would be more suitable, she can just get Dan to roll that out. Or if users dislike a new feature, she can just ditch it before investing any more time and money.

No stress.

You see what happened there? All of a sudden Tara doesn't need to worry about getting everything right upfront.

She can respond to change without fear. She can move with her customers’ needs and follow them more closely than ever before!

2. Adopt an Experimental Approach

When change isn’t scary, you can try things out. Experiment.

Imagine that Tara is told that her software needs a password-less login feature.

In the old world she goes away, designs the whole thing, buys new hardware to build and run it on, gets her team working on it and voilà: three months later she unveils the new feature.

What if it turns out users don’t want it? Or management has changed its mind?

In the cloud-native dev world, you can rapidly build a minimum viable product, complete with in-built metrics, to measure whether or not users actually use the feature.

Then, based on genuine user feedback, Tara can make a decision on whether it's worth putting more dev time into the project or not.

The key point is this: Tara can invalidate ideas using data-driven feedback in DAYS. Not months.

This won’t necessarily bring joy to your product owners but it will bring value to your company!

3. Bring Agile to the Core

The cloud brings the principles of agile to the core.

You could even say that the cloud is the technological manifestation of agile principles.

It puts the power back in Tara’s hands!

No longer is there a council of architects that dictate the kinds of resources that Tara can use and how they are deployed.

Infrastructure is now part of the circle of development (see below), rather than being the platform that the circle was built on.

This means that a major bottleneck to ‘real agile’—infrastructure—can now be managed in an agile fashion. Emergent architecture, i.e. evolving your architecture one sprint at a time, becomes a reality!

Every station on the circle of development can then be managed on a sprint-by-sprint basis.

Agile becomes real!

4. Exploit Cloud-Native Architecture for Multi-Speed Development

The cloud lends itself naturally to both microservices and event-driven architectures.

With microservices, apps are built as a collection of services, which pairs up perfectly with the distributed nature of the cloud. Each service can be hosted individually and treated as its own isolated ‘unit’ without needing to touch the rest of the application.

So, Tara can make changes to (say) her payment system independently of her personalisation system.

The situation is similar with event-based fun.

Everything that happens in the cloud is (by default) recorded as an ‘event’ that can be ‘consumed’ by other teams/services for their own purposes.

For example, when you do a Google search, that appears within the Google ecosystem as an event that can be exploited (in principle) by other Google teams for use in their own domain.

So the Google Ads team could use it to determine what ads you see, independent of the Google Search team.

The key point is this: both microservices and events foster independence between teams.

The result?

Now all of Tara’s product teams can innovate at different speeds and can independently find the maximum rate of innovation for their area.

The natural cadence of innovation can emerge organically. To add or trial new features you need only subscribe to an already-existing event—and all you need to know is how to consume it. This makes you entirely decoupled and independent from other teams (except for the type and structure of the events they produce).

Whilst Tara could still do this in an on-prem environment, it would take a significant amount of time, effort and resource to build this event-based system (not to mention the amount of maintenance required to keep it operational). In the cloud it’s readily available for you at much less cost than rolling your own and it’s all managed by the cloud provider.
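To make the decoupling concrete: a consuming team only needs to know an event's type and structure. The sketch below assumes a hypothetical `SearchPerformed` event published as JSON. The ads team's handler reads only the fields it needs and ignores the rest, so the producing team can add fields without breaking its consumers.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class SearchPerformed:
    """The consumer's view of the event: its type plus the fields it relies on."""
    user_id: str
    query: str

    @classmethod
    def from_json(cls, raw: str) -> "SearchPerformed":
        data = json.loads(raw)
        if data.get("type") != "SearchPerformed":
            raise ValueError(f"unexpected event type: {data.get('type')!r}")
        # Only the fields this consumer needs are read; extra fields are ignored.
        return cls(user_id=str(data["user_id"]), query=str(data["query"]))


def ads_team_handler(raw: str) -> str:
    """A consumer owned by another team: it depends on the event, not the producer."""
    event = SearchPerformed.from_json(raw)
    return f"show ads related to {event.query!r} for {event.user_id}"


# The search team has since added a "session" field; the ads team's code is unaffected.
raw_event = json.dumps({"type": "SearchPerformed", "user_id": "u-42",
                        "query": "tents", "session": "abc123"})
print(ads_team_handler(raw_event))
```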

5. Go From Idea to App in the Quickest Time Possible

With cloud-native approaches you can respond to change, experiment, be super-agile, and take advantage of the latest technologies to get from idea to app in the quickest time possible.

All of which frees Tara to home in with almost obsessive focus on...the customer!

23 KILLER Cloud-Native Development Principles and Practices

What are the best ways of being cloud-native in practice?

It’s time to look at some really powerful principles and practices to bring cloud-native to life.

So let’s jump straight in. In no particular order, here are 23 of my top cloud-native principles and practices!

- View Software as a Service You Are Delivering to the Customer

- Use Feature Toggles/Flags to Open New Possibilities

- Canary Releases Keep Production Safe

- Ensure Your Release Pipeline Is Quicker Than a Greased Badger

- Use Trunk-Based Development for Max Deployment Frequency...If You Dare

- Tiny Commits Help You Go Faster

- Only Have a Production Environment

- Use Continuous/Synthetic Testing to Test New Features

- Test in Production to Massively Increase Confidence While Moving Fast

- Microservices and Containers / Functions and Serverless: The Cloud-Native Architectures of Choice

- Stateless Microservices Accelerate Change

- Observability (Monitoring, Tracing, Logging etc.) Lets You Know What’s Going On Everywhere

- Distributed Tracing and Logging Track Problems Through Your Entire Tech Stack

- Cross-Functional Teams Ensure End-to-End Accountability

- Choreography Makes for Resilient Event-Driven Architecture

- Code for Resilience!

- Code for Longevity!

- You’re Only ‘Done’ When It’s In the User’s Hands!

- Practice Breaking Things on Purpose

- Ensure You Have an Exquisite Requirements Elicitation Process

- Don’t Fear Failure; Or, Develop Effective Innovation Cycles

- Look After Your Team!

- Take Your Time!

The Killer Cloud-Native Principles and Practices!

#1 View Software as a Service You Are Delivering to a Customer

This is the big one.

Software is a means to an end. The end is the service to the customer.

It’s a shame that ‘SaaS’ has already been coined; otherwise it would have been the perfect synonym for ‘cloud-native’.

Every team should be using the power of the cloud to deliver the best possible service to the customer. It’s not about vanity tech projects or cool languages.

If it doesn’t serve the customer, ditch it!

The rest flows from there.

#2 Use Feature Toggles/Flags to Open New Possibilities

Feature toggles (sometimes called feature flags) are central to many of the cloud-native principles.

They are a way of changing the behaviour of your software without changing your code. You can turn certain features of your application on and off easily, deploying code but keeping certain features hidden from users until they are ready.

This means that deploying to production is no longer synonymous with releasing software to users.

So you can deploy to prod without worrying that you’ll break something or provide a poor service.

With feature flags, you can deploy a newly developed feature straight into production! You can do all the testing and tweaking you need to do there.

This means you don’t have to keep promoting a feature through all the different environments (nor host those additional environments).

All the time you save gets contributed towards faster delivery.

It also means that you can build in the option for certain features and then build them up in production. This helps you to dev at the pace of design!

(Assuming your pipeline is lightning-fast...see #4!)
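A minimal sketch of a feature flag, assuming a hypothetical in-process flag store (in practice flags usually live in a configuration service or a dedicated feature-flag product, so they can be flipped at runtime without a deploy). The new bill-splitting code is already deployed, but only the dev team sees it until the flag is switched on.

```python
from dataclasses import dataclass, field


@dataclass
class Flag:
    enabled: bool = False                                # released to every user?
    allow_users: set[str] = field(default_factory=set)   # e.g. the dev team only


# Stand-in for a flag store; real systems read flags at runtime from a config
# service so they can be flipped without redeploying.
FLAGS: dict[str, Flag] = {
    "bill_split": Flag(enabled=False, allow_users={"tara", "dan"}),
}


def flag_on(name: str, user_id: str) -> bool:
    flag = FLAGS.get(name)
    if flag is None:
        return False  # unknown flags fail closed
    return flag.enabled or user_id in flag.allow_users


def bill_split_button(user_id: str) -> str:
    # The feature's code is in production; the flag decides who actually sees it.
    if flag_on("bill_split", user_id):
        return "Split this bill"
    return ""


print(repr(bill_split_button("tara")), repr(bill_split_button("customer-7")))
FLAGS["bill_split"].enabled = True  # "release" to everyone without a deploy
print(repr(bill_split_button("customer-7")))
```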

#3 Canary Releases Keep Production Safe

A related technique is to use feature toggles to perform canary releases: turning on a specific feature only for a small percentage (or specific subset) of your user-base.

The canary in the mineshaft!

This limits the blast radius of any new feature and lets you easily roll back if something isn’t quite right.

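Cloud-native services support this straight out of the box: Amazon API Gateway offers canary release deployments, and AWS Lambda can split traffic between function versions using weighted aliases (with AWS CodeDeploy automating the shift).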

So if you release a new version of a function, AWS will deploy it and gradually shift traffic from the old function to the new one. It keeps increasing the share of requests until it either reaches 100% of traffic or an alarm fires (e.g. on errors or slow response times).

So if you accidentally code something that increases response time, the release might reach only (say) 5% of users before the response time hits (say) 250ms. At that point the alarm is raised, traffic is routed back to the original function and the new version is rolled back.

And most users never notice anything is off!

All while you’re off making a cup of tea.

This is a critical advantage that allows you to try things out at speed while protecting your user base from failure.
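Managed services run this loop for you, but the logic is simple enough to sketch. In the toy controller below, the step percentages, the 250ms threshold (taken from the example above) and the `observed_latency_ms` callback are all hypothetical stand-ins for a real deployment configuration and monitoring alarm.

```python
from typing import Callable, Sequence


def run_canary(
    steps: Sequence[int],
    observed_latency_ms: Callable[[int], float],
    threshold_ms: float = 250.0,
) -> tuple[str, int]:
    """Shift traffic to a new version step by step, rolling back on an alarm.

    `observed_latency_ms(percent)` stands in for reading a monitoring metric
    while `percent` of traffic goes to the new version.
    Returns ("promoted", final_percent) or ("rolled_back", percent_at_alarm).
    """
    for percent in steps:
        # A real controller would update traffic weights here, then wait for metrics.
        if observed_latency_ms(percent) > threshold_ms:
            # Alarm: send all traffic back to the old version.
            return "rolled_back", percent
    return "promoted", steps[-1]


steps = [5, 10, 25, 50, 100]
print(run_canary(steps, lambda pct: 310.0))          # slow from the start
print(run_canary(steps, lambda pct: 100 + 4 * pct))  # degrades as load grows
print(run_canary(steps, lambda pct: 120.0))          # healthy
```

The second case shows why stepping up gradually matters: a version that is fine at 5% of traffic can still breach the threshold at 50%, and it gets caught there instead of at 100%.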

#4 Ensure Your Release Pipeline Is Quicker Than a Greased Badger

Slow pipelines are death by a thousand cuts.

You push code, then wait five or ten minutes for it to build. Then you wait three minutes for it to deploy…then wait for this and that...it all adds up!

When it takes even a little while to push, you’ll end up bundling up the day’s code into one commit at the end of the day to avoid the bother.

And whatever slight barrier comes between you and deployment makes it that much more unlikely that you will do small commits (see #6).

And bigger, less frequent commits are a danger to the kind of rapid, experimental development that is the aim of cloud-native ways of working.

But how do you accelerate your pipeline?

One of the best ways is to break your code up into microservices or functions (see #10).

When your codebase is smaller and more distributed it means that build and test cycles are much faster.

Note that this will not work if you have a QA team at the end of your pipeline wanting to see everything before pushing to prod. This is the ultimate bottleneck!

If you have human processes (other than manual approval) then everything is slowed down. So you’ll need some additional hacks to automate as much as possible:

- CI/CD: automating the integration and delivery of code

- Automated testing: tests should be codified as much as possible into the pipeline (see #9)

- Static code analysis: automatically checking source code for bugs and risky patterns without running it

- Compliance as code: bake your security and compliance requirements into your code

- Good application architecture: use microservices, functions and event-based designs (see #10)

- Observability: track key metrics so you can see how quick your pipelines actually are (no guesstimating!) and improve that over time (see #12)

- Feature toggles: then you can put your QA into prod! (see #2)

A super fast pipeline is the foundation for your cloud-native SDLC. Without it all the practices and principles lose their magic!

#5 Use Trunk-Based Development for Max Deployment Frequency...If You Dare

The above principles, taken to their logical extreme, result in trunk-based development.

This sees even the smallest of changes automatically reviewed, tested and committed on the trunk. This is possible when you have pair programming practices in place, you fully trust your developers and your pipelines are good enough to be relied upon as the peer review of everyone’s code.

Most of the time companies have a peer review system. A dev creates a short-lived ‘branch’, makes their changes, opens a pull request and then gets someone to review it before merging back into master (or the ‘trunk’).

With trunk-based development, there is only the trunk! No branches. Everything gets pushed straight onto the trunk, with machines automatically checking the code. Canary releases (see #3) are used to stop bugs from reaching most of your users.

This is about the most extreme—but also the most impactful—way of increasing your deployment frequency.

But it depends on your risk appetite. There are many ways to put security at risk beyond just bad code!

Peer review can prevent more ‘human’ errors and (heaven forfend) maliciousness, ranging from spelling mistakes at one end to wilful sabotage at the other (e.g. code that rounds up financial transactions and sends the difference to an offshore bank account!).

Caveat emptor!

#6 Tiny Commits Help You Go Faster

All other things being equal, the smaller your commits, the faster you can go (and the faster you can home in on what your customer wants!).

(Assuming you have a greased-badger pipeline! See #4).

The more often you commit, the faster you get features to your users and the faster you find out about any issues: either something that doesn’t meet user requirements (see #20) or something that isn’t working properly.

Because you’re committing frequently, it’s easier to understand where an issue is.

Commit 6. No problem.

Commit 7. No problem.

Commit 8. Problem!

You know where to look.

And the more stuff there is in a commit, the harder it is to find the issue.
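When the history is longer than a handful of commits, walking it one by one becomes a binary search, which is what `git bisect` automates. Here is a minimal Python sketch of that search, assuming a hypothetical `is_good` check (for example, running your test suite at a given commit). Small commits pay off twice: fewer checks to find the culprit, and less code to read once you have found it.

```python
from typing import Callable, Optional, Sequence


def first_bad_commit(commits: Sequence[str],
                     is_good: Callable[[str], bool]) -> Optional[str]:
    """Binary-search an ordered history for the first commit where is_good fails.

    Assumes the history is 'good, then bad': once a commit is broken, every
    later commit is broken too (the same assumption git bisect makes).
    Returns None if every commit is good.
    """
    lo, hi = 0, len(commits)  # the first bad index always lies in [lo, hi]
    while lo < hi:
        mid = (lo + hi) // 2
        if is_good(commits[mid]):
            lo = mid + 1      # the break happened after mid
        else:
            hi = mid          # mid is bad; the first bad one is mid or earlier
    return commits[lo] if lo < len(commits) else None
```

With eight commits and a break at commit 8, this needs three checks instead of eight.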

This way it’s also much quicker to deploy updates and fixes. And these small increases in speed add up insanely over time, resulting in massively superior end products.

#7 Only Have a Production Environment

If you reaaaaallly want to go all-in on cloud-native: have only one environment.

People tend to build a lot of environments before production (dev, test, UAT, pre-prod etc.). But all the stuff before prod is only a de-risking device.

The only reason you have them is so that you can push changes that don’t affect production and that only your devs/testers can see.

The problem is that they slow down your pipeline. You get stuck in queues and have to deploy the same change repeatedly.

Having the option of feature toggles and canary releases changes how you think about environments. You can push code straight to prod and achieve the same goal: only allowing your devs to see it.

Canary release = only a small slice of production is affected

Feature flag = only the chosen few can see

This means you can use tiny commits (see #6) to release and test tiny increments. So you might push a Lambda function live. Test it. All good. Then an API Gateway that exposes that function. Then you put a feature flag on the API Gateway so only people on your intranet can see it...and so on!
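Both mechanisms boil down to small checks at request time. The sketch below assumes each request carries a stable user ID and the caller's IP address; the `10.0.0.0/8` range is a placeholder for your own intranet, and this is an illustration of the idea rather than how API Gateway or any particular flagging service implements it.

```python
import hashlib
import ipaddress

# Placeholder intranet range; substitute your own network's CIDR blocks.
INTRANET = ipaddress.ip_network("10.0.0.0/8")


def in_canary(user_id: str, percent: int) -> bool:
    """Deterministically place a user in the canary cohort.

    Hashing the user ID (instead of choosing randomly per request) means a
    given user always sees the same version, and raising `percent` only
    ever adds users to the cohort.
    """
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
    return bucket < percent


def feature_visible(client_ip: str, flag_enabled_for_intranet: bool) -> bool:
    """Feature flag: only callers on the intranet see the new feature."""
    return flag_enabled_for_intranet and ipaddress.ip_address(client_ip) in INTRANET
```

A request would reach the new code only if it passes both checks you care about, so you can start at `percent=0` with the intranet flag on, then widen the canary step by step.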

Ultimately, the only thing that matters is how software behaves once it’s in production.

Got performance tests doing well on the test environment? Who cares? I want feedback about how it works in production.

So why not release to production directly?

(See also #9 Testing in Production!)

#8 Use Continuous/Synthetic Testing to Test New Features

Continuous testing (sometimes known as ‘synthetic testing’) is the tactic of sending ‘synthetic’ (i.e. fake!) traffic to a system to test features.

By combining this with feature flags, synthetic data can be generated to monitor what would happen should a new feature be set live. The UI behaves ‘as if’ the feature had been released, but real users are none the wiser.

Say you wanted to release a new chat feature. It passes all tests, but the developer doesn’t know what effect releasing the feature will have on the back-end. By setting the feature to ‘synthetic’, the dev can see exactly what would happen if it were pushed live.

You can use synthetic traffic as your initial canary audience, so no real user ever gets errors.

This gives you a nice confidence-booster when releasing new features. By viewing telemetry and log data during a synthetic trial a dev can make a data-driven decision about whether or not to fully release a new feature.
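One way to picture a ‘synthetic’ flag is as a third state between off and on, in which only requests marked as synthetic take the new code path. The sketch below assumes your traffic generator tags its requests with a hypothetical `synthetic` marker; the chat feature and the state names are illustrative, not a specific flagging product's API.

```python
from dataclasses import dataclass
from enum import Enum


class FlagState(Enum):
    OFF = "off"              # nobody sees the new feature
    SYNTHETIC = "synthetic"  # only synthetic (fake) traffic exercises it
    ON = "on"                # everyone sees it


@dataclass
class Request:
    user_id: str
    synthetic: bool = False  # hypothetical marker set by the traffic generator


def new_chat_enabled(flag: FlagState, request: Request) -> bool:
    if flag is FlagState.ON:
        return True
    if flag is FlagState.SYNTHETIC:
        return request.synthetic
    return False


def handle(request: Request, flag: FlagState) -> str:
    # In a real service each branch would call different back-end code;
    # here we only report which path served the request.
    return "new-chat" if new_chat_enabled(flag, request) else "old-chat"


def run_synthetic_trial(flag: FlagState, n: int) -> dict[str, int]:
    """Send n synthetic requests and count which code path served them."""
    counts = {"new-chat": 0, "old-chat": 0}
    for i in range(n):
        counts[handle(Request(user_id=f"synthetic-{i}", synthetic=True), flag)] += 1
    return counts
```

With the flag set to `SYNTHETIC`, every fake request hits the new chat path while a real user's request still gets the old one; the back-end telemetry you collect during that trial is what drives the release decision.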

Critically, this can all be done in production (see #7: Only Have a Production Environment), but you can also try it out now in your other environments. Create synthetic traffic on a UAT environment and then trial some of these ideas to see whether feature flags and canary releases prevent errors and bugs. Get better at reducing them on UAT and then move to doing it in production.

#9 Test in Production to Massively Increase Confidence While Moving Fast

The ability to test in production is critical to cloud-native development.

I always say: “A test in production is worth ten in UAT!”

Why? Even if you are fully confident that your software works in UAT, there is still the worry that something might go wrong when you get to production.

But if it works for you in production, then that’s the best possible indication that it will work for your users.

Most people don’t do enough testing because it’s seen as a barrier to speed that gets in the way of deadlines. But while testing may increase lead times in the short term, it gives you much greater confidence when you finally get to production.

This is where testing in production itself comes in. It gives you the best of both worlds: speed AND confidence.

How do you do that? Automation.

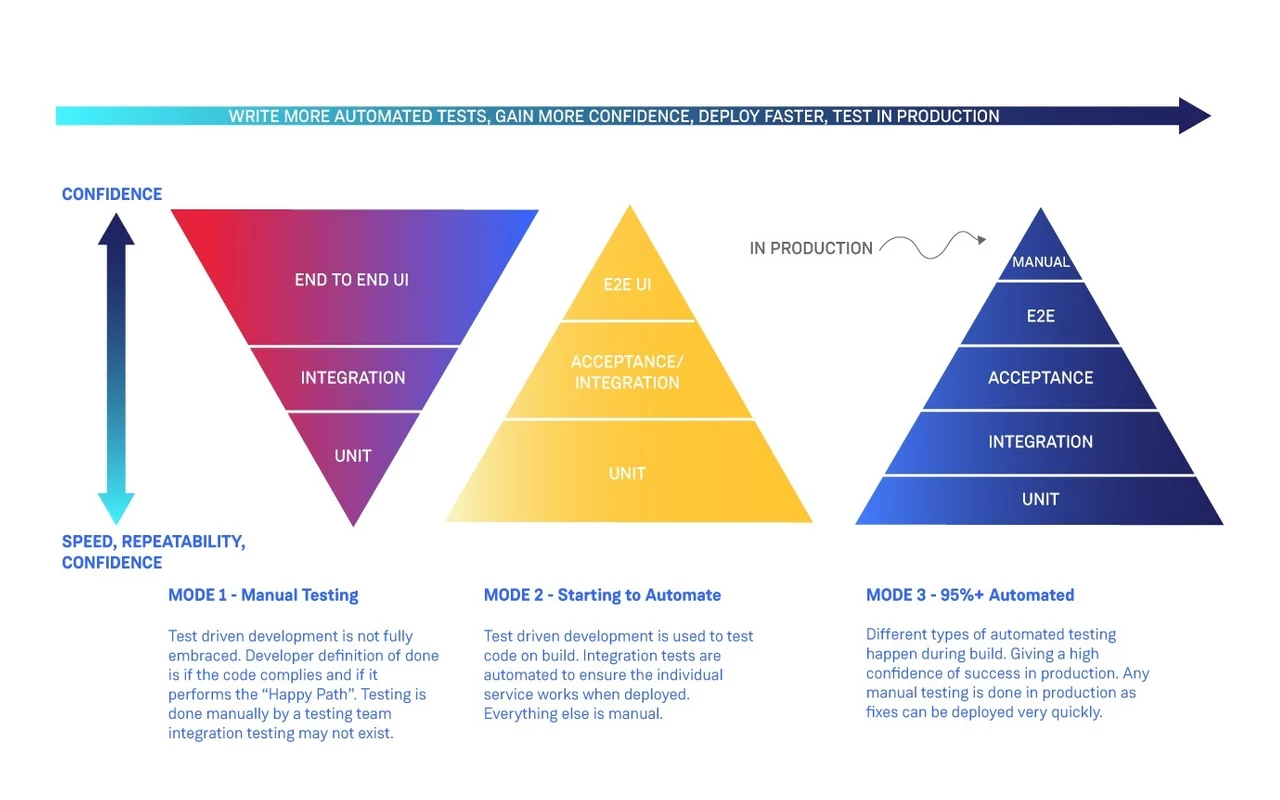

To demonstrate, let’s look at the testing pyramid.

As the pyramid shows, the more automated tests you write, the better you can balance the different types of testing.

Most people I see are in mode 1: manual testing. This is slow, mainly consisting of end-to-end UI testing and normally includes regression tests for each change (imagine doing that when committing every hour!?).

When you get to mode 3, you have a nice balance of different kinds of test that yield the highest confidence that your software will work as intended.

And at the point where 95% of your tests are automated, you can start testing in production.

You do this by using feature flags. This means you don’t have to wait for the final approval before pushing to prod. You can START in prod, running all your (automated and well-balanced!) unit/integration tests while the feature remains hidden from users.

Note: you will always have some humans at the top of your pyramid. There are always certain things that computers can’t pick up, like spelling mistakes. But that manual testing can also be done in production, where fixes can be deployed quickly (and because you’re already in production, you don’t need to move from test to prod. You’re already there!).

Containerised microservices and serverless functions are two architectural approaches that make it as straightforward as possible to enable all of the above principles.

Microservices and containers

Microservices are an architectural approach to application development where each feature is built as a standalone service and integrated together.

With microservices, apps are built as a collection of services, which pairs up perfectly with the distributed nature of the cloud. Each service can be hosted individually and treated as its own isolated ‘unit’ without needing to touch the rest of the application.

The benefit of this approach is that it becomes possible to build, test, and deploy individual services without impacting other services.

Even though a microservices architecture is more complex, especially at the start, it brings much-needed speed, agility, reliability, and scalability.

But managing your app as distinct microservices has implications for your infrastructure.

Every service needs to be a self-contained unit. Services need their own allotment of resources for computing, memory, and networking. However, both from a cost and management standpoint, it’s not feasible to 10x or 100x the number of VMs to host each service of your app as you move to the cloud.

This is where containers come in. They are extremely lightweight, and provide the right amount of isolation to make a great alternative to VMs for packaging microservices, enabling all the benefits they offer.

Functions and Serverless

Serverless is a computing model in which the cloud provider dynamically manages the allocation and provisioning of servers.

You only have to look after your code! Everything else happens invisibly and rather magically.

Serverless began mostly as function-as-a-service but has since expanded outside of functions to things like databases and machine learning.

It’s the “most cloud-native” that you can get because the back-end is entirely invisible and automated. It represents the lowest possible operational overhead currently available.

Serverless computing is a key enabler for microservices-based or containerised applications. It makes infrastructure event-driven, completely controlled by the needs of the services that make up an application.
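To make "you only look after your code" concrete, here is a sketch of a function for the bill-splitting feature from the introduction, written in the `handler(event, context)` shape that AWS Lambda uses for Python. It assumes an API Gateway proxy-style event (request body as a JSON string under `event["body"]`, response as `statusCode` plus `body`); the field names `amount` and `people` are hypothetical.

```python
import json


def handler(event, context):
    """Split a bill evenly. Stateless: everything it needs arrives in `event`."""
    try:
        payload = json.loads(event.get("body") or "{}")
        amount = float(payload["amount"])
        people = int(payload["people"])
        if people < 1:
            raise ValueError("people must be at least 1")
    except (KeyError, ValueError, TypeError) as exc:
        # Bad input is reported to the caller rather than crashing the function.
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}

    share = round(amount / people, 2)
    return {"statusCode": 200, "body": json.dumps({"share": share})}
```

There is no server, port or process lifecycle in sight: the platform invokes the function once per event and nothing is kept between invocations.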

The main benefit is that it allows developers to focus entirely on delivering value and enables them to release that value at pace via small, rapid deployments. Plus, the high degree of abstraction also allows you to completely break free from legacy architecture and processes that may have been preventing you from releasing at speed.

If you can jump right in with serverless, it can be a great option to kickstart your digital innovation. But don’t discount containers, which are very powerful and can still be very usable. And bear in mind that serverless and containers can be combined; it doesn’t have to be one or the other.

#11 Stateless Microservices Accelerate Change

With microservices, apps are built as a collection of individual services that are each treated as their own isolated ‘unit’ without needing to touch the rest of the application.

The benefit of this approach is that it becomes possible to build, test, and deploy individual services without impacting other services. This massively accelerates the development lifecycle, allowing you to make many small changes at speed rather than applying big, slow changes (see #6 [tiny commits]).

BUT: if your microservices hold state in memory and they fail, that state will be lost. This will mess up your system.

If they are stateless, they can fail, be launched again and pick up exactly where they left off, because their progress lives outside them (in a database, for example) rather than in memory.

So if your stateless microservice is processing data from a database and it dies, then it will start up again and resume where it left off. Not so with a stateful microservice.
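Here is a minimal sketch of that resume behaviour. The worker keeps no progress in memory; its only ‘state’ is an offset written to an external store after each record. A plain dict stands in for that store here; in reality it would be a database or cache that outlives the process, and the worker and record names are hypothetical.

```python
from typing import Callable, MutableMapping, Sequence


def run_worker(
    records: Sequence[str],
    store: MutableMapping[str, int],
    worker: str,
    handle: Callable[[str], None],
) -> None:
    """Process records, resuming from the offset saved in an external store."""
    start = store.get(worker, 0)
    for offset in range(start, len(records)):
        handle(records[offset])
        # Checkpoint only after the record succeeds. A crash between handle()
        # and this line means the record is processed again on restart, so
        # handle() should be idempotent.
        store[worker] = offset + 1
```

If the first run dies on the third record, the store holds offset 2, and a freshly launched instance starts from the third record without being told anything else.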

This makes your microservices more similar to functions/serverless. When functions are dormant, they don’t exist! And when they start up, they know nothing of the world. This means there’s less stuff that can stop them working as intended.

Statelessness also brings confidence. You know when things go wrong in your house and you’re told to turn it off and back on again? That’s clearing state and starting from scratch again. If your microservice is stateless it always starts from scratch and because of that you know that it will run the same way every time.

#12 Observability (Monitoring, Tracing, Logging etc.) Lets You Know What’s Going On Everywhere

Cloud platforms offer an ever-rising tide of operability offerings.

There are a huge number of out-of-the-box tools and services for monitoring, logging, tracing and so on, along with built-in metrics for things like response times and failure rates.

This makes high-quality operability—knowing what’s going on, everywhere, all the time—the default!

Observability has two main benefits:

You know when something has gone wrong!

Something’s broken? You get an instant Slack/SMS/email.

Direct improvements over time.

You can’t improve what you can’t measure.

Do you know how long your CI/CD takes? We know we need a fast pipeline (see #4). With good observability you’ll be able to identify the ‘key constraint’ and get stuck in to improve it.
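Once each pipeline run records how long its stages took, finding the key constraint is simple arithmetic. The sketch below assumes you already export per-stage durations in seconds (the stage names and numbers in the usage note are made up). It averages across runs so that one unlucky run doesn't send you chasing the wrong stage.

```python
from statistics import mean


def key_constraint(runs: list[dict[str, float]]) -> tuple[str, float]:
    """Return the stage with the highest average duration (in seconds)."""
    stages = runs[0].keys()
    averages = {stage: mean(run[stage] for run in runs) for stage in stages}
    slowest = max(averages, key=averages.get)
    return slowest, averages[slowest]
```

For two hypothetical runs of `{"build": 60, "test": 300, "deploy": 90}` and `{"build": 70, "test": 280, "deploy": 100}`, the test stage comes out as the constraint at an average of 290 seconds, so that is where improvement effort goes first.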

Don’t forget about your users in all of this. Make sure users are incentivised to give feedback.

#13 Distributed Tracing and Logging Track Problems Through Your Entire Tech Stack

The cloud is a distributed event-based system.

Distributed = the different parts are not in the same place

Event-based = every change to the system is recorded as an ‘event’ that can be consumed by other services/teams.

This has many advantages, but demands high visibility. In a monolithic application you can see what’s going on because it’s all in one place. In the cloud you need to know where all these distributed events are going!

You need to understand the consequences of something happening over here on something happening over there.

So one part of a system might make an API call that follows a path of different event-driven happenings before eventually resulting in an email being sent. If there is an issue with the email you need to be able to trace back the path of events to see where the error comes from.

For that you need distributed tracing and logging: i.e. understanding the path of events as they propagate through your system.

On one cloud adoption project at a large energy company, I set up a system so that every request had a unique request ID that could be tracked through the whole tech stack.

If something failed somewhere I would be instantly notified via Slack with the request ID along with time-stamped logs. I could see everything that had happened in relation to that request ID throughout the system...without having to do anything!

I could then instantly see where the error was. It would then take only a few minutes to deploy the fix and voilà: prod is working again!
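The core of that setup is small: attach an ID to each request, stamp it on every log line, and forward it to every downstream call. Below is a sketch in Python using the standard library's `contextvars` and a `logging.Filter`. The `X-Request-ID` header is a common convention rather than a standard, and the service and log message are hypothetical; the original system's implementation isn't described, so this is only one way to do it.

```python
import contextvars
import logging
import uuid

# Holds the ID of the request currently being handled ("-" outside a request).
request_id = contextvars.ContextVar("request_id", default="-")


class RequestIdFilter(logging.Filter):
    """Stamp every log record with the current request ID."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.request_id = request_id.get()
        return True


logger = logging.getLogger("billing")
stream_handler = logging.StreamHandler()
stream_handler.addFilter(RequestIdFilter())
stream_handler.setFormatter(
    logging.Formatter("%(asctime)s [%(request_id)s] %(levelname)s %(message)s")
)
logger.addHandler(stream_handler)
logger.setLevel(logging.INFO)


def handle_request(headers: dict[str, str]) -> dict[str, str]:
    """Handle one request and return the headers to forward downstream."""
    # Reuse the caller's ID so the trail spans services; mint one if this
    # service is the entry point.
    rid = headers.get("X-Request-ID") or str(uuid.uuid4())
    token = request_id.set(rid)
    try:
        logger.info("charging card")
        return {"X-Request-ID": rid}  # pass the same ID on to the next service
    finally:
        request_id.reset(token)       # don't leak the ID into the next request
```

Searching your log store for one ID then returns the time-stamped trail for that request across every service that forwarded it.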

Bonus Tip:

A massive tip to make the most of this is to make sure you have really good error messages.

I always say: an error message is like a gift to your future self.

A good one will not only tell you that something is wrong but why. For that reason it’s critical to log the context around the failure: the time and date, what decision preceded it, what data was sent back, etc.
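One way to make that gift automatic is an exception type that carries its own context. The sketch below is illustrative; the payment example and field names are hypothetical. The error states the reason, the inputs behind the decision, the rule that triggered it, and a UTC timestamp, so the log line alone is enough to start debugging.

```python
import json
from datetime import datetime, timezone


class PaymentDeclined(Exception):
    """An error that carries the context needed to diagnose it later."""

    def __init__(self, reason: str, **context):
        self.reason = reason
        self.context = context
        self.at = datetime.now(timezone.utc).isoformat()
        super().__init__(
            f"{reason} | context={json.dumps(context, sort_keys=True)} | at={self.at}"
        )


def charge(account_id: str, amount: float, balance: float) -> float:
    if amount > balance:
        # Say *why* it failed and include the inputs that led to the decision.
        raise PaymentDeclined(
            "insufficient funds",
            account_id=account_id,
            amount=amount,
            balance=balance,
            decision="amount > balance",
        )
    return balance - amount
```

Compare logging `str(err)` from this with a bare `"payment failed"`: the former tells your future self which account, which numbers and which rule were involved.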

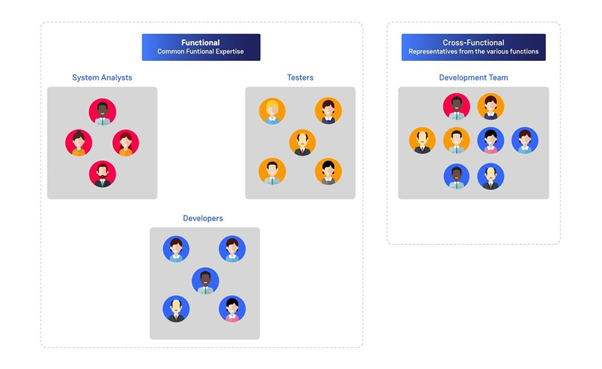

#14 Cross-Functional Teams Ensure End-to-End Accountability

A cross-functional team is a group that features whatever expertise is needed from across the business to ensure end-to-end responsibility for a given feature or product.

So you’ll have a team at (say) a company that hosts a music-streaming service that is responsible for the recommendation feature. They’ll have a representative from UI, back-end, API design, BA, security etc. Everyone is - together - fully responsible for getting that feature to production.

This team structure massively enhances communication and collaboration while ensuring accountability from start to finish. The rapid productivity that results is really useful for cloud-native ways of working.

It stands in distinction to the siloed teams that have traditionally been the norm: developers, operations, security, business and so on each working in comparative isolation. The difficulty in effectively handing over work from silo to silo (and the abandonment of accountability) is a major cause of delays.

But how to get from siloed to cross-functional teams?

You can’t just take all your dev teams, slam them into the ops teams and go “now you’re cross-functional”.

You will destroy many lives.

The best way is to create one team of superstars that makes all the mistakes. They can learn everything: how to architect your systems, how to manage security etc.

You can use this first team as mentors that you seed into other teams to spread cross-functional ways of working. Individuals from the first team become leaders in the second, third, fourth teams.

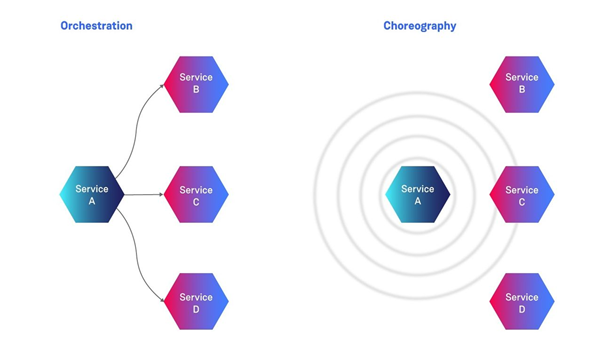

#15 Choreography Makes for Resilient Event-Driven Architecture

Most systems are programmed as follows: when A happens, do B.

This is known as ‘orchestration’. It’s a common part of cloud-native architectures.

In this context, A is in charge of what comes next, and so A must be aware of B (and also of C, D, E etc.). So you have to constantly update A on the state of B, C, D etc.

Choreography, in contrast, gives B the power to decide when it does anything. Because of this you can add C, D, E, F all the way to Z without having to change A.

This means that A is always going to work, because you’re not changing it from its current working state.

It also means you can try C or D and see if they work; if they don’t, you can easily tear them down and move on to E.

This choreography is done using an event-based mechanism, which is used to distribute your system into microservices and functions by creating a system “heartbeat”. Things can hang off that heartbeat and perform actions should they need to. The event system allows them to go down for periods (maybe for a release or bug fix), and when they come back online they can process events from where they left off.
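The sketch below is a toy, in-memory version of that heartbeat: an append-only event log where the publisher (A) only appends, and each consumer tracks its own position. Real event streams (Kafka consumer offsets, for example) work along similar lines, but this class and its method names are hypothetical, written only to show the shape of choreography.

```python
from collections import defaultdict
from typing import Callable


class EventLog:
    """Append-only event log; a toy stand-in for a real event stream."""

    def __init__(self) -> None:
        self.events: list[dict] = []
        self.offsets: dict[str, int] = defaultdict(int)  # per-consumer position

    def publish(self, event: dict) -> None:
        # The publisher (A) knows nothing about who, if anyone, is listening.
        self.events.append(event)

    def consume(self, consumer: str, handle: Callable[[dict], None]) -> int:
        """Process every event this consumer hasn't seen yet; return how many."""
        start = self.offsets[consumer]
        for event in self.events[start:]:
            handle(event)
            self.offsets[consumer] += 1
        return len(self.events) - start
```

Adding a new consumer is just a new name passed to `consume()`: it starts from the beginning of the log, and a consumer that was down simply catches up from its saved offset. The publisher never changes.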

Because they are so independent it means that they can be deployed in their own cycles, not waiting for other services to be released and having to sync up deployment times (one of the worst characteristics of a distributed monolith).

#16 Code for Resilience!

Coding for resilience means coding in a way that expects and anticipates failure.

In practice this looks something like this: if microservice A relies on microservice B and B goes down...A will not fail.

For example, ‘likes’ on Facebook are coded for resilience via an ‘optimistic user interface’. This means that when you ‘like’ a photo on Facebook, it will be registered on the front-end (by giving the little ‘thumbs up’) before it goes through to the back-end.

This means that even if the ‘like’ fails to register on the back-end, the system can keep trying until it goes through.

Critically, for the user it appears that the ‘like’ went through instantly regardless of whatever shenanigans need to go on in the back-end to eventually register the ‘like’.

The user’s experience is uninterrupted, despite a failure in the system.
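Here is a minimal sketch of the optimistic pattern described above: the like shows up immediately, and a separate sync step retries the back-end call with exponential backoff, keeping anything that still fails for the next attempt. This is not Facebook's actual implementation; the `send` callable, the use of `ConnectionError` as the failure signal and the retry parameters are all assumptions.

```python
import time
from typing import Callable


class LikeButton:
    """Optimistic UI: show the like at once, sync it to the back-end later."""

    def __init__(self, send: Callable[[str], None], max_attempts: int = 5,
                 base_delay: float = 0.1) -> None:
        self.send = send              # hypothetical back-end call; raises ConnectionError on failure
        self.max_attempts = max_attempts
        self.base_delay = base_delay
        self.liked: set[str] = set()  # what the user sees
        self.pending: list[str] = []  # likes not yet confirmed by the back-end

    def like(self, photo_id: str) -> None:
        self.liked.add(photo_id)      # UI updates instantly, before any network call
        self.pending.append(photo_id)

    def sync(self, sleep: Callable[[float], None] = time.sleep) -> None:
        """Retry pending likes with exponential backoff; keep any that still fail."""
        still_pending = []
        for photo_id in self.pending:
            for attempt in range(self.max_attempts):
                try:
                    self.send(photo_id)
                    break
                except ConnectionError:
                    if attempt < self.max_attempts - 1:
                        sleep(self.base_delay * 2 ** attempt)
            else:
                still_pending.append(photo_id)  # try again on the next sync
        self.pending = still_pending
```

If the back-end is down for longer than the retry budget, the user still sees their like; it just stays in `pending` until a later sync gets it through.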

And, ultimately, that’s all that matters: how your user feels when using your product. They want things to flow, so that engagement is maintained.

The frustration with systems is when users press a button and nothing happens!

#17 Code for Longevity!

Look at some code that you wrote five months ago.

If your immediate reaction is “Who the hell wrote this and what were they smoking?!”...you didn’t code for longevity.

There is a massive difference in your ability to understand the nuances of your code between when you’re in the thick of it and when you’re looking at it in a fresh state.

Coding for longevity means coding so that anybody can go back and understand it. This ensures that your code will sustain its usefulness even as personnel and systems change over time.

Peer reviews are an excellent way of ensuring that your code is legible.

#18 You’re Only ‘Done’ When It’s In the User’s Hands!

The definition of ‘done’, for almost everything, is when it is deployed and released to everyone in production.

Don’t settle for anything less!

Don’t spend forever pushing code to trunk if that code isn’t running in a prod environment with at least synthetic traffic flowing through it.

Even if your product isn’t released yet, you can have a prod environment with life-like traffic running through it.

The moment you decide that prod isn’t the definition of done is exactly when all your processes will start slowing down as conflicting incentives start to arise.

Your dev teams will report really successful sprints with loads of tasks completed, but your users will see no change to anything.

Remember our first principle: providing a service to the user is everyone’s ultimate goal!

#19 Practice Breaking Things on Purpose

Become the chaos developer.

Purposely code a (small and non-impactful) bug and push it to master…see how far it gets before it gets stopped.

(Make sure you have a good reversion strategy!)

Did it get to production? Did it affect end users?

Analyse why it happened, figure out how to prevent it from happening again. Document your findings and keep them for other teams to read and learn from.

#20 Ensure You Have an Exquisite Requirements Elicitation Process

There is no point in building something using feature flags and continuous testing in a fully cloud-native serverless environment with maximum observability using cross-functional teams…

...IF IT DOESN’T GIVE THE USER WHAT THEY WANT!

Finding out the real, nitty-gritty requirements is a difficult task. Often people go straight in for the design, imagining that they know what the user wants and needs.

Designs are not requirements!

A requirement might be that a user must be able to find a product on an e-commerce website within ten seconds (say).

A search bar would be a design to meet that requirement.

The search bar is not the requirement! Finding the product is.

All functionality must be traceable back to the atomic requirements that sparked its design and creation.

#21 Don’t Fear Failure; Or, Develop Effective Innovation Cycles

Effective innovation necessarily involves a few critical things.

One of those things is not fearing failure! Failure does not need to have an adverse effect on users (especially if you’ve followed #20!)

Another is to feel empowered to try new things. Then learn from your mistakes.

Finally (and most importantly), SPEAK TO YOUR USERS!

Invite them into your office. Develop with them sitting next to you.

Or even become your own user!

With these pieces in place, you can develop an effective innovation cycle: cycling between experiments, user feedback and learning, and eventually ending up with a user-centric working product.

#22 Look After Your Team!

Your team has a range of needs: physiological, security, belonging, self-esteem and (if you’re lucky) self-actualisation. (Check out my earlier blog post The Developer’s Hierarchy of Needs! for a more in-depth examination).

Sadly, fancy offices with foosball tables and fun things on the wall, while all well and good, don’t really contribute to the complex needs essential for an engineer to really thrive.

Often, they just end up perpetuating an endless string of distractions that limits the attention span of developers, for whom attention to detail is of the utmost importance.

Here are a few ideas.

- Ditch the open office/hot desking! Give developers their own comfortable personal space to work in. Let them pick their own laptop/keyboard/mouse/monitor/mug warmer/slippers etc.

- Create natural collaboration spaces: You can then encourage intra- and inter-team collaboration by creating natural meeting spaces along the main routes that people are ‘forced’ to take in their movements around the office (by the kitchen/toilets for example).

- Build team relationships: build those relationships beyond the day-to-day. Take your team out to an escape room or cookery classes or go-karting!

- Determine values and vision: create a vision for everyone to buy into. Co-ordinate this with values that your teams aim to uphold and use when hiring new members.

- Create a team manifesto: co-create “rules” with your teams as a “manifesto” of working practices: things everyone expects everyone else to abide by. Review and update them regularly.

- Document the journey: appreciate that building a system or a product is a journey and, whether successful or not, that journey can become a kind of folklore that people can feel a part of. This journey may never end; new chapters are always being written, and the members of your team are lead characters in it. Create a blog to document that journey, let everyone contribute and leave it accessible for those in years to come to read as part of their induction process.

- Appreciate that you don’t have all the answers!: Don’t be the alpha geek. You don’t know everything. Let your team adapt and find their own best practices. So long as everyone’s goals are aligned, your team will want to help make things better, but they won’t hang around long if you start barking orders at them. Coach them properly and show them the reasons behind the suggestions you are making.

#23 Take Your Time!

This is the final tip: take it easy! It’s a marathon, not a sprint.

The transition to cloud-native excellence is a long journey, not a sudden jump. Don’t expect your team to be able to do all of this from day one.

Slowly coach them through new processes.

Start by logging as much data as possible: lead times, Mean-Time-to-Recovery (MTTR), Mean-Time-to-Failure (MTTF), deployment frequency, customer satisfaction. Make it observable and watch it change over time based on the changes that you make.
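Several of these metrics are simple averages over timestamps you probably already have. The sketch below computes MTTR, deployment frequency and mean lead time from hypothetical incident, deploy and commit records. How you collect those timestamps (from your incident tool, CI/CD system, etc.) is left open.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    started: datetime
    resolved: datetime


def mttr(incidents: list[Incident]) -> timedelta:
    """Mean time to recovery: the average of (resolved - started)."""
    total = sum((i.resolved - i.started for i in incidents), timedelta())
    return total / len(incidents)


def deployments_per_day(deploy_times: list[datetime], days: int) -> float:
    """Deployment frequency over a window of `days` days."""
    return len(deploy_times) / days


def mean_lead_time(changes: list[tuple[datetime, datetime]]) -> timedelta:
    """Average time from commit to running in production, given (commit, deploy) pairs."""
    total = sum((deployed - committed for committed, deployed in changes), timedelta())
    return total / len(changes)
```

For example, two incidents that took 30 and 90 minutes to resolve give an MTTR of one hour, and 14 deploys in a week is 2 per day. Plotting these values per week, rather than showing a single snapshot, gives you the view of improvement over time described below.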

Put these metrics on a screen on the wall of your office, make sure it isn’t a snapshot of the current state but a view of improvements over time.

Once metrics are on the wall, attempt to change the parts that bring the most value first. Get everyone involved in improving these metrics. Make it part of people’s objectives but also explain WHY you’re doing it.

Don’t just go around barking orders to do “trunk based development” to developers who have never done it before, using pipelines that are slow and insecure that deploy to multiple different environments before production.

And don’t delete all of your non-prod environments just because you’ve read this here. Try it out with your non-prod environment. Build synthetic traffic that best matches production and see how well you can deploy to it without causing failures.

Practice. Makes. Perfect.

Holy Cow. That’s a Lot of Stuff.

Good luck with your cloud-native adventures!